Organizations increasingly use machine-learning models to allocate scarce resources and opportunities. For example, such models can help companies screen resumes to choose candidates for job interviews, or help hospitals rank kidney transplant patients by their likelihood of survival.

Users who deploy these models generally want the predictions to be fair, so they adopt techniques for reducing bias. This typically involves adjusting the features a model uses to make decisions or calibrating the scores it produces.

However, a collaborative study by researchers from MIT and Northeastern University suggests that traditional fairness methods fall short in tackling structural injustices and inherent uncertainties. In a recent publication, they demonstrate that carefully structured randomization of a model’s decisions can enhance fairness in specific contexts.

Consider a scenario in which multiple companies use the same machine-learning model to rank job candidates deterministically, with no randomization. A highly qualified candidate could then land at the bottom of every company’s list, perhaps because the model’s scoring unfairly weighs certain responses. Introducing randomization into the model’s decisions could guard against this, preventing a deserving person or group from always being passed over for a scarce opportunity such as a job interview.
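The scenario above can be illustrated with a small simulation. This is a minimal sketch, not the researchers’ method: the candidate names, scores, number of companies, and the functions `top_k`, `weighted_lottery`, and `interview_counts` are all hypothetical, and the lottery simply draws candidates in proportion to their scores.

```python
import random

def top_k(scores, k):
    """Deterministic rule: always return the k highest-scoring candidates."""
    return sorted(scores, key=scores.get, reverse=True)[:k]

def weighted_lottery(scores, k, rng):
    """Draw k distinct candidates; each draw is proportional to score."""
    pool = dict(scores)  # copy so the caller's scores are not modified
    chosen = []
    for _ in range(k):
        names = list(pool)
        pick = rng.choices(names, weights=[pool[n] for n in names], k=1)[0]
        chosen.append(pick)
        del pool[pick]  # no candidate is picked twice by one company
    return chosen

def interview_counts(scores, k, n_companies, select):
    """Count interviews per candidate when every company applies `select`."""
    counts = {name: 0 for name in scores}
    for _ in range(n_companies):
        for name in select(scores, k):
            counts[name] += 1
    return counts

# Hypothetical scores: C and D are nearly tied with A and B.
scores = {"A": 0.90, "B": 0.88, "C": 0.87, "D": 0.86, "E": 0.40}
rng = random.Random(0)  # fixed seed so the run is repeatable

deterministic = interview_counts(scores, 2, 50, top_k)
randomized = interview_counts(
    scores, 2, 50, lambda s, k: weighted_lottery(s, k, rng)
)
print(deterministic)
print(randomized)
```

Under the deterministic rule, candidates A and B receive all 50 interviews each and candidate C, who scores only slightly lower, receives none. Under the lottery, interviews are spread across candidates roughly in line with their scores, so near-ties are no longer shut out by every company.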

The researchers found that randomization is especially beneficial when a model’s decisions involve uncertainty or when the same group consistently receives negative decisions.

They present a framework for introducing a specific amount of randomization into a model’s decisions by allocating resources through a weighted lottery. The method, which can be tailored to the situation at hand, can improve fairness without hurting the efficiency or accuracy of the model.

“Even if fair predictions are possible, should we really base crucial social decisions on rankings or scores alone? As these algorithms determine more opportunities, the uncertainties inherent in their scores might grow. Our findings indicate that fairness often necessitates some form of randomization,” explains Shomik Jain, a graduate student at the Institute for Data, Systems, and Society (IDSS) and the paper’s lead author.

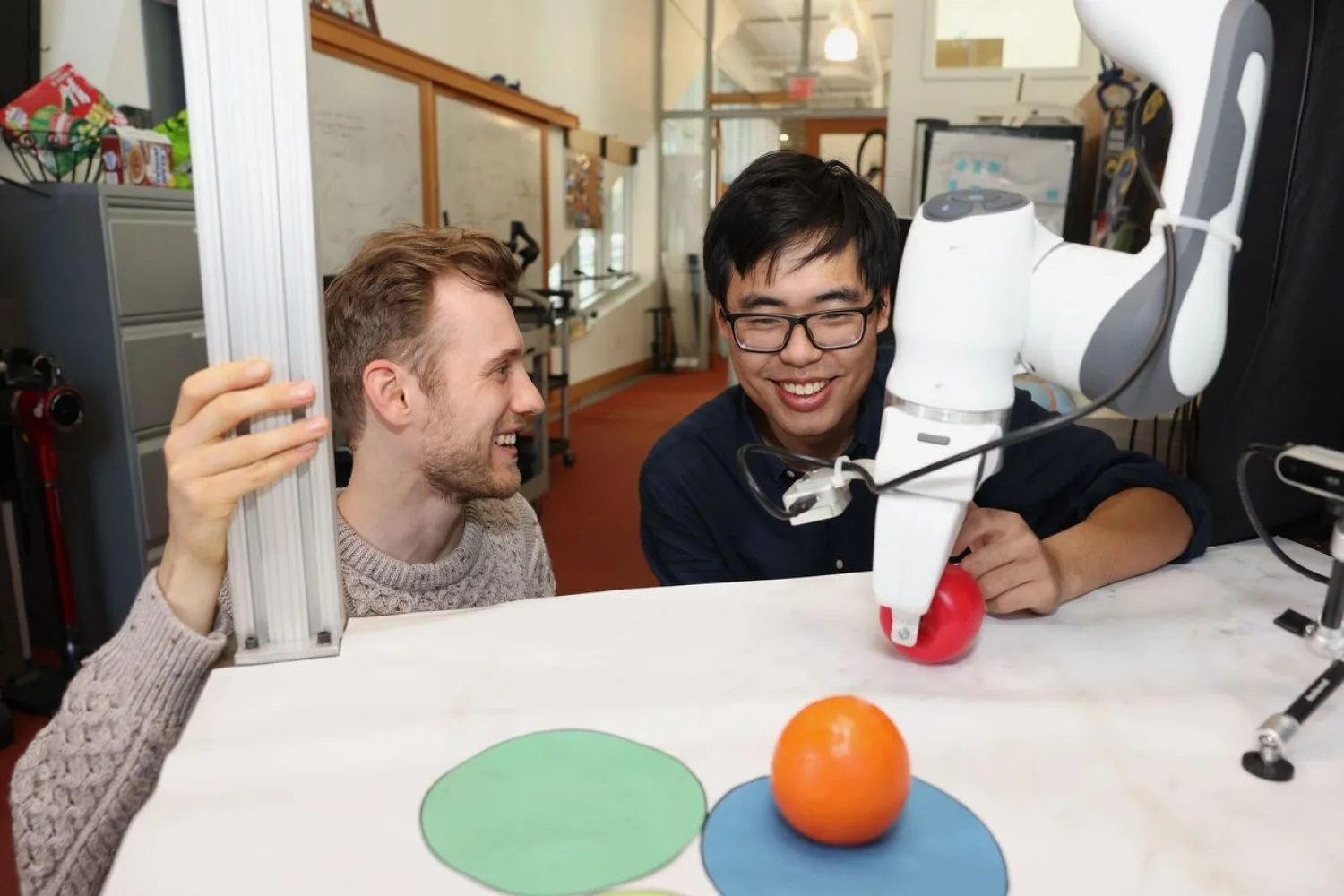

Joining Jain in this research are Kathleen Creel, assistant professor of philosophy and computer science at Northeastern University; and senior author Ashia Wilson, the Lister Brothers Career Development Professor in the Department of Electrical Engineering and Computer Science and a principal investigator at the Laboratory for Information and Decision Systems (LIDS). Their research is slated for presentation at the International Conference on Machine Learning.

Understanding Claims

This work builds upon a previous study in which the team examined the potential harms of using deterministic systems at scale. They identified how deploying a machine-learning model for resource allocation can exacerbate inequalities embedded in training data, thereby perpetuating bias and systemic disparities.

“Randomization serves as a valuable concept in statistics, and we were encouraged to discover that it meets fairness needs from both systemic and individual perspectives,” states Wilson.

In the new paper, they explore the conditions under which randomization can enhance fairness, anchoring their analysis in the ideas of philosopher John Broome, who advocated for lotteries as a means of allocating scarce resources equitably.

An individual’s claim to a scarce resource, such as a kidney transplant, can stem from merit, deservingness, or need. For instance, everyone has a right to life, and a person’s claim to a kidney transplant may stem from that right, Wilson explains.

“Recognizing that people have diverse claims to these limited resources underlines the necessity of honoring all individuals’ claims. If we perpetually allocate resources to those with the strongest claims, where is the fairness?” Jain asks.

This kind of deterministic allocation can cause systemic exclusion or exacerbate patterned inequality, which occurs when receiving one allocation increases a person’s likelihood of receiving future benefits. In addition, because machine-learning models can make mistakes, a deterministic approach can repeat the same mistake every time.

Randomization offers a solution to these dilemmas, yet not all model decisions should be equally randomized.

Embracing Structured Randomization

The researchers suggest utilizing a weighted lottery to modulate the level of randomization based on the uncertainty tied to a model’s decision-making process. Decisions deemed less certain warrant increased randomization.

“For kidney allocation, planning often revolves around projected lifespans, which can be profoundly uncertain. When two patients are merely five years apart in age, for example, it becomes significantly challenging to forecast outcomes. We aim to harness this uncertainty to inform our randomization strategy,” Wilson elaborates.
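One way to picture how uncertainty could set the amount of randomization is sketched below. It is an illustration under stated assumptions, not the framework from the paper: each candidate’s score is assumed to follow a normal distribution with a hypothetical mean and standard deviation, and the functions `uncertainty_lottery`, `selection_rates`, and `with_std` are invented for this example. The lottery resamples every score once and selects the top `k`, so the wider the uncertainty, the more often near-tied candidates trade places.

```python
import random

def uncertainty_lottery(estimates, k, rng):
    """Resample each score from Normal(mean, std), then select the top k."""
    sampled = {
        name: rng.gauss(mean, std) for name, (mean, std) in estimates.items()
    }
    return sorted(sampled, key=sampled.get, reverse=True)[:k]

def selection_rates(estimates, k, n_draws, rng):
    """Estimate each candidate's selection probability by repeated lotteries."""
    hits = {name: 0 for name in estimates}
    for _ in range(n_draws):
        for name in uncertainty_lottery(estimates, k, rng):
            hits[name] += 1
    return {name: h / n_draws for name, h in hits.items()}

def with_std(means, std):
    """Attach the same (assumed) standard deviation to every mean score."""
    return {name: (mean, std) for name, mean in means.items()}

# Hypothetical predicted scores for four candidates and two available slots.
means = {"A": 0.90, "B": 0.88, "C": 0.87, "D": 0.40}
rng = random.Random(0)  # fixed seed so the run is repeatable

confident = selection_rates(with_std(means, 0.001), 2, 2000, rng)
uncertain = selection_rates(with_std(means, 0.05), 2, 2000, rng)
print(confident)
print(uncertain)
```

When the assumed uncertainty is small, the lottery behaves almost like a deterministic ranking and candidate C is essentially never selected. When the uncertainty is large relative to the gaps between scores, C is selected a meaningful share of the time, while a candidate far below the others, such as D, remains unlikely to be chosen.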

Using statistical uncertainty quantification methods, the researchers determined how much randomization is needed in different situations. They found that calibrated randomization can lead to fairer outcomes for individuals without significantly reducing a model’s utility.

“Balancing overall utility with respect for individuals’ rights to scarce resources is critical, but often the compromise necessary is minimal,” Wilson notes.

Despite these findings, the researchers emphasize that there are circumstances where randomizing decisions would not enhance fairness and could even be detrimental, such as in criminal justice settings.

Nonetheless, randomization could improve fairness in other areas, such as college admissions, and the researchers intend to examine additional use cases in further studies. They also aim to investigate how randomization might affect other factors, such as competition and pricing, and how it could be used to make machine-learning models more robust.

“Our paper aims to illustrate the potential advantages of randomization, presenting it as a strategic tool. Ultimately, the extent to which it is applied is up to the stakeholders involved in the allocation process, and determining that is a question for further research,” Wilson concludes.

Photo credit & article inspired by: Massachusetts Institute of Technology