Identifying a single faulty turbine in a vast wind farm can feel like searching for a needle in a haystack, especially when hundreds of signals and millions of data points are involved.

To tackle this challenge, engineers often turn to deep-learning models that detect anomalies in time-series data, the repeated measurements recorded over time for each turbine.

However, training a deep-learning model to analyze time-series data is expensive and labor-intensive. The model may also need retraining after deployment, and many wind farm operators lack the machine-learning expertise to do it.

In a new study, researchers at MIT found that large language models (LLMs) could serve as more efficient anomaly detectors for time-series data. Importantly, these pretrained models can be used right away, without extensive modification.

The team introduced a framework called SigLLM, which includes a component that converts time-series data into text-based inputs an LLM can process. A user can feed the prepared data to the model and ask it to identify anomalies. The LLM can also be used to forecast future time-series data points as part of the anomaly detection process.

While LLMs didn’t surpass state-of-the-art deep learning models in anomaly detection, they did perform comparably to several other AI techniques. With further refinement, this framework could empower technicians to pinpoint potential issues in various types of equipment, including heavy machinery and satellites, before they escalate, all without the costly training of deep-learning models.

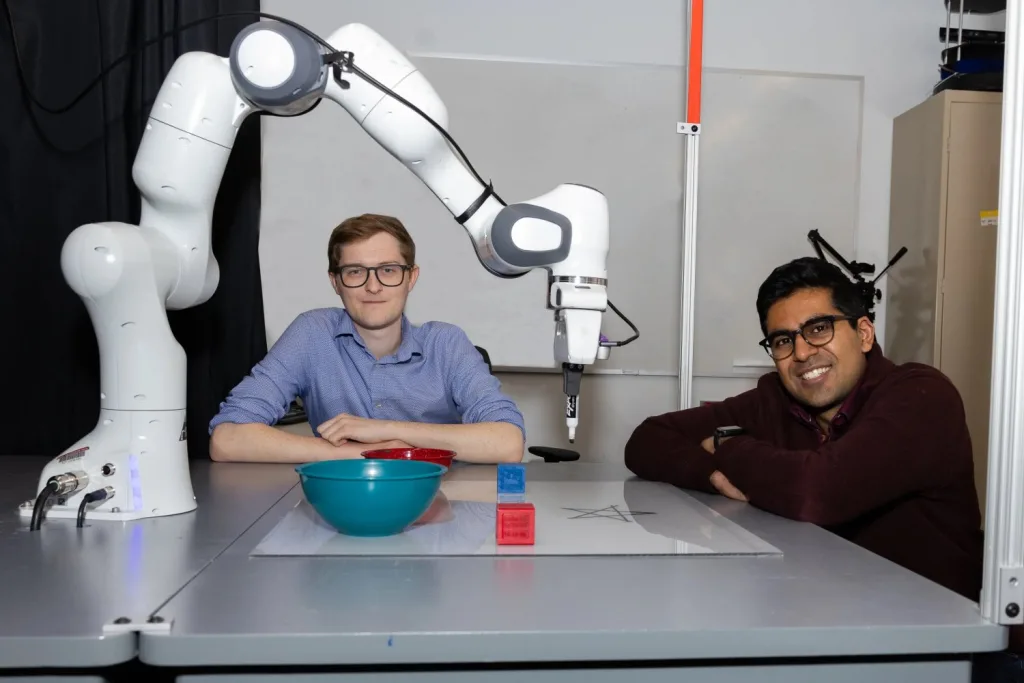

“This initial effort shows promising opportunities to leverage LLMs for complex anomaly detection tasks,” says Sarah Alnegheimish, a graduate student in electrical engineering and computer science (EECS) and the lead author of a paper about SigLLM.

Her research team included Linh Nguyen, another EECS graduate student; Laure Berti-Equille, a research director at the French National Research Institute for Sustainable Development; and senior author Kalyan Veeramachaneni, a principal research scientist at MIT’s Laboratory for Information and Decision Systems. Their findings will be presented at the IEEE Conference on Data Science and Advanced Analytics.

An Off-the-Shelf Solution

LLMs function in an autoregressive manner, meaning they recognize that the most recent values in sequential data rely on prior values. For example, models like GPT-4 can predict the next word in a sentence based on its preceding words.
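In standard textbook notation (not taken from the SigLLM paper), an autoregressive model factors the probability of a sequence $x_1, \dots, x_T$ into one conditional term per step, each depending only on the values that came before it:

$$
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1})
$$

For a language model the $x_t$ are tokens; for a time series rendered as text, they would be the tokens that spell out each measurement.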

Given that time-series data is sequential, the researchers hypothesized that the autoregressive characteristics of LLMs could make them ideal candidates for detecting anomalies within this data format.

They wanted a technique that avoids fine-tuning, a process in which engineers retrain a general-purpose LLM on a small, task-specific dataset to make it an expert at that one task. Instead, they use the LLM off the shelf, with no additional training.

The challenge was converting time-series data into text-based inputs the language model could handle. The researchers did this through a sequence of transformations that capture the most important parts of the time series while representing the data with as few tokens as possible. Tokens are the basic units of input an LLM processes, and more tokens mean more computation.

“If you don’t execute these transformations carefully, you risk omitting significant portions of your data, potentially losing vital information,” Alnegheimish explains.
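The article does not spell out the individual transformations, so the following is only a minimal sketch of one plausible encoding, not SigLLM's actual code. It assumes three steps: shift the series so every value is non-negative, round to a fixed number of decimals, and drop the decimal point so each value becomes a short integer. The function names and the `decimals` parameter are hypothetical.

```python
def series_to_text(values, decimals=2, sep=","):
    """Encode a numeric series as a compact string of non-negative integers.

    Returns the text and the offset needed to undo the shift.
    This is an illustrative encoding, not the one used by SigLLM.
    """
    if not values:
        return "", 0.0
    offset = min(values)          # shift so the smallest value becomes 0 (no minus signs)
    scale = 10 ** decimals        # multiplying by this drops the decimal point
    ints = [round((v - offset) * scale) for v in values]
    return sep.join(str(i) for i in ints), offset


def text_to_series(text, offset, decimals=2, sep=","):
    """Invert series_to_text, up to the rounding applied during encoding."""
    scale = 10 ** decimals
    return [int(tok) / scale + offset for tok in text.split(sep) if tok.strip()]


text, offset = series_to_text([-0.5, 0.0, 0.25])
print(text)    # 0,50,75
print(offset)  # -0.5
```

Rounding is where information can be lost: with `decimals=0`, the three values above would collapse to `0,0,1`, which illustrates the kind of risk the quote describes. How many tokens a given string costs depends on the model's tokenizer, which this sketch does not model.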

With the conversion process established, the team devised two distinct anomaly detection methodologies.

Approaches for Anomaly Detection

The first method, referred to as Prompter, involves feeding the prepared data to the model and instructing it to find anomalous values. “We iterated numerous times to determine the optimal prompts for specific time series. It isn’t straightforward to comprehend how these LLMs process the data,” Alnegheimish adds.
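A Prompter-style step can be sketched as two small helpers: one that wraps the encoded series in an instruction, and one that reads index numbers back out of the model's reply. The prompt wording, the helper names, and the reply format are all assumptions made for illustration; the article does not give the prompts the researchers settled on, and the call to the LLM itself is left out.

```python
import re


def build_prompt(series_text):
    """Wrap an encoded series in a (hypothetical) anomaly-finding instruction."""
    return (
        "You are given a comma-separated sequence of values from a time series.\n"
        "List the zero-based indices of any anomalous values, separated by commas. "
        "If there are none, reply with 'none'.\n"
        f"Sequence: {series_text}\n"
        "Indices:"
    )


def parse_indices(reply, series_length):
    """Extract valid, de-duplicated indices from a free-text model reply."""
    found = {int(m) for m in re.findall(r"\d+", reply)}
    # Discard indices that fall outside the series, which a model may invent.
    return sorted(i for i in found if i < series_length)


prompt = build_prompt("0,50,75,9000,60")
print(parse_indices("3, 3, 42", series_length=5))  # [3]
```

Filtering out-of-range indices is a cheap guard, but it does nothing about in-range false positives, which is the weakness the article attributes to this approach.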

The second approach, dubbed Detector, utilizes the LLM as a forecaster to predict subsequent values in a time series. The model’s predicted value is compared to the actual value, and a significant discrepancy indicates a likely anomaly.
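The forecast-and-compare idea can be sketched without an LLM by plugging in any function that predicts the next value from recent history. In the sketch below, the rolling median is a stand-in for the LLM forecaster, and the threshold rule (mean error plus `k` standard deviations) is an assumption; the article says only that a large discrepancy signals a likely anomaly. All names are hypothetical.

```python
from statistics import mean, median, pstdev


def one_step_forecasts(series, forecast_fn, window):
    """Predict each value from the `window` values that precede it."""
    return [forecast_fn(series[i - window:i]) for i in range(window, len(series))]


def detect(series, forecast_fn, window=3, k=2.0):
    """Return indices in `series` whose forecast error is unusually large."""
    predicted = one_step_forecasts(series, forecast_fn, window)
    actual = series[window:]
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    if not errors:
        return []
    # Assumed rule: flag errors more than k standard deviations above the mean error.
    threshold = mean(errors) + k * pstdev(errors)
    return [i + window for i, e in enumerate(errors) if e > threshold]


readings = [10.0] * 8 + [50.0] + [10.0] * 3
# `median` stands in for the LLM forecaster in this sketch.
print(detect(readings, median, window=3, k=2.0))  # [8]
```

The first `window` points are never scored because there is not enough history to forecast them, and the choice of `k` trades missed anomalies against false alarms.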

Detector is used as one stage of an anomaly detection pipeline, while Prompter completes the task on its own. In practice, Detector outperformed Prompter, which tended to produce too many false positives.

“With the Prompter approach, we were asking the LLM to tackle a more convoluted problem than necessary,” notes Veeramachaneni.

When the researchers compared both approaches with existing techniques, Detector outperformed transformer-based AI models on seven of the 11 datasets evaluated, even though the LLM required no training or fine-tuning.

In the future, LLMs might also deliver plain-language explanations of their predictions, helping operators better understand why specific data points are flagged as anomalies.

However, state-of-the-art deep-learning models outperformed the LLMs by a wide margin, which shows that more work is needed before an LLM can be relied on as an anomaly detector.

“The pressing question is what it will take for LLMs to reach parity with these advanced models. An LLM-based anomaly detector must deliver substantial innovations to warrant this effort,” states Veeramachaneni.

The research team wants to find out whether fine-tuning can improve performance, although that would require additional time, cost, and expertise. Their LLM approaches currently take between 30 minutes and two hours to produce results, so increasing speed is another key area for future work. The team also wants to probe how LLMs carry out anomaly detection, in the hope of finding ways to boost their performance.

“For complex tasks like anomaly detection in time series, LLMs are certainly a strong contender. Perhaps they can also address other complex challenges,” Alnegheimish muses.

This research received funding from SES S.A., Iberdrola, ScottishPower Renewables, and Hyundai Motor Company.

Photo credit & article inspired by: Euronews