Have you ever pondered the capabilities of large language models (LLMs) like GPT-4? Though these models can’t actually “smell” a rain-drenched campsite, they can produce vivid descriptions of one, speaking of “an air thick with anticipation” and “earthy freshness.” That expressiveness may simply reflect their extensive training data rather than any sensory experience. But can LLMs truly grasp facts about the world, such as that a lion is larger than a house cat, despite lacking physical senses? This question taps into longstanding debates among scholars and philosophers about language comprehension and intelligence.

Exploring this question, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) report evidence that LLMs may develop their own understanding of reality as a way to improve their generative abilities. To investigate, the team designed a series of Karel puzzles, each of which called for instructions to control a robot in a virtual setting. They had an LLM produce solutions to these puzzles without ever demonstrating how the solutions were executed. Using a machine learning technique known as “probing,” they then examined what was happening inside the model as it generated new solutions.

After training on over a million unique puzzles, remarkable results emerged: the LLM seemed to develop its own interpretation of the simulation, despite never being directly exposed to it during training. This revelation challenges preconceptions regarding the types of information essential for learning linguistic meaning and opens up discussions on whether LLMs might one day achieve a richer comprehension of language.

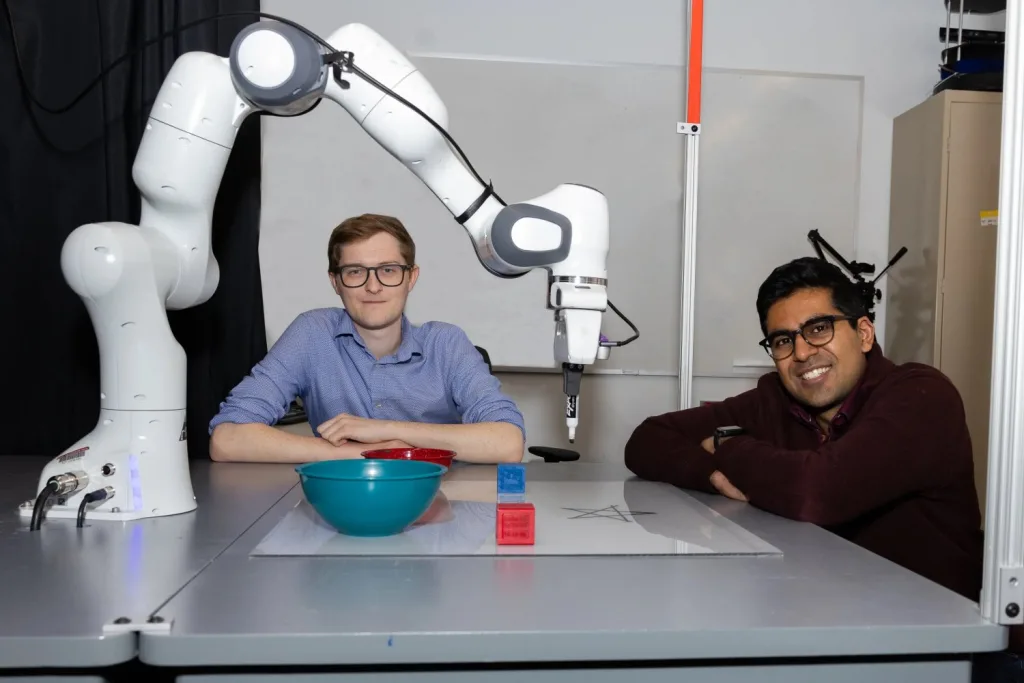

“Initially, the language model produced random, ineffective instructions. By the end of our training, it generated correct instructions 92.4% of the time,” explains Charles Jin, an MIT PhD candidate in electrical engineering and computer science (EECS) and lead author of a new research paper detailing their findings. “This high accuracy sparked excitement, suggesting our model might truly understand linguistic meanings — a realization that signals its potential to grasp language on a level deeper than mere word assembly.”

Peeking Inside an LLM’s Mind

The probing process allowed Jin to closely observe the evolution of the LLM’s capabilities. The probe examined how the model internally interpreted the instructions, revealing that the model had formed its own simulation of how the robot executed each command. As the LLM’s problem-solving improved, so did its conceptual understanding of the instructions, until it was reliably generating commands that worked.
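
To make the idea of a probe concrete, here is a minimal sketch in Python. It is a toy illustration, not the setup used in the study. The "hidden states" are synthetic noisy vectors standing in for a model's internal activations, and the probe is a simple nearest-centroid classifier that tries to read the robot's facing direction from them. All names (`fake_hidden_state`, `train_probe`, and so on) are hypothetical.

```python
import random

DIRECTIONS = ["north", "east", "south", "west"]

def fake_hidden_state(direction_index, rng, noise=0.1):
    """Assumption: a noisy one-hot vector stands in for a real LLM activation."""
    return [(1.0 if i == direction_index else 0.0) + rng.gauss(0.0, noise)
            for i in range(len(DIRECTIONS))]

def make_dataset(n, rng):
    """Build (vector, label) pairs; the label is the robot's facing direction."""
    data = []
    for _ in range(n):
        label = rng.randrange(len(DIRECTIONS))
        data.append((fake_hidden_state(label, rng), label))
    return data

def train_probe(data):
    """Nearest-centroid probe: average the vectors observed for each label."""
    dim = len(data[0][0])
    sums = {label: [0.0] * dim for label in range(len(DIRECTIONS))}
    counts = {label: 0 for label in range(len(DIRECTIONS))}
    for vec, label in data:
        counts[label] += 1
        for i, x in enumerate(vec):
            sums[label][i] += x
    return {label: [s / counts[label] for s in sums[label]]
            for label in sums if counts[label] > 0}

def probe_predict(centroids, vec):
    """Predict the label whose centroid is closest to the given vector."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(vec, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def probe_accuracy(centroids, data):
    """Fraction of examples for which the probe recovers the true label."""
    correct = sum(probe_predict(centroids, vec) == label for vec, label in data)
    return correct / len(data)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
train, test = make_dataset(400, rng), make_dataset(100, rng)
probe = train_probe(train)
accuracy = probe_accuracy(probe, test)
print(f"probe accuracy: {accuracy:.2f}")
```

If a probe this simple can recover the robot's state from the activations, that state is plausibly encoded in the model itself. In this toy the signal is planted by construction, so the high accuracy only illustrates the mechanics of probing.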

Jin draws parallels between the LLM’s language learning journey and a child’s gradual mastery of speech. Initially, the model resembles a baby babbling nonsensical phrases. Gradually, it grasps syntax, which lets it produce plausible-sounding instructions that may not function correctly. Eventually, it learns to form accurate instructions, just as a child learns to articulate coherent sentences.

Decoding Understanding: A “Bizarro World” Experiment

While the probe was intended only to observe the LLM’s thought processes, an intriguing question arose: could the probe itself be doing some of the interpreting? The researchers needed to separate the probe’s contribution from the model’s, to ensure that the apparent understanding belonged to the LLM and was not merely a product of the probe’s translations.

To this end, they introduced a new probe set in a “Bizarro World,” where commands like “up” were redefined to mean “down.” Jin explains, “If the probe can translate these inverted instructions with ease, it suggests it was simply extracting movements without true understanding. Conversely, if it struggles, we’d infer that the LLM has an independent grasp of the instruction meanings.”

The results confirmed the latter; the probe encountered difficulties translating the altered meanings, revealing that the original semantics were indeed embedded within the language model, underscoring the model’s autonomy in comprehending instructions.
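
The inverted semantics can be sketched with a toy interpreter. This is a simplification for illustration, assuming a robot that moves on a grid in response to four direction commands. The study's actual Karel language and probe are not reproduced here, and the names `NORMAL`, `BIZARRO`, and `run` are hypothetical.

```python
# Ordinary meaning of each command as an (dx, dy) step on a grid.
NORMAL = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}

# Assumed "Bizarro World" semantics: every command moves the opposite way.
BIZARRO = {cmd: (-dx, -dy) for cmd, (dx, dy) in NORMAL.items()}

def run(program, semantics, start=(0, 0)):
    """Execute the program and return the position reached after each command."""
    x, y = start
    trace = []
    for cmd in program:
        dx, dy = semantics[cmd]
        x, y = x + dx, y + dy
        trace.append((x, y))
    return trace

program = ["up", "up", "right", "down"]
normal_trace = run(program, NORMAL)
bizarro_trace = run(program, BIZARRO)
print("normal: ", normal_trace)
print("bizarro:", bizarro_trace)
```

The same program text yields two different sequences of robot states. In the experiment described above, a new probe is asked to recover the Bizarro-style states from the same model. If that probe does much worse than the original one, the original semantics are more likely encoded in the model rather than supplied by the probe.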

“Our findings address a core question in artificial intelligence: Are the impressive abilities of large language models merely a product of statistical correlations, or do they foster a meaningful understanding of the concepts they handle? This research indicates that LLMs can develop an internal framework of the simulated environments presented to them,” asserts Martin Rinard, an MIT professor in EECS, CSAIL member, and senior author of the paper.

Despite the promising implications of the research, Jin acknowledges some limitations: the study used a very simple programming language and a relatively small model. Looking ahead, the team plans to expand its experiments into broader settings. Although this research does not present a method for accelerating LLMs’ understanding of meaning, it lays the groundwork for future improvements in training methodologies.

“An intriguing question remains: Is the LLM employing its internal model of reality to reason through tasks like robot navigation? Our results suggest it might be, but the current study was not equipped to definitively answer that,” Rinard adds.

“In contemporary discussions about AI, the line between true understanding and mere statistical manipulation often gets blurred. Questions surrounding the essence of comprehension in LLMs are critical as they directly influence our perceptions of AI’s capabilities. This paper elegantly navigates the complexities inherent in this exploration, examining how computer code shares traits with natural language in terms of both syntax and semantics, while allowing for direct observation and experimentation. The findings inspire optimism that perhaps LLMs can develop deeper insights into the meanings behind language,” remarks Ellie Pavlick, an assistant professor of computer science and linguistics at Brown University, who was not associated with this research.

Jin and Rinard’s work received support from grants provided by the U.S. Defense Advanced Research Projects Agency (DARPA).

Photo credit & article inspired by: Massachusetts Institute of Technology