Have you ever faced a question where you only had a partial answer? In situations like that, calling a knowledgeable friend can help you provide a more accurate response. This collaborative effort is similarly beneficial for large language models (LLMs), as it enhances their response accuracy. However, teaching LLMs to identify the right moment to seek help from other models has proven challenging. To address this, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced a natural approach.

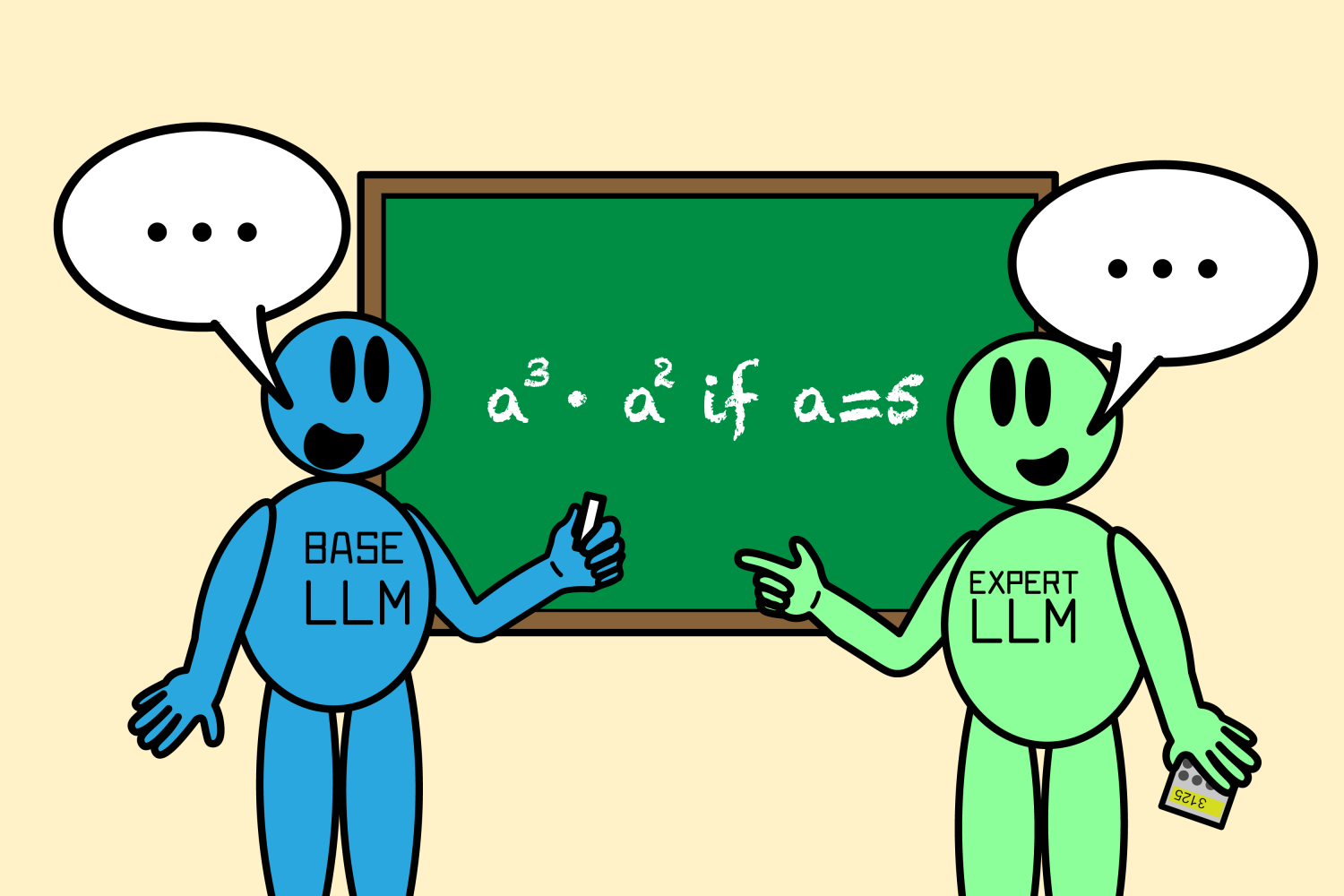

Their innovative algorithm, termed “Co-LLM,” pairs a versatile base LLM with a specialized model, facilitating seamless collaboration. As the general-purpose model constructs a response, Co-LLM analyzes the words (tokens) to determine when to draw a more precise answer from the expert model. This collaborative process leads to improved accuracy, particularly in complex domains like medical inquiries and mathematical problems. Importantly, since the expert model isn’t required for every step, the overall response generation becomes more efficient.

To ascertain when the base model might benefit from the expert’s insights, Co-LLM employs machine learning to develop a “switch variable.” This tool indicates the quality of each word in the responses generated by both models, acting like a project manager that identifies when expert intervention is necessary. For instance, if you asked Co-LLM to list examples of extinct bear species, the two models would jointly create answers. The general-purpose LLM would generate an initial reply while the switch variable identifies opportunities to incorporate better information from the expert model, like specifying the extinction year of each species.

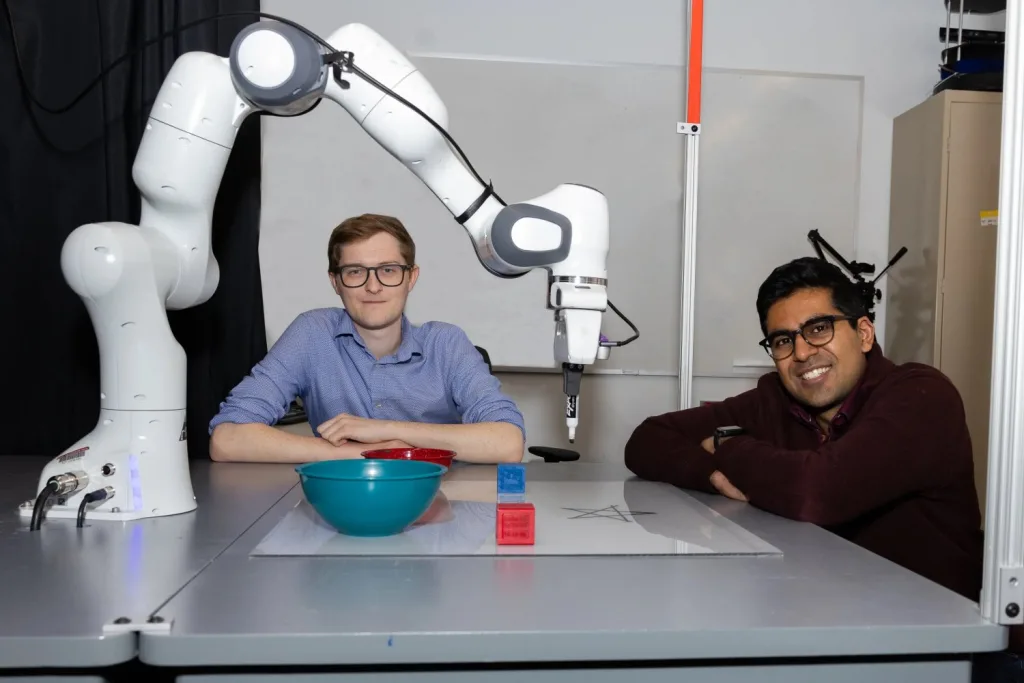

“With Co-LLM, we’re effectively training the general-purpose LLM to ‘call’ upon the expert model as needed,” explains Shannon Shen, an MIT PhD student and CSAIL associate, who is the lead author of a recently published paper on this method. “We utilize domain-specific data to enhance the base model’s understanding of the expert’s strengths in areas such as biomedical challenges and math problem-solving. This process autonomously pinpoints the data segments that the base model struggles with, guiding it to consult the expert model, pre-trained in a related domain. The general-purpose model lays the groundwork for the generation, and when it engages the specialized LLM, it prompts the expert to provide the necessary information. Our findings suggest that these models organically learn to collaborate, much like humans instinctively know when to seek out an expert.”

The Blend of Flexibility and Accuracy

Imagine querying a general-purpose LLM to list the components of a specific prescription medication. It may yield an inaccurate response, revealing the need for a specialized model’s expertise.

To illustrate Co-LLM’s flexibility, the researchers utilized datasets such as the BioASQ medical corpus to integrate a base LLM with expert models across various fields, including the Meditron model, which is designed for medical data. This arrangement allowed the algorithm to respond to questions typically addressed by biomedical professionals, such as identifying mechanisms of specific diseases.

For example, if you asked a basic LLM to identify the ingredients of a particular prescription drug, it might provide an incorrect answer. However, by leveraging the insights from a specialized biomedical model, Co-LLM can offer a much more accurate response and also inform users about areas where they should verify the information.

Another case highlighting Co-LLM’s capability involved a math problem like “a3 · a2 if a=5.” The general-purpose model initially calculated the answer as 125. Thanks to Co-LLM’s collaboration with a robust math LLM called Llemma, they jointly arrived at the correct solution of 3,125.

Co-LLM has been shown to generate more accurate responses compared to both fine-tuned simple LLMs and specialized models working in isolation. Unlike other effective LLM collaboration strategies, such as “Proxy Tuning,” which require all components to share similar training backgrounds, Co-LLM facilitates an interaction between differently trained models. This unique mechanism activates the expert model only when necessary, optimizing response generation efficiency.

Knowing When to Consult the Expert

The MIT team’s algorithm emphasizes that mimicking human collaborative behavior can enhance accuracy in multi-model engagements. To further bolster factual accuracy, the researchers are considering implementing a robust deferral strategy to backtrack in instances where the expert model offers incorrect information. This enhancement would enable Co-LLM to correct itself, maintaining the quality of its responses.

Moreover, the team aims to facilitate updates for the expert model through training the base model in response to new information, ensuring responses remain timely. Such capabilities could allow Co-LLM to effectively manage enterprise documents, leveraging the latest information to keep them current. Additionally, Co-LLM could assist in training smaller, secure models alongside a powerful LLM to improve sensitive documents that need to stay within specific servers.

“Co-LLM introduces a compelling approach to optimizing model selection for enhanced efficiency and performance,” notes Colin Raffel, an associate professor at the University of Toronto and associate research director at the Vector Institute, who was not involved in the research. “By making routing decisions at the token level, Co-LLM offers a detailed method of directing complex generation tasks to a more proficient model. This innovative combination of model and token-level routing provides levels of flexibility that few other methods can match, advancing the development of ecosystems of specialized models that outperform traditional, larger AI systems.”

Shen co-authored the paper with four other CSAIL affiliates: Hunter Lang, Bailin Wang, Yoon Kim, and David Sontag. Their research received support from various institutions, including the National Science Foundation and the MIT-IBM Watson AI Lab, and was showcased at the Annual Meeting of the Association for Computational Linguistics.

Photo credit & article inspired by: Massachusetts Institute of Technology