In 1994, Florida jewelry designer Diana Duyser found what she believed to be the image of the Virgin Mary in a grilled cheese sandwich. She preserved the sandwich and later sold it at auction for $28,000. But how well do we actually understand pareidolia, the phenomenon of seeing faces and patterns in random objects?

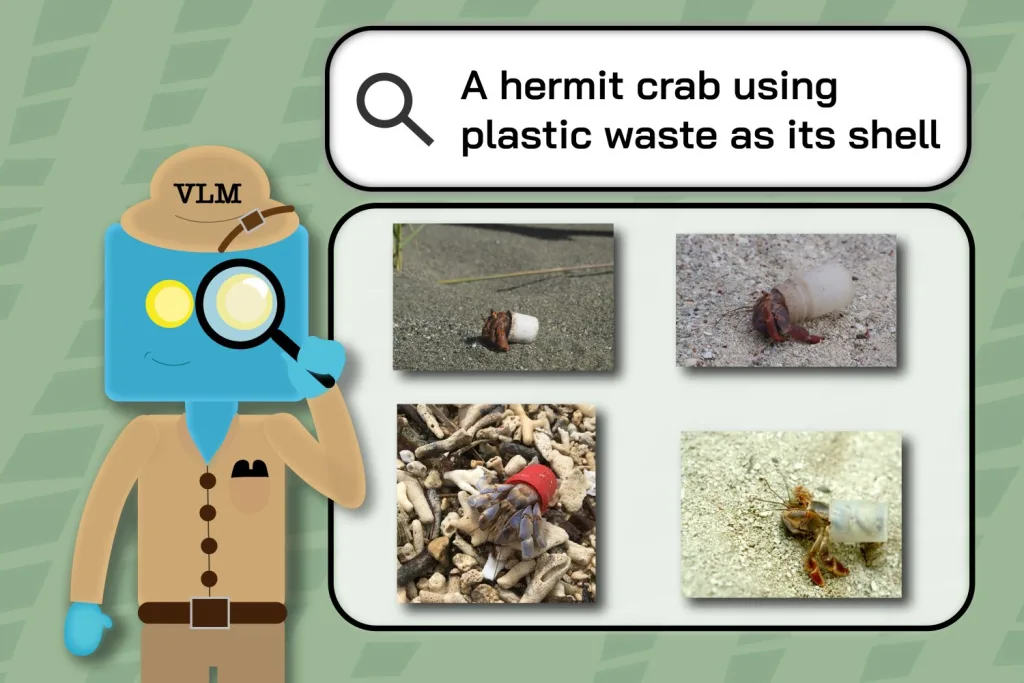

A new study from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) takes up this question with a dataset of 5,000 human-labeled pareidolic images, far larger than any previous collection. Using it, the team uncovered several unexpected differences between human and algorithmic perception, along with evidence that our tendency to see faces in everyday objects may be rooted in ancient survival instincts.

“Face pareidolia has long intrigued psychologists, yet it remains largely unexplored in computer vision,” explains Mark Hamilton, an MIT PhD student and the study’s lead researcher. “Our goal was to create a resource that sheds light on how both humans and AI interpret these illusory faces.”

What did these images reveal? For one, AI models do not recognize pareidolic faces the way humans do. Surprisingly, the researchers found that training algorithms to recognize animal faces made them significantly better at detecting pareidolic faces. This hints at a possible evolutionary link between our ability to spot animal faces, which is crucial for survival, and our tendency to see faces in inanimate objects. Hamilton notes, “Such a result suggests that pareidolia may stem from primal instincts, like quickly spotting a lurking tiger or working out which way a deer is looking during a hunt, rather than from social behavior.”

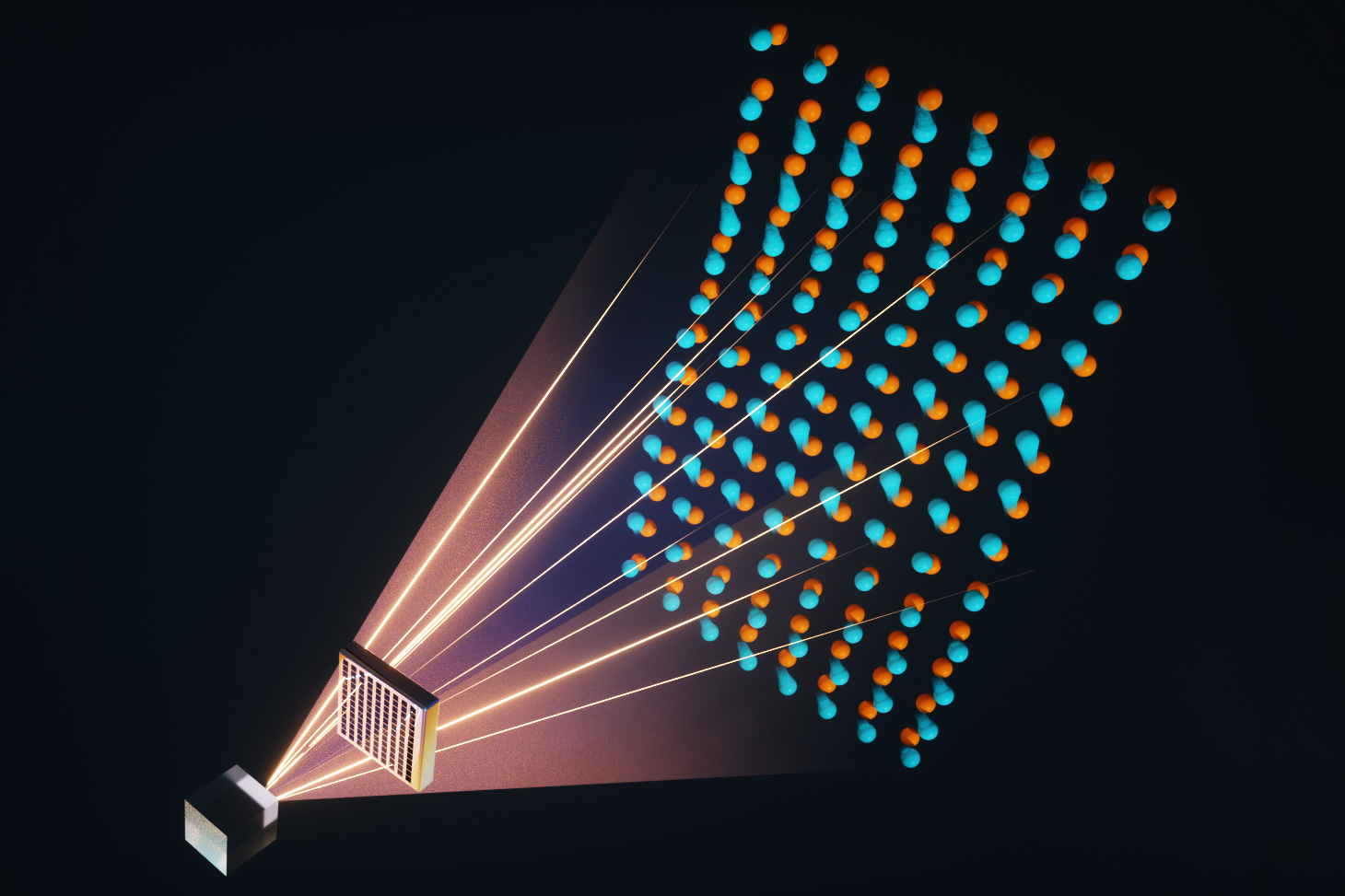

Another notable finding is what the researchers call the “Goldilocks Zone of Pareidolia,” a specific range of visual complexity in which both humans and machines are most likely to perceive faces in non-human objects. “There exists an ideal complexity level where both humans and algorithms are most likely to detect faces. If the image is too simple, there’s insufficient detail; if it’s overly complex, it becomes noise,” says William T. Freeman, MIT professor and principal investigator of the project.

To better understand this phenomenon, the team developed an equation that models how humans and algorithms detect illusory faces. Analyzing it revealed a clear “pareidolic peak”: the likelihood of seeing a face is highest for images with just the right amount of complexity. The team then validated this predicted “Goldilocks zone” in tests with both human subjects and AI face detection systems.
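The article does not reproduce the team's equation, so what follows is only a toy illustration of why a peak appears, not the authors' model. Assume the chance of seeing a face is the product of two competing factors of an abstract complexity `c`: the chance that an image contains enough structure to suggest eyes and a mouth, which rises as `1 - exp(-a*c)`, and the chance that this structure is not drowned out by clutter, which falls as `exp(-b*c)`. The rates `a` and `b` and the function names are made-up, hypothetical parameters. Setting the derivative to zero gives a single interior maximum at `c* = ln(1 + a/b) / a`, a "Goldilocks zone" in miniature:

```python
import math


def pareidolia_likelihood(c: float, a: float = 1.0, b: float = 0.25) -> float:
    """Toy inverted-U curve (hypothetical, not the study's equation).

    (1 - exp(-a*c)): chance of enough structure to read as a face; rises with c.
    exp(-b*c):       chance that structure isn't lost in clutter; falls with c.
    """
    if c < 0:
        raise ValueError("complexity must be non-negative")
    return (1.0 - math.exp(-a * c)) * math.exp(-b * c)


def pareidolic_peak(a: float = 1.0, b: float = 0.25) -> float:
    """Closed-form maximiser of the toy curve: c* = ln(1 + a/b) / a."""
    return math.log(1.0 + a / b) / a


if __name__ == "__main__":
    c_star = pareidolic_peak()
    # Too simple, near the peak, and too complex: likelihood rises, then falls.
    for c in (0.0, 0.5, c_star, 5.0, 20.0):
        print(f"c = {c:6.3f}  p = {pareidolia_likelihood(c):.3f}")
```

Changing `a` and `b` moves the peak, but any model of this form, with a rising "enough detail" term multiplied by a falling "too much noise" term, yields the same inverted-U shape Freeman describes.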

The new dataset, named “Faces in Things,” dwarfs previous studies, which typically used only 20 to 30 visual stimuli. Its size let the researchers examine how state-of-the-art face detection algorithms behave after fine-tuning them to detect pareidolic faces. The fine-tuned models not only learned to find these faces but also served as a silicon stand-in for human cognition, making it possible to ask questions about the origins of pareidolic face detection that cannot be addressed directly in human brains.

To assemble the dataset, the team sifted through roughly 20,000 candidate images from the LAION-5B dataset, which human annotators then labeled. Annotators drew bounding boxes around perceived faces and answered questions about each one, including its perceived emotion and age and whether the face appeared accidentally or intentionally. “Curating and annotating thousands of images was a monumental endeavor,” Hamilton reflects, adding, “I owe much of this success to my mom, who dedicated countless hours to labeling images.”
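To make the annotation step concrete, here is a minimal sketch of what one labeled face might look like as a record, together with the intersection-over-union (IoU) overlap that is commonly used to decide whether a detector's box has found a human-labeled face. The schema, the field names (`image_id`, `emotion`, `perceived_age`, `origin`), the `is_match` helper, and the 0.5 threshold (a widespread convention from benchmarks such as PASCAL VOC) are all assumptions for illustration; the released dataset's actual format and the study's evaluation criteria may differ.

```python
from dataclasses import dataclass
from typing import Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]

ORIGINS = {"accidental", "intentional"}


@dataclass(frozen=True)
class FaceAnnotation:
    """One illusory face drawn by an annotator (hypothetical schema)."""
    image_id: str
    box: Box
    emotion: str          # perceived emotion, free text, e.g. "surprised"
    perceived_age: str    # perceived age group, e.g. "adult"
    origin: str           # "accidental" or "intentional"

    def __post_init__(self) -> None:
        x0, y0, x1, y1 = self.box
        if not (x0 < x1 and y0 < y1):
            raise ValueError(f"degenerate box: {self.box}")
        if self.origin not in ORIGINS:
            raise ValueError(f"origin must be one of {sorted(ORIGINS)}")


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes, in [0, 1]."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def is_match(label: FaceAnnotation, detected: Box, threshold: float = 0.5) -> bool:
    """Count a detector box as finding the labeled face if IoU >= threshold."""
    return iou(label.box, detected) >= threshold


if __name__ == "__main__":
    face = FaceAnnotation("socket_001", (10, 10, 50, 50), "surprised", "adult", "accidental")
    print(iou(face.box, (20, 10, 60, 50)))   # 0.6: the shifted box still covers most of the face
    print(is_match(face, (20, 10, 60, 50)))  # True
```

Detector boxes that match no labeled face under a rule like this would count as false positives, which is the kind of error the next paragraph says the dataset could help reduce.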

The findings could help improve face detection systems, notably by reducing false positives, with implications for fields such as self-driving cars, human-computer interaction, and robotics. They could also inform product design by helping designers understand and manage pareidolia in everyday objects. “Imagine refining the design of a car or a child’s toy to make it appear more welcoming, or ensuring a medical device doesn’t unintentionally seem intimidating,” Hamilton suggests.

“It’s intriguing how humans naturally ascribe human-like traits to inanimate objects. For example, you might glance at an electrical socket and envision it singing, complete with moving lips. Yet, algorithms don’t instinctively recognize these cartoonish faces as we do,” Hamilton notes. “This disparity raises compelling questions: What underlies the differences between human perception and algorithmic interpretation? Is pareidolia advantageous or disadvantageous? Why don’t algorithms experience this phenomenon like humans?” These questions drove the investigation, because pareidolia, a classic topic in human psychology, had never been thoroughly examined in algorithms.

As the team prepares to release their dataset to the scientific community, they are already setting their sights on future research. Upcoming work may entail training vision-language models to comprehend and articulate pareidolic faces, potentially creating AI systems that engage with visual stimuli in a way that more closely mimics human understanding.

“This study is a captivating read that provokes thought. Hamilton and colleagues present a tantalizing question: Why do we see faces in things?” states Pietro Perona, the Allen E. Puckett Professor of Electrical Engineering at Caltech, who was not involved in the research. “As they observe, learning from examples—like animal faces—doesn’t entirely clarify the phenomenon. I believe that pondering this question will yield vital insights into how our visual system transcends its real-life training.”

Hamilton and Freeman’s co-authors include Simon Stent, staff research scientist at the Toyota Research Institute; Ruth Rosenholtz, principal research scientist in the Department of Brain and Cognitive Sciences, NVIDIA research scientist, and former CSAIL member; along with CSAIL affiliates postdoc Vasha DuTell, Anne Harrington MEng ’23, and Research Scientist Jennifer Corbett. Their work was partly funded by the National Science Foundation and the CSAIL MEnTorEd Opportunities in Research (METEOR) Fellowship, alongside sponsorship from the United States Air Force Research Laboratory and the United States Air Force Artificial Intelligence Accelerator. The MIT SuperCloud and Lincoln Laboratory Supercomputing Center provided critical high-performance computing resources for the research team.

The work is set to be presented at the European Conference on Computer Vision this week.

Photo credit & article inspired by: Massachusetts Institute of Technology