When someone advises you to “know your limits,” they’re often referring to moderation in activities like exercise. However, for robots, understanding their limits means grasping the constraints of their tasks within a given environment, which is essential for executing chores correctly and safely.

For example, imagine a robot asked to clean your kitchen when it knows nothing about its physical surroundings. How can it devise a practical multi-step plan to leave the room spotless? Large language models (LLMs) can get it close, but a robot that relies on them alone is likely to overlook critical physical constraints, such as how far it can reach or which obstacles it must avoid. Ignore those constraints, and you might end up with pasta stains lingering on your floorboards.

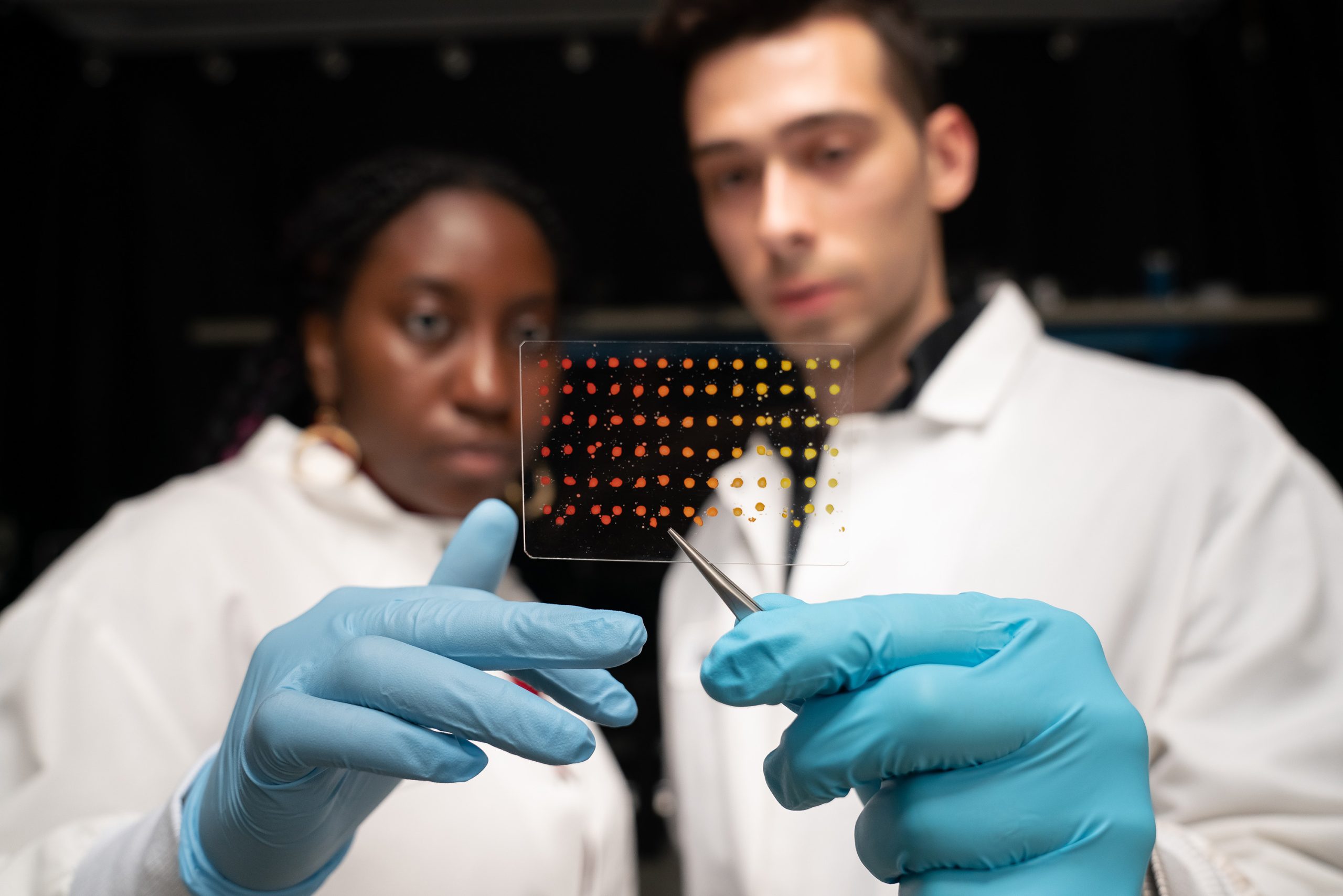

To address this challenge, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) used vision models to help robots perceive their surroundings and model their constraints. In their approach, an LLM drafts a plan, which is then checked in a simulator to confirm that it is safe and feasible. If the sequence of actions is infeasible, the LLM generates a new plan, and the cycle repeats until it produces one the robot can execute.
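The propose-and-check cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: `plan_with_feedback`, `stub_propose`, and `stub_simulate` are hypothetical names, the "LLM" is a stub that returns canned plans, and passing the list of earlier failure reasons back to the proposer is an assumption made for the example.

```python
from typing import Callable, List, Optional, Tuple

Plan = List[str]


def plan_with_feedback(
    propose: Callable[[str, List[str]], Plan],
    simulate: Callable[[Plan], Tuple[bool, str]],
    task: str,
    max_attempts: int = 5,
) -> Optional[Plan]:
    """Ask for plans until one passes the simulated check or attempts run out."""
    failures: List[str] = []
    for _ in range(max_attempts):
        plan = propose(task, failures)   # stand-in for an LLM call
        ok, reason = simulate(plan)      # stand-in for a simulator rollout
        if ok:
            return plan
        failures.append(reason)          # assumed: failures inform the next proposal
    return None


def stub_propose(task: str, failures: List[str]) -> Plan:
    # Hypothetical proposer: returns a different canned plan after each failure.
    candidates = [
        ["move_to sink", "wipe far_counter"],
        ["move_to counter", "wipe far_counter"],
    ]
    return candidates[min(len(failures), len(candidates) - 1)]


def stub_simulate(plan: Plan) -> Tuple[bool, str]:
    # Toy feasibility rule: the far counter can only be wiped from the counter.
    if "wipe far_counter" in plan and "move_to counter" not in plan:
        return False, "far_counter is out of reach from the current position"
    return True, ""


result = plan_with_feedback(stub_propose, stub_simulate, "clean the kitchen")
print(result)
```

In this toy run the first plan is rejected because the robot never moves within reach of the counter, and the second proposal passes the check.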

This iterative approach, called “Planning for Robots via Code for Continuous Constraint Satisfaction” (PRoC3S), tests entire multi-step plans to ensure they satisfy all necessary constraints. It enables robots to handle a variety of tasks, from drawing letters to solving puzzles with blocks. In the future, PRoC3S could help robots perform more complex chores in dynamic settings, such as carrying out the series of steps needed to “make breakfast.”
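To give a feel for what satisfying continuous constraints can mean, here is a small sketch that samples a placement position until it is both within the arm's reach and clear of an obstacle. Everything in it is an assumption made for illustration (the reach limit, the obstacle, the function names, and plain rejection sampling as the search strategy); it is not taken from the PRoC3S paper.

```python
import math
import random
from typing import Optional, Tuple

Point = Tuple[float, float]

MAX_REACH = 0.8            # assumed arm reach in meters, measured from the base at (0, 0)
OBSTACLE = (0.5, 0.0)      # assumed obstacle center
OBSTACLE_RADIUS = 0.2      # assumed obstacle radius in meters


def within_reach(p: Point) -> bool:
    # Constraint 1: the target must lie inside the arm's reachable disc.
    return math.hypot(p[0], p[1]) <= MAX_REACH


def clear_of_obstacle(p: Point) -> bool:
    # Constraint 2: the target must lie outside the obstacle's footprint.
    return math.hypot(p[0] - OBSTACLE[0], p[1] - OBSTACLE[1]) >= OBSTACLE_RADIUS


def sample_placement(rng: random.Random, max_samples: int = 1000) -> Optional[Point]:
    """Rejection-sample a point in [-1, 1] x [-1, 1] that satisfies both constraints."""
    for _ in range(max_samples):
        p = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        if within_reach(p) and clear_of_obstacle(p):
            return p
    return None  # no feasible placement found within the sample budget


placement = sample_placement(random.Random(0))
print(placement)
```

The discrete part of a plan (which action to take) stays fixed here; only the continuous parameters (where to place the object) are searched until every constraint holds.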

“LLMs in conjunction with traditional robotics frameworks like task and motion planners enable us to tackle open-ended problem-solving,” states PhD student Nishanth Kumar SM ’24, co-author of a recent paper on PRoC3S. “We simulate the environment around the robot in real-time, testing numerous action plans. Vision models help construct a realistic digital world that aids the robot in evaluating feasible actions for each phase of a long-term plan.”

This innovative research was presented at the Conference on Robot Learning (CoRL) held in Munich, Germany, earlier this month.

The CSAIL team’s strategy uses an LLM pre-trained on a wide range of internet text. Before asking PRoC3S to perform a task, the researchers gave the LLM a related sample task (such as drawing a square) that resembles the target task (drawing a star). The sample includes a description of the activity, a long-term action plan, and relevant details about the robot’s environment.
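One way to picture this setup is as a prompt that pairs a worked example with the new request. The sketch below is hypothetical: the `ExampleTask` fields, the `build_prompt` helper, and the prompt wording are assumptions for illustration, not the format the researchers used.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExampleTask:
    description: str   # what the sample activity is
    plan: List[str]    # the long-term action plan for the sample
    environment: str   # relevant details about the robot's surroundings


def build_prompt(example: ExampleTask, target_description: str, target_environment: str) -> str:
    """Lay out the worked example first, then the new task with an empty plan to fill in."""
    lines = [
        f"Example task: {example.description}",
        f"Environment: {example.environment}",
        "Plan:",
        *[f"{i}. {step}" for i, step in enumerate(example.plan, start=1)],
        "",
        f"New task: {target_description}",
        f"Environment: {target_environment}",
        "Plan:",
    ]
    return "\n".join(lines)


square = ExampleTask(
    description="draw a square",
    plan=["move pen to a corner", "draw four equal sides"],
    environment="whiteboard, 1 m x 1 m",
)
prompt = build_prompt(square, "draw a star", "whiteboard, 1 m x 1 m")
print(prompt)
```

The prompt ends on an open "Plan:" line so that the model's continuation is the plan for the new task.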

So how well do these plans work in practice? In simulation, PRoC3S succeeded in drawing stars and letters approximately 80% of the time. It also stacked digital blocks into pyramids and lines and accurately placed specific items, such as fruits, onto plates. Across these digital trials, the CSAIL method outperformed comparable techniques such as “LLM3” and “Code as Policies.”

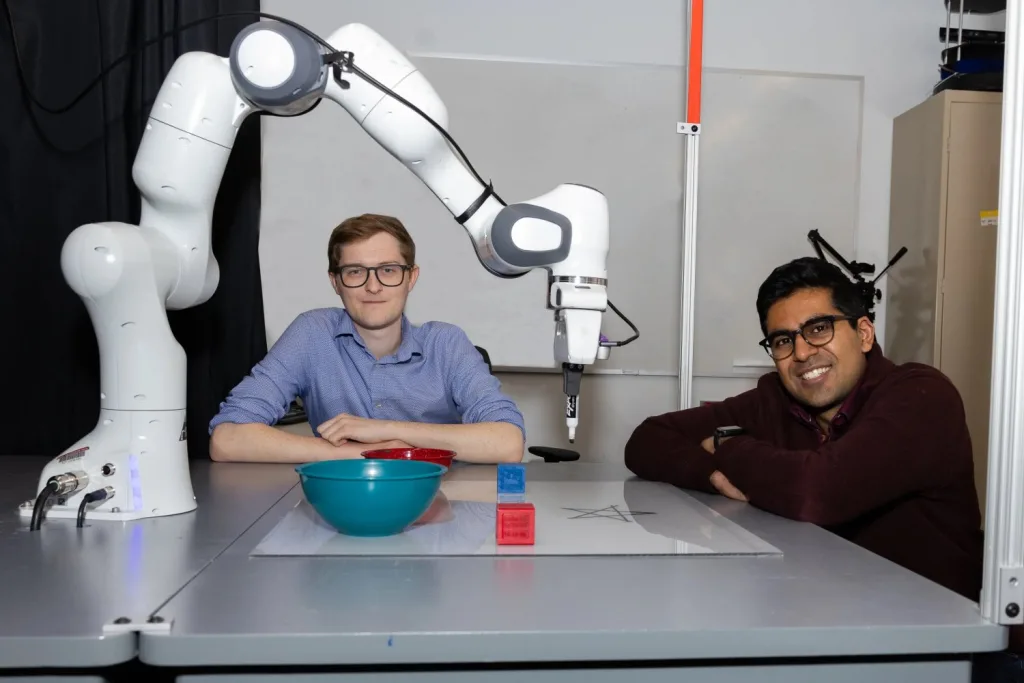

The CSAIL team didn’t stop at simulations. They also developed and executed plans on a real robotic arm, which arranged blocks in straight lines, placed colored blocks into matching bowls, and repositioned items on a table.

Kumar and co-author Aidan Curtis SM ’23, also a PhD student at CSAIL, say their findings show how an LLM can be used to produce safer, more reliable plans that robots can carry out in the real world. They foresee home robots that can take a general request (like “bring me some chips”) and work out the specific steps needed to fulfill it. By testing plans in a digital replica of its surroundings, a robot running PRoC3S could find a course of action that works and, more importantly, deliver that snack you’ve been craving.

Looking ahead, the researchers aim to improve results by using a more sophisticated physics simulator and to extend PRoC3S to more complex, longer-term tasks through more scalable data-search techniques. They also plan to apply it to mobile robots, such as quadrupeds, on tasks that involve navigating their environments.

“Using foundation models like ChatGPT for controlling robots can lead to unsafe or erroneous actions caused by hallucinations,” warns Eric Rosen of The AI Institute, an external researcher. “PRoC3S addresses this challenge by combining foundation model guidance with AI techniques that explicitly reason about the environment, ensuring action safety and correctness. This blend of planning and data-driven methodologies could be crucial for developing robots capable of understanding and reliably executing a wider range of tasks than before.”

Kumar and Curtis’s co-authors are also CSAIL affiliates: MIT undergraduate researcher Jing Cao and professors Leslie Pack Kaelbling and Tomás Lozano-Pérez of the MIT Department of Electrical Engineering and Computer Science. Their research was supported by several organizations, including the National Science Foundation, the Air Force Office of Scientific Research, the Office of Naval Research, and the MIT Quest for Intelligence.

Photo credit & article inspired by: Massachusetts Institute of Technology