Ever wondered if you should take your umbrella before heading out? Checking the weather forecast only helps if it’s reliable.

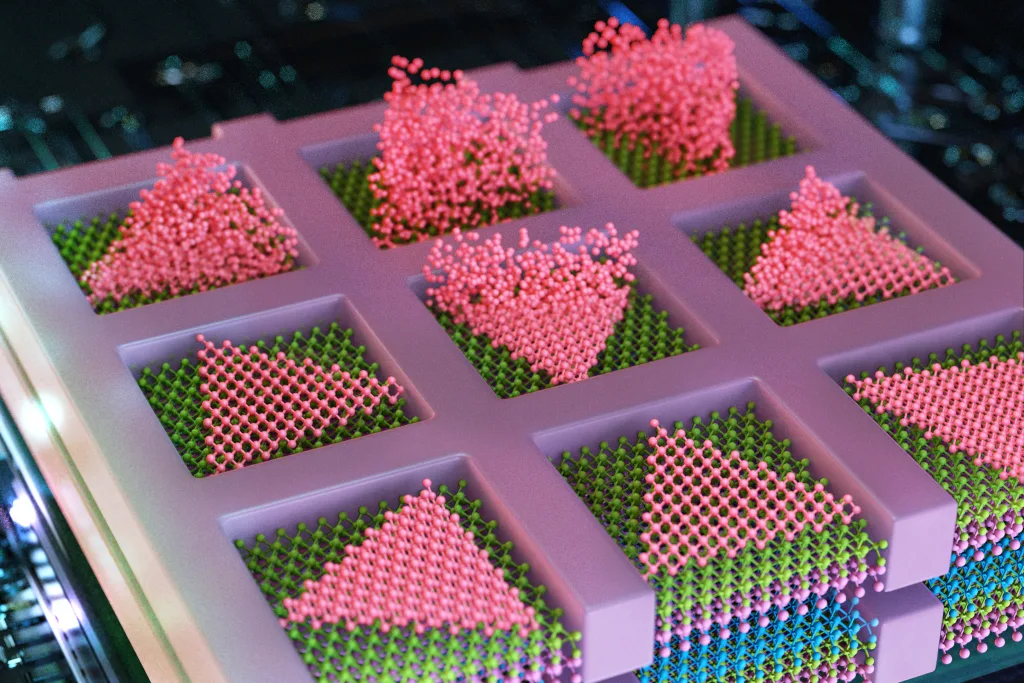

Spatial prediction problems, such as weather forecasting or air quality assessment, involve predicting the value of a variable at a new location from known values at other locations. Scientists have traditionally relied on established validation methods to gauge how accurate these predictions are.

However, recent findings from MIT researchers show that these common validation techniques can fail badly on spatial prediction tasks. As a result, someone might trust an inaccurate forecast or believe a new prediction method is effective when it is not.

The team devised a technique for assessing prediction-validation methods and used it to show that two widely used classical methods can be substantively wrong on spatial tasks. They then identified why these methods fail and introduced a new validation method tailored to the characteristics of spatial data.

In tests using both real and synthetic data, the new validation approach outperformed the two traditional methods. The researchers examined real-world spatial challenges, like estimating wind speed at Chicago O’Hare Airport and projecting air temperatures across five major U.S. cities.

This improved validation method holds promise for various applications, from assisting climate scientists in estimating sea surface temperatures to helping epidemiologists assess the effects of air pollution on public health.

“We hope this leads to more trustworthy evaluations as new predictive models are developed, facilitating a deeper understanding of their performance,” remarks Tamara Broderick, an associate professor at MIT’s Department of Electrical Engineering and Computer Science (EECS), a member of the Laboratory for Information and Decision Systems, and affiliated with the Institute for Data, Systems, and Society as well as the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Co-authors of the study, which will be presented at the International Conference on Artificial Intelligence and Statistics, include lead author and MIT postdoc David R. Burt, along with EECS graduate student Yunyi Shen.

Assessing Validation Techniques

Broderick and her team have collaborated with oceanographers and atmospheric scientists to develop machine-learning prediction models for problems with a strong spatial component.

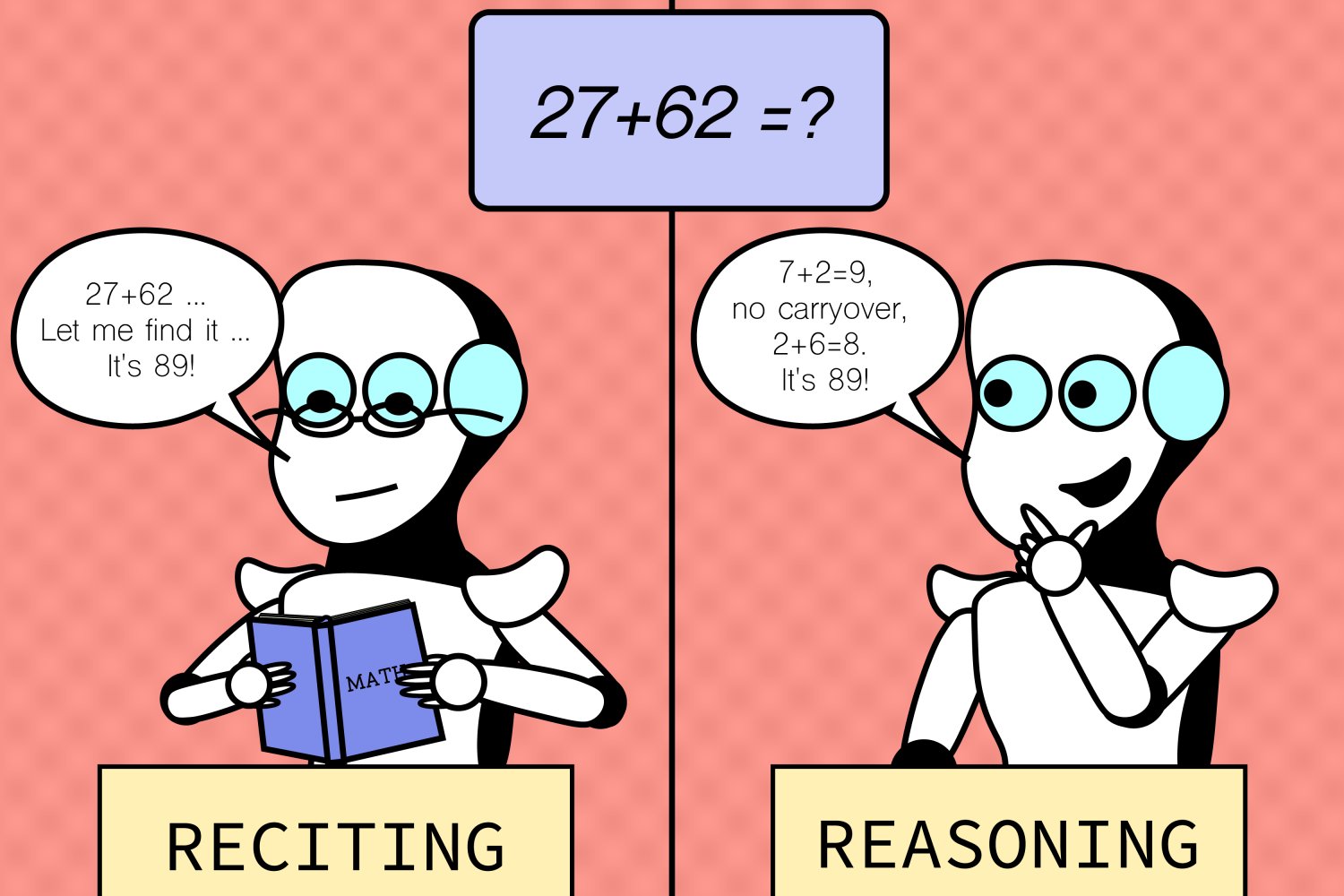

Through this collaboration, they observed that the standard validation methods often struggle in spatially dependent scenarios. Typically, these methods reserve a portion of the training data—termed validation data—to evaluate prediction accuracy.
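As a rough illustration of the classical approach described above, here is a minimal hold-out validation sketch in Python. The function names (`holdout_mse`, `fit_mean`), the toy data, and the constant-mean predictor are all hypothetical choices made for this example; they are not taken from the study.

```python
import random


def holdout_mse(points, fit, validation_fraction=0.2, seed=0):
    """Classical hold-out validation: fit on one part, score on the rest.

    `points` is a list of (location, value) pairs and `fit` is any function
    that takes training pairs and returns a predictor `location -> value`.
    """
    rng = random.Random(seed)
    shuffled = points[:]
    rng.shuffle(shuffled)

    # Reserve a random portion of the data as validation data.
    n_val = max(1, int(len(shuffled) * validation_fraction))
    validation, training = shuffled[:n_val], shuffled[n_val:]

    predict = fit(training)
    # Mean squared error of the predictor on the held-out pairs.
    return sum((predict(x) - y) ** 2 for x, y in validation) / len(validation)


def fit_mean(training):
    """Hypothetical baseline predictor: always predict the training mean."""
    mean_y = sum(y for _, y in training) / len(training)
    return lambda x: mean_y


# Toy (location, value) pairs, invented for illustration only.
data = [(float(i), 10.0 + 0.5 * i) for i in range(20)]
print(holdout_mse(data, fit_mean))
```

The held-out error is only a trustworthy estimate of error at new locations if the held-out points resemble those locations, which is the assumption the researchers call into question.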

To understand the shortcomings, the researchers carried out a thorough analysis and found that conventional methods rest on assumptions that are unsuitable for spatial data. Traditional validation techniques assume that validation data and test data (the data one actually wants to predict) are independent and identically distributed, which implies that the value of one data point does not depend on the others. In spatial applications, that is frequently not the case.

For example, researchers might use validation data from EPA air pollution sensors to assess predictions of pollution levels in protected areas. However, these sensors are not placed independently of one another; each sensor's location is chosen with the locations of other sensors in mind.

Moreover, the validation data may come from urban sensors while the predictions are for rural conservation zones. Because the two sets of data come from different kinds of locations, they likely have different statistical properties and are not identically distributed. Broderick explains: “Our experiments clearly demonstrated that such assumptions lead to vastly incorrect results in spatial predictions.”
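The urban-versus-rural mismatch can be made concrete with a toy one-dimensional example. Everything here is an assumption made for illustration: the linear `true_field`, the site coordinates, and the constant predictor are invented and do not come from the researchers' experiments.

```python
def true_field(x):
    """Hypothetical pollution field that rises steadily with location x."""
    return 5.0 + 2.0 * x


def mse(predict, locations):
    """Mean squared error of `predict` against the true field."""
    return sum((predict(x) - true_field(x)) ** 2 for x in locations) / len(locations)


# Training and validation sensors both sit in the "urban" stretch 0..1.
train_sites = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
validation_sites = [0.1, 0.3, 0.5, 0.7, 0.9]
# The "rural" targets lie far from every sensor.
target_sites = [4.0, 4.5, 5.0]

train_mean = sum(true_field(x) for x in train_sites) / len(train_sites)


def predict(x):
    """Constant predictor: the mean of the urban training values."""
    return train_mean


validation_error = mse(predict, validation_sites)
target_error = mse(predict, target_sites)
print(validation_error, target_error)
```

In this sketch the validation error looks small because the validation sites resemble the training sites, while the error at the distant targets is far larger. A validation score computed this way would overstate how well the predictor works where it is actually needed.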

The researchers set out to establish a more fitting assumption for these scenarios.

Focused on Spatial Contexts

Thinking specifically about the spatial setting, where data are gathered from different locations, they designed a method that assumes validation data and test data vary smoothly in space. For instance, air quality levels are unlikely to differ drastically between two neighboring houses.

“This smoothness assumption is suitable for numerous spatial phenomena, enabling us to construct a valid evaluation process specifically for spatial predictors. To date, no systematic theoretical review has explored this issue comprehensively, leading us to create a superior approach,” says Broderick.
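One simple way to see how a smoothness assumption can be exploited is to estimate the error at a target location from only the validation points nearest to it. The sketch below does this with a k-nearest-neighbor average. It is a stand-in chosen for illustration and is not the researchers' method; the function `nearest_neighbor_error`, the parameter `k`, and the data are all hypothetical.

```python
import math


def nearest_neighbor_error(predict, target, validation, k=3):
    """Estimate squared error at `target` from the k closest validation points.

    Relies on a smoothness assumption: prediction error changes gradually
    with location, so errors at nearby validation points are informative.
    `validation` is a list of ((x, y), value) pairs.
    """
    by_distance = sorted(validation, key=lambda p: math.dist(p[0], target))
    nearest = by_distance[:k]
    return sum((predict(loc) - value) ** 2 for loc, value in nearest) / len(nearest)


def predict(location):
    """Hypothetical predictor that returns the same value everywhere."""
    return 10.0


# Invented validation data: one cluster near (0, 0), another near (5, 5).
validation = [
    ((0.0, 0.0), 10.5), ((0.1, 0.0), 10.4), ((0.0, 0.1), 10.6),
    ((5.0, 5.0), 14.0), ((5.1, 5.0), 14.2), ((5.0, 5.1), 13.8),
]

near_first_cluster = nearest_neighbor_error(predict, (0.05, 0.05), validation)
near_second_cluster = nearest_neighbor_error(predict, (5.05, 5.05), validation)
print(near_first_cluster, near_second_cluster)
```

Unlike a single average over all validation points, this estimate depends on where the prediction is needed, so it can report a low error near one cluster and a high error near the other.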

To use their technique, one supplies the predictive model, the locations where predictions are needed, and the validation data. The method then automatically estimates how accurate the model's predictions will be at those locations. However, validating the effectiveness of their evaluation method was itself challenging.

“Instead of evaluating a predictive method, we were assessing an evaluation method. We had to creatively experiment with various approaches,” Broderick notes.

They first designed controlled tests on synthetic data, which has unrealistic aspects but lets them carefully control key parameters. They then moved to semi-simulated data created by modifying real data, and finally ran several experiments on real data.

Working with three types of data drawn from realistic problems, including predicting flat prices in England based on location and forecasting wind speed, allowed for a broad evaluation. In most experiments, their method was more accurate than either of the traditional methods it was compared against.

Moving forward, the researchers aim to apply these techniques to improve uncertainty quantification in spatial settings. They also plan to explore other areas where the smoothness assumption could improve predictive performance, such as time-series data.

This research was partially funded by the National Science Foundation and the Office of Naval Research.

Photo credit & article inspired by: Massachusetts Institute of Technology