When robots encounter unfamiliar objects, they often face a significant challenge: appearances can be deceiving. A robot may try to pick up what looks like a block, only to find that it is a realistic-looking piece of cake. Misleading visual cues like this can cause robots to misjudge physical properties such as weight and center of mass, so they choose a poor grasp or apply more force than needed.

To tackle this problem, researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) developed the Grasping Neural Process, a predictive physics model that lets robots infer hidden object properties in real time and use them to grasp more reliably. Traditional models need extensive computation and interaction data; this deep-learning system requires far less of both, which makes it well suited to settings such as warehouses and households.

The Grasping Neural Process is designed to infer hidden physical attributes from a history of attempted grasps and then use those inferred properties to predict which future grasps will succeed. Previous methods typically guided robotic grasps with visual data alone; this system also draws on the outcomes of the robot's earlier interactions with the object.
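The core idea of a neural process can be sketched in a few lines: each past (grasp, outcome) pair is encoded into a vector, the vectors are averaged into a single latent summary of the object, and a decoder combines that summary with a candidate grasp to predict its chance of success. The sketch below is a minimal NumPy illustration under stated assumptions, not the CSAIL implementation: the grasp encoding, the layer sizes, and the names `encode_context` and `predict_success` are hypothetical, and the weights are random rather than trained, so the numbers it produces only show how data flows through such a model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: a grasp is described by GRASP_DIM numbers (for example a
# position on the object and an approach angle); its outcome is 1.0 or 0.0.
GRASP_DIM, HIDDEN, LATENT = 3, 16, 8

# Random, untrained weights: enough to show the data flow, not to make predictions.
W_enc = rng.normal(size=(GRASP_DIM + 1, HIDDEN))
W_lat = rng.normal(size=(HIDDEN, LATENT))
W_dec = rng.normal(size=(GRASP_DIM + LATENT, HIDDEN))
w_out = rng.normal(size=HIDDEN)


def encode_context(grasps, outcomes):
    """Encode each (grasp, outcome) pair, then average into one latent vector.

    Averaging gives a fixed-size summary for any number of past grasps and
    makes it independent of the order in which they were tried.
    """
    pairs = np.concatenate([grasps, outcomes[:, None]], axis=1)  # (n, GRASP_DIM + 1)
    per_pair = np.tanh(pairs @ W_enc) @ W_lat                    # (n, LATENT)
    return per_pair.mean(axis=0)                                 # (LATENT,)


def predict_success(latent, candidates):
    """Return a success probability for each candidate grasp, given the latent."""
    z = np.broadcast_to(latent, (len(candidates), LATENT))
    h = np.tanh(np.concatenate([candidates, z], axis=1) @ W_dec)  # (m, HIDDEN)
    return 1.0 / (1.0 + np.exp(-(h @ w_out)))                     # (m,)


# Usage: summarise 10 past attempts, then rank 5 new candidate grasps.
past_grasps = rng.uniform(-1.0, 1.0, size=(10, GRASP_DIM))
past_outcomes = rng.integers(0, 2, size=10).astype(float)
latent = encode_context(past_grasps, past_outcomes)
candidates = rng.uniform(-1.0, 1.0, size=(5, GRASP_DIM))
probs = predict_success(latent, candidates)
best_grasp = candidates[np.argmax(probs)]
```

Averaging the per-grasp encodings is what lets this kind of model accept any number of prior attempts in any order; in a trained model, the latent vector would carry whatever information about hidden properties such as weight and center of mass helps predict grasp success.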

Traditional approaches to inferring physical properties typically rely on complex statistical techniques that need many known grasps and considerable computation time. The Grasping Neural Process, by contrast, performs well in unstructured settings, executing high-quality grasps with far less interaction data and finishing its computation in under a tenth of a second, compared with the seconds or even minutes that older methods can take.
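For contrast, here is a toy version of the kind of explicit statistical inference described above: a Bayesian posterior over a single hidden property, computed by brute force on a grid. It is a hypothetical stand-in, not the specific method the researchers compared against; the logistic grasp-success model, its `TOLERANCE` and `SHARPNESS` constants, and the function names are all assumptions made for illustration.

```python
import math

# Hypothetical toy model: the only hidden property is the centre-of-mass offset c,
# normalised to [-1, 1] along the object; grasps near c tend to be stable.
TOLERANCE = 0.2   # assumed distance within which grasps usually hold
SHARPNESS = 20.0  # assumed steepness of the success/failure transition


def p_stable(g, c):
    """Assumed probability that a grasp at position g is stable if the offset is c."""
    return 1.0 / (1.0 + math.exp(SHARPNESS * (abs(g - c) - TOLERANCE)))


def grid_posterior(history, n_points=201):
    """Posterior over c on a uniform grid, given [(grasp_position, was_stable), ...].

    Every grid point is re-weighted by every past grasp, so the work grows as
    n_points * len(history) for a single hidden property.
    """
    grid = [-1.0 + 2.0 * i / (n_points - 1) for i in range(n_points)]
    weights = []
    for c in grid:
        w = 1.0  # uniform prior
        for g, was_stable in history:
            p = p_stable(g, c)
            w *= (p if was_stable else 1.0 - p)
        weights.append(w)
    total = sum(weights)
    return grid, [w / total for w in weights]


history = [(0.5, True), (0.3, True), (-0.4, False), (0.9, False)]
grid, posterior = grid_posterior(history)
best_c = grid[posterior.index(max(posterior))]  # most probable hidden offset
print(f"most probable offset: {best_c:.2f}")
```

Even this one-dimensional case re-weights every grid point for every past grasp, and gridding several hidden properties jointly multiplies the number of points. That is the sort of cost a network trained to predict the result directly is meant to sidestep.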

This model is particularly useful in unpredictable environments, where items vary widely. For instance, a robot using this technology could quickly learn to handle tightly packed boxes of different foods without ever seeing what is inside. In fulfillment centers, it could likewise handle objects with different physical properties and shapes and sort them into the correct shipping boxes.

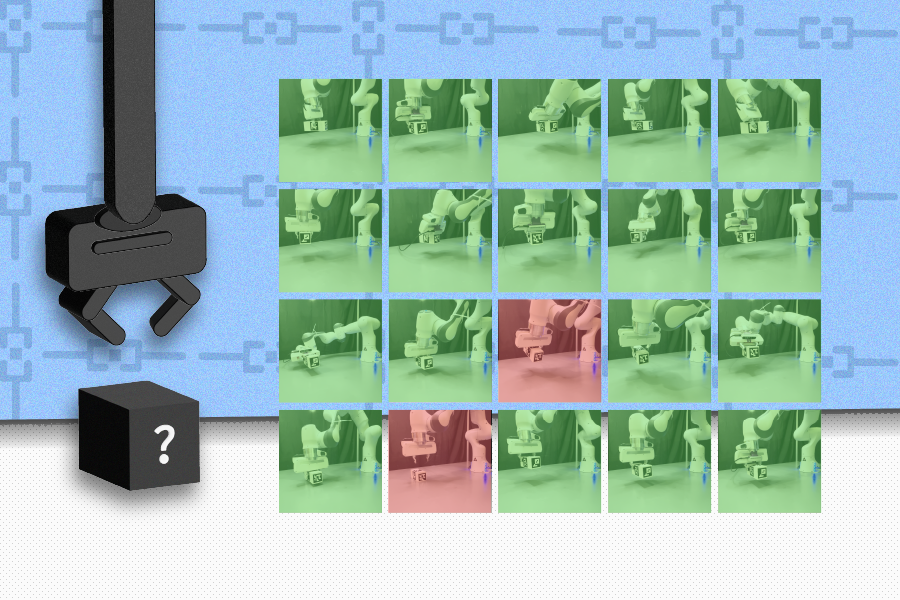

Training the Grasping Neural Process involved 1,000 unique shapes and 5,000 objects, and the model achieved stable grasps in simulation on novel 3D objects from the ShapeNet repository. The team then tested it in the real world on two weighted blocks, where it outperformed a baseline that considered only object geometry. With just 10 experimental grasps beforehand, the robotic arm successfully lifted the blocks on 18 and 19 of 20 attempts, respectively. When unprepared, it managed only eight and 15 stable grasps.
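The reported counts translate into success rates as follows. The condition labels restate the text, and `success_rate_percent` is a hypothetical helper introduced only for this calculation.

```python
ATTEMPTS = 20  # lift attempts per block, as reported

# Stable grasps on the two weighted blocks under each condition.
successes = {
    "after 10 experimental grasps": (18, 19),
    "unprepared": (8, 15),
}


def success_rate_percent(count, attempts=ATTEMPTS):
    """Convert a success count into a whole-number percentage."""
    return 100 * count // attempts


rates = {cond: [success_rate_percent(c) for c in counts]
         for cond, counts in successes.items()}

for cond, pct in rates.items():
    print(f"{cond}: {pct[0]}% and {pct[1]}%")
# after 10 experimental grasps: 90% and 95%
# unprepared: 40% and 75%
```

In other words, the rates after prior experimental grasps were 50 and 20 percentage points higher than the unprepared rates on the two blocks.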

Robotic inference of this kind proceeds in three stages: training, adaptation, and testing. During training, robots work with a fixed set of objects and learn to infer physical properties from past successful and unsuccessful grasps. The CSAIL model streamlines this step by training a neural network to predict the outputs of costly statistical algorithms, so the robot can judge which grasps are likely to work best after only a few prior interactions.

In the adaptation phase, the robot encounters unfamiliar objects. Here the Grasping Neural Process helps it try grasps and adjust its positions, refining its estimate of which grips will work best. Finally, in the testing phase, the robot executes a grasp informed by what it has learned about the object's properties.
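The adaptation and testing phases can be illustrated with a deliberately simple stand-in. In the hypothetical sketch below, the hidden property is one of five candidate center-of-mass offsets, a grasp is stable only if it lands close to the true offset, and the robot's belief is just the set of offsets consistent with its grasp history. During adaptation it probes the grasp whose outcome splits the remaining candidates most evenly; during testing it executes the grasp that is stable under the most remaining candidates. The Grasping Neural Process forms its belief with a trained network rather than by eliminating hypotheses, and every constant and function name here is an assumption.

```python
# Hypothetical toy setting (not the CSAIL model): positions are normalised
# along the object, and the hidden property is its centre-of-mass offset.
TOLERANCE = 0.2                              # assumed: max distance for a stable grasp
HYPOTHESES = [-0.6, -0.3, 0.0, 0.3, 0.6]     # candidate hidden offsets
GRASPS = [-0.6, -0.45, -0.3, -0.15, 0.0, 0.15, 0.3, 0.45, 0.6]  # candidate grasp positions


def stable(grasp, offset):
    """Deterministic toy outcome: stable iff the grasp lands near the offset."""
    return abs(grasp - offset) <= TOLERANCE


def adapt(true_offset, budget):
    """Adaptation phase: probe grasps and discard hypotheses that disagree."""
    remaining = list(HYPOTHESES)
    history = []
    for _ in range(budget):
        if len(remaining) <= 1:
            break  # the hidden offset is already pinned down

        def imbalance(g):
            # 0 means the probe's outcome splits the remaining set exactly in half.
            n_stable = sum(stable(g, h) for h in remaining)
            return abs(2 * n_stable - len(remaining))

        probe = min(GRASPS, key=imbalance)
        outcome = stable(probe, true_offset)  # "execute" the grasp on the object
        history.append((probe, outcome))
        remaining = [h for h in remaining if stable(probe, h) == outcome]
    return remaining, history


def choose_test_grasp(remaining):
    """Testing phase: pick the grasp that is stable for the most remaining hypotheses."""
    return max(GRASPS, key=lambda g: sum(stable(g, h) for h in remaining))


remaining, history = adapt(true_offset=0.3, budget=3)
print(history)  # probes tried and whether each was stable
print(choose_test_grasp(remaining))
```

With five candidates and probe outcomes that roughly halve the set, three probes are enough to pin down the offset in this toy setting; for real objects, whose properties cannot be enumerated so neatly, a learned model plays the corresponding role.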

“As an engineer, it’s crucial not to assume that a robot inherently knows all the details necessary for a successful grasp,” said lead author Michael Noseworthy, a PhD student in electrical engineering and computer science (EECS) at MIT and a CSAIL affiliate. “Without labeling from humans, robots had to rely on expensive inference methods.” Fellow lead author Seiji Shaw, also an EECS PhD student and CSAIL affiliate, emphasized the efficiency of their approach: “Our model significantly streamlines this process, enabling robots to envision the grasps most likely to yield success.”

Chad Kessens, an autonomous robotics researcher at the U.S. Army’s DEVCOM Army Research Laboratory, which sponsored this project, added, “To transition robots from controlled environments like labs to real-world applications, they must better handle the unknown and minimize failures from slight deviations in their programming. This research represents a monumental step towards realizing the full transformative potential of robotics.”

The Grasping Neural Process infers static properties effectively, and the research team now aims to extend it to adjust grasps in real time for objects whose properties change, such as a bottle whose mass distribution shifts as it fills. Ultimately, the work could help robots carry out multistep tasks efficiently, such as picking up a carrot and then chopping it.

Joining the research team is Nicholas Roy, a professor of aeronautics and astronautics at MIT and a CSAIL member, who served as a senior author on this work. The team recently presented their findings at the IEEE International Conference on Robotics and Automation.

Photo credit & article inspired by: Massachusetts Institute of Technology