Neural networks are revolutionizing the way engineers develop controllers for robots, leading to the creation of more adaptive and efficient machines. However, while these brain-like machine-learning systems are incredibly powerful, their complexity poses significant challenges. One of the biggest hurdles is ensuring that a robot operated by a neural network can safely perform its tasks.

The traditional method for verifying safety and stability relies on Lyapunov functions. If engineers can find a Lyapunov function whose value consistently decreases along the system's trajectories, they can ensure that the robot never reaches the unsafe or unstable conditions associated with higher values. Unfortunately, existing methods for verifying Lyapunov conditions often fail to scale to complex robotic systems.
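To make the idea concrete, here is a minimal Python sketch of a Lyapunov-style check. It is not taken from the study: the dynamics matrix `A`, the candidate function `lyapunov_value`, and the helper `decreases_along_trajectory` are all hypothetical choices made for illustration. Testing sampled starting points, as done here, only suggests stability and does not prove it, which is why rigorous verification matters.

```python
import numpy as np

# Hypothetical stable closed-loop dynamics x_{k+1} = A x_k (illustration only):
# a rotation by theta combined with a contraction by a factor of 0.9.
theta = 0.3
A = 0.9 * np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])

def lyapunov_value(x):
    """Candidate Lyapunov function V(x) = ||x||^2, zero only at the origin."""
    return float(x @ x)

def decreases_along_trajectory(x0, steps=50):
    """Return True if V strictly decreases at every step starting from x0."""
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        x_next = A @ x
        if lyapunov_value(x_next) >= lyapunov_value(x):
            return False
        x = x_next
    return True

# Spot-check random initial states. This is evidence, not a guarantee.
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(100, 2))
all_decrease = all(decreases_along_trajectory(x0) for x0 in samples)
```

In this toy system the check passes for every sampled state because each step shrinks the state's norm by a factor of 0.9. For a neural network controller no such closed-form argument is available, so the decrease condition has to be verified by other means.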

In an exciting advancement, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and partner institutions have developed innovative techniques to rigorously validate Lyapunov calculations in sophisticated systems. Their newly created algorithm efficiently searches for and verifies a Lyapunov function, offering a stability guarantee for various robotic configurations. This breakthrough could pave the way for safer deployment of robots and autonomous vehicles, including aircraft and spacecraft.

To outperform previous algorithms, the research team found a pragmatic shortcut in the training and verification process. They generated cheaper counterexamples, such as adversarial sensor data that could throw off a robot's control system, and then optimized the robotic system to account for them. Learning from these edge cases helped the machines handle difficult circumstances, allowing the robots to operate safely under a wider range of conditions than was previously achievable. The researchers also devised a novel verification formulation that uses a scalable neural network verifier, α,β-CROWN, to provide rigorous worst-case guarantees that go beyond the counterexamples.
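The general pattern of training on cheap counterexamples and then running a rigorous check can be sketched on a toy problem. The Python below is an illustration under loud assumptions, not the researchers' algorithm: the linear dynamics `A`, the one-parameter candidate V(x) = x1² + c·x2², and the functions `find_counterexample`, `verify`, and `train` are all hypothetical. An exact eigenvalue test stands in for a neural network verifier such as α,β-CROWN, which is possible only because this toy problem is quadratic.

```python
import numpy as np

# Hypothetical closed-loop dynamics x_{k+1} = A x_k (illustration only).
A = np.array([[0.9, 0.5],
              [0.0, 0.9]])
MARGIN = 0.01  # required decrease of V per step, measured on the unit circle

def decrease_matrix(c):
    """M such that V(Ax) - V(x) = x^T M x for V(x) = x1^2 + c * x2^2."""
    P = np.diag([1.0, c])
    return A.T @ P @ A - P

def find_counterexample(c, n_angles=721):
    """Cheap falsifier: scan unit-circle samples for the worst violation."""
    angles = np.linspace(0.0, np.pi, n_angles)
    points = np.stack([np.cos(angles), np.sin(angles)], axis=1)
    change = np.einsum("ni,ij,nj->n", points, decrease_matrix(c), points)
    worst = int(np.argmax(change))
    return points[worst] if change[worst] > -MARGIN else None

def verify(c):
    """Exact stand-in verifier: V decreases everywhere (except the origin)
    if and only if M is negative definite."""
    return bool(np.max(np.linalg.eigvalsh(decrease_matrix(c))) < 0.0)

def train(c=1.0, lr=2.0, max_rounds=1000):
    """Alternate between finding counterexamples and updating c on them."""
    buffer = []
    for _ in range(max_rounds):
        x = find_counterexample(c)
        if x is None:  # the falsifier found nothing; hand over to the verifier
            break
        buffer.append(x)
        M = decrease_matrix(c)
        for p in buffer:
            if p @ M @ p > -MARGIN:  # hinge loss is active for this point
                # d/dc [V(Ap) - V(p)] = (A p)[1]**2 - p[1]**2
                grad = (A @ p)[1] ** 2 - p[1] ** 2
                c -= lr * grad  # gradient descent step on the hinge loss
    return c, len(buffer)

c_final, n_counterexamples = train()
is_stable = verify(c_final)
```

The initial guess `c = 1.0` fails the exact check, and each round adds the worst sampled violation to the training buffer until the falsifier can no longer find one. Only then is the expensive, rigorous check run, which mirrors the division of labor described above: counterexamples are cheap and guide training, while the verifier supplies the worst-case guarantee.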

“While we’ve observed impressive performance in AI-controlled machines like humanoid robots and robotic dogs, these AI systems often lack the formal guarantees essential for safety-critical operations,” says Lujie Yang, an MIT electrical engineering and computer science (EECS) PhD student and co-lead author of the recent study alongside Toyota Research Institute researcher Hongkai Dai. “Our research effectively bridges the gap between the high-performance capabilities of neural network controllers and the necessary safety assurances required for the deployment of complex neural networks in real-world applications.”

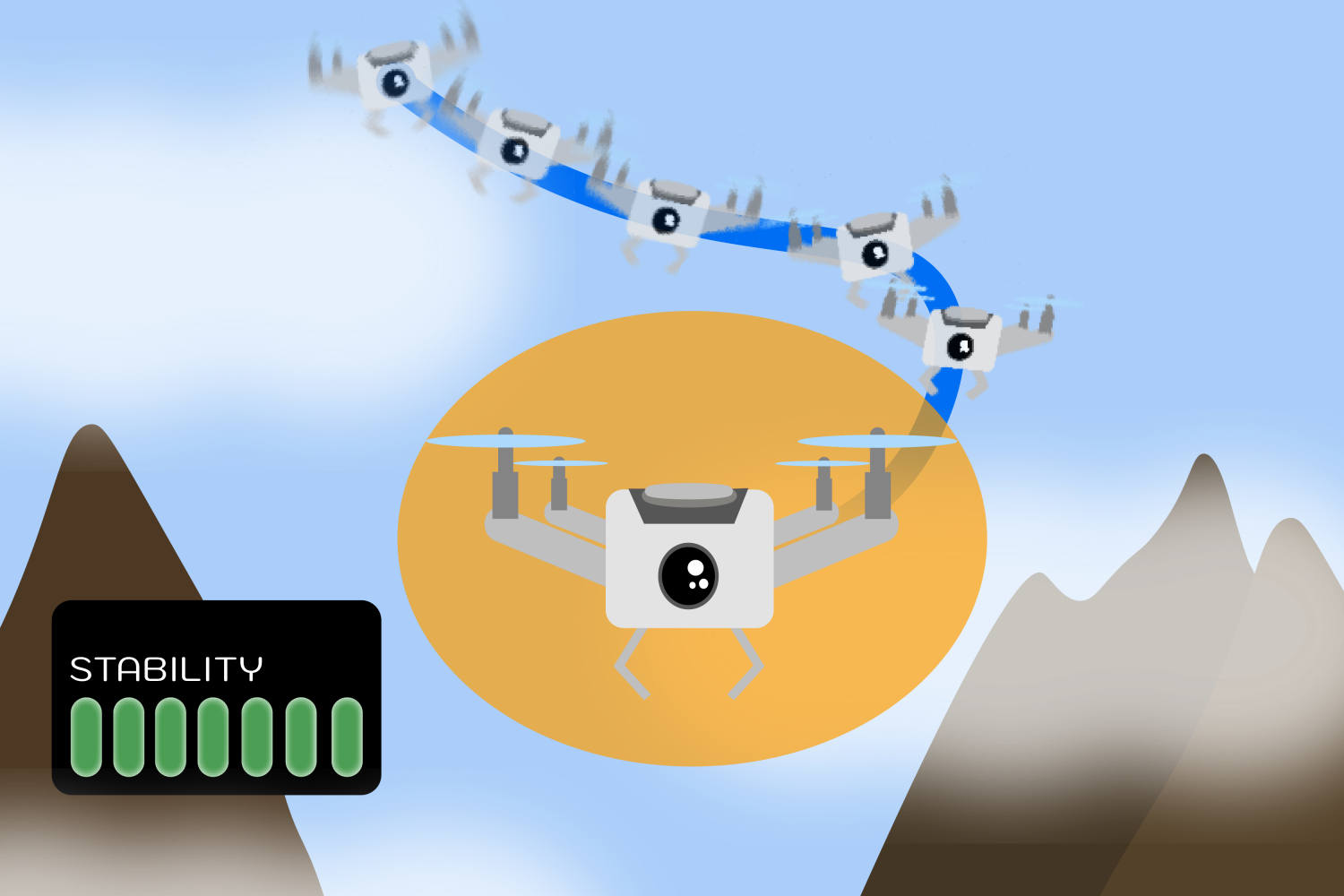

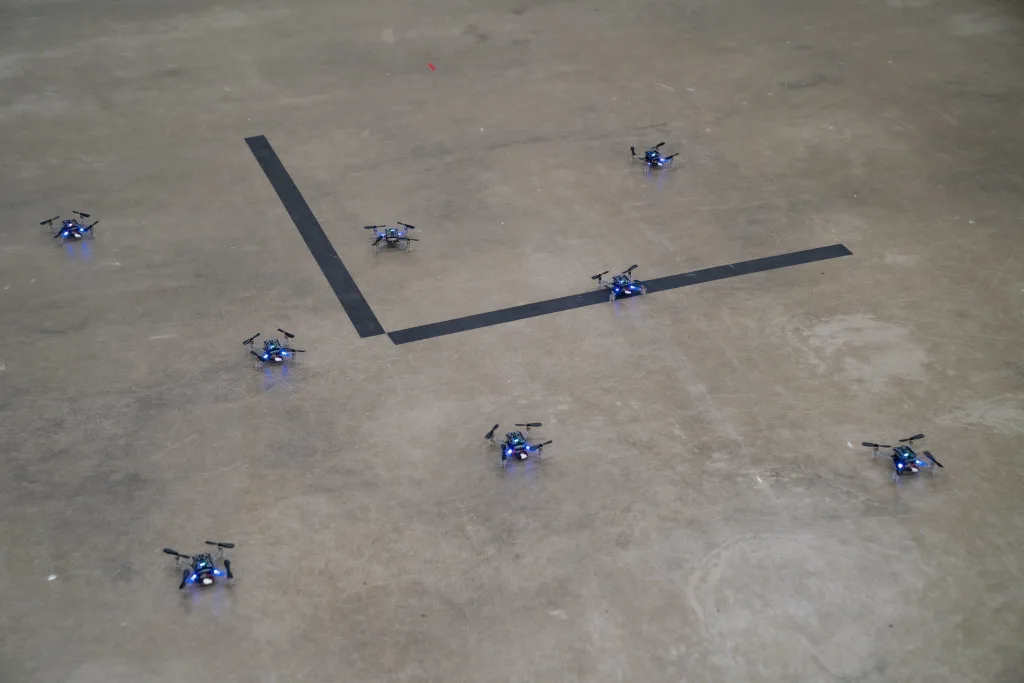

For a practical demonstration, the team simulated a quadrotor drone that uses lidar sensors to stabilize itself in a two-dimensional environment. Their algorithm guided the drone to a steady hover position using only the limited environmental information provided by the lidar sensors. In further experiments, their method enabled the stable operation of two other simulated robotic systems, an inverted pendulum and a path-tracking vehicle, across a broader range of conditions. These experiments, though relatively modest, are more complex than what earlier neural network verification efforts could handle, particularly because they included sensor models.

“Unlike typical machine learning challenges, properly employing neural networks as Lyapunov functions involves tackling difficult global optimization problems, making scalability crucial,” notes Sicun Gao, an associate professor of computer science and engineering at the University of California at San Diego. Gao, who did not participate in this research, emphasizes that this work significantly contributes by developing algorithmic strategies optimized for the specific application of neural networks in control problems. This advancement marks a substantial improvement in both scalability and solution quality over existing methods, and it opens thrilling avenues for the future of optimization algorithms in neural Lyapunov techniques as well as the broader use of deep learning in robotics and control systems.

Yang and her colleagues’ stability approach offers a wealth of potential applications where safety is non-negotiable. It could play a critical role in ensuring a smooth ride for autonomous vehicles like planes and spacecraft while enhancing the reliability of drones conducting deliveries or mapping diverse terrains.

The techniques introduced here are versatile and extend beyond robotics; they hold promise for various fields such as biomedicine and industrial processing in the years to come.

While this technique shows improvement in scalability compared to previous methodologies, the researchers are also investigating ways to enhance its performance for high-dimensional systems. They aim to incorporate additional data inputs beyond lidar readings, such as images and point clouds.

Looking ahead, the team hopes to extend the same stability guarantees to systems that operate in uncertain environments and are subject to disturbances. For example, they want to ensure that a drone can maintain steady flight and complete its tasks even when facing strong wind gusts.

Moreover, the researchers intend to apply their methods to optimization challenges aimed at minimizing both the time and distance a robot requires for task completion while maintaining stability. They plan to adapt their techniques for humanoid robots and other real-world systems, where maintaining stability during contact with various surfaces is critical.

Russ Tedrake, the Toyota Professor of EECS, Aeronautics and Astronautics, and Mechanical Engineering at MIT, serves as a senior author on this research. The paper also credits UCLA PhD student Zhouxing Shi and associate professor Cho-Jui Hsieh, as well as University of Illinois Urbana-Champaign assistant professor Huan Zhang. Their work received support from numerous organizations, including Amazon, the National Science Foundation, the Office of Naval Research, and the AI2050 program at Schmidt Sciences. The findings will be presented at the 2024 International Conference on Machine Learning.

Photo credit & article inspired by: Massachusetts Institute of Technology