One of the top priorities for many automation enthusiasts is tackling the mundane world of household chores.

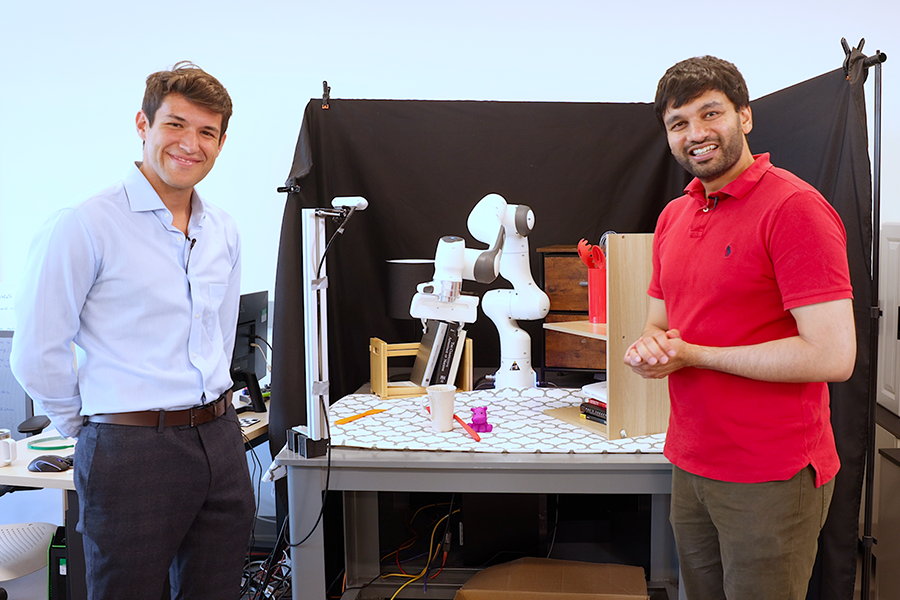

For roboticists, the ultimate goal is a combination of hardware and software that lets machines learn “generalist” policies: strategies that guide robot behavior across a wide variety of environments and conditions. If you own a home robot, however, you probably care more about how well it works in your home than in your neighbor’s. With that in mind, researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) set out to make it easier to train robust robot policies tailored to a specific environment.

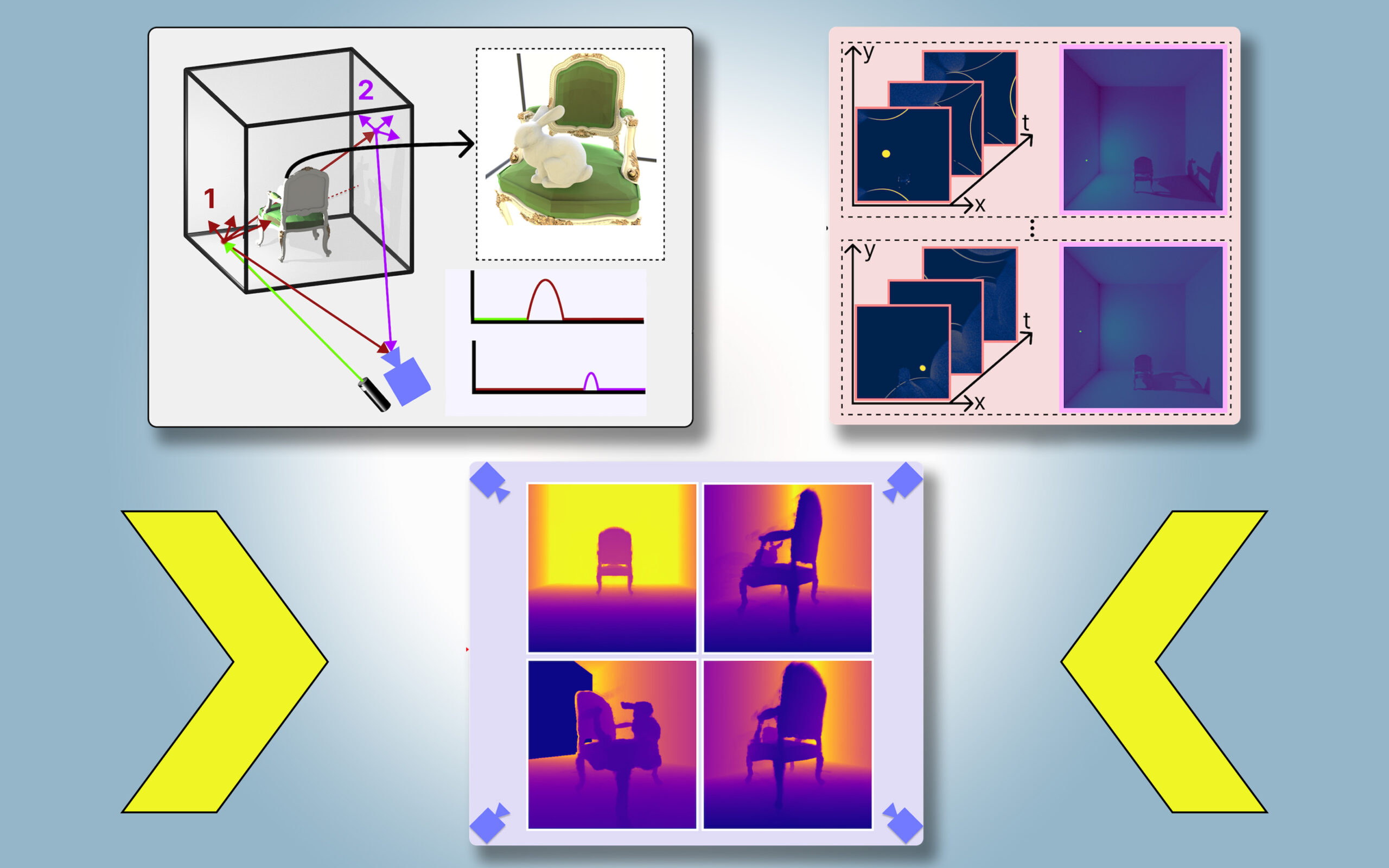

“Our objective is to equip robots with the ability to perform exceptionally well despite distractions, varying lighting conditions, and changes in object arrangements, all within a defined environment,” explains Marcel Torne Villasevil, a research assistant at MIT CSAIL and lead author of a recent paper on the work. “We propose a method to create digital twins in real time using the latest advancements in computer vision. By simply capturing a scene with their smartphones, users can build digital replicas of the real world, enabling robots to train in simulated environments much faster than they would in the physical world, thanks to GPU parallelization. This technique alleviates the burden of extensive reward engineering, utilizing a few real-world demonstrations to kickstart the training process.”

Transforming Your Home Environment

The project, called RialTo, takes a bit more than waving your phone and instantly having a personal robot at your service. The process starts with using your phone to scan the target environment with tools such as NeRFStudio, ARCode, or Polycam. Once the scene is reconstructed, users can upload it to RialTo’s interface to make detailed adjustments, such as adding necessary joints for the robots.

Once refined, the scene is imported into a simulator. There, the goal is to develop a policy based on real-world actions, such as picking up a cup from a counter. Replicating these real-world demonstrations in simulation generates valuable data for reinforcement learning. “This method aids in forming strong policies that are effective in both simulated and real-world contexts,” adds Torne.

In testing, RialTo produced strong policies across a variety of tasks, with a 67% improvement over imitation learning using the same number of demonstrations. The tasks included opening a toaster, placing a book on a shelf, and putting a plate on a rack, among others. Each task was tested at three increasing levels of difficulty: randomized object positions, added visual distractions, and physical disturbances applied while the task was being performed. When paired with real-world data, RialTo outperformed traditional imitation-learning approaches, especially in scenarios with many visual distractions or physical disruptions.

“The results indicate that leveraging digital twins is a much more effective strategy for achieving robustness in specific environments than extensive data collection across diverse settings,” states Pulkit Agrawal, MIT associate professor of electrical engineering and computer science (EECS), principal investigator at MIT CSAIL, and senior author of the study.

One current limitation of RialTo is training time: training takes three days. To speed this up, the team is exploring improvements to the underlying algorithms and the use of foundation models. Simulating some complex real-world scenarios, such as deformable objects or liquids, also remains challenging.

Elevating the Future of Robotics

What’s next for RialTo? Building on their previous work, the team is focused on preserving robustness against various disturbances while improving the model’s adaptability to new environments. “Our next target is to utilize pre-trained models to speed up the learning process, reduce human intervention, and attain broader generalization capabilities,” says Torne.

“We are exceptionally excited about our concept of ‘on-the-fly’ robot programming, where robots can autonomously scan their surroundings and learn to solve specific tasks within a simulation. While our current methodology has certain limitations, such as needing initial human demonstrations and considerable training time, we view it as a significant advancement towards achieving genuine ‘on-the-fly’ robot learning and deployment,” Torne continues. “This strategy brings us closer to a future where robots don’t need a universal policy that anticipates every scenario but can swiftly learn new tasks without excessive real-world exposure. Such a breakthrough could significantly accelerate the practical application of robotics beyond what traditional all-encompassing policies allow.”

“Traditionally, researchers have relied on imitating expert data, which can be costly, or reinforcement learning, which carries potential safety risks,” comments Zoey Chen, a computer science Ph.D. student at the University of Washington who was not involved in the study. “RialTo addresses both the safety issues associated with real-world reinforcement learning and the efficiency requirements for data-driven learning methods by employing its unique real-to-sim-to-real pipeline. This approach not only ensures secure and robust simulation training prior to real-world application but also greatly enhances data collection efficiency. RialTo has the potential to dramatically scale robot learning, enabling robots to navigate complex real-world situations much more effectively.”

“Simulation has demonstrated remarkable capabilities on real robots by offering inexpensive, seemingly infinite data for policy learning,” adds Marius Memmel, a Ph.D. student at the University of Washington who was not involved with the project. “However, these methods have been restricted to specific scenarios, and creating the corresponding simulations can be costly and time-consuming. RialTo makes it possible to reconstruct real-world environments in minutes rather than hours. Moreover, it effectively uses gathered demonstrations during policy learning, alleviating the operator’s workload and decreasing the sim-to-real gap while demonstrating impressive robustness to object positioning and disturbances in real-world performance.”

Torne wrote the paper with senior authors Abhishek Gupta, an assistant professor at the University of Washington, and Agrawal. Other CSAIL contributors include EECS Ph.D. student Anthony Simeonov, research assistant Zechu Li, undergraduate student April Chan, and Ph.D. student Tao Chen. Members of the Improbable AI Lab and the WEIRD Lab also provided valuable feedback and support in developing the project.

This research was funded in part by the Sony Research Award, the U.S. government, and Hyundai Motor Co., with assistance from the WEIRD Lab. The study was recently presented at the Robotics: Science and Systems (RSS) conference.

Photo credit & article inspired by: Massachusetts Institute of Technology