When it comes to mastering new skills, the age-old adage “practice makes perfect” applies not just to humans but also to robots navigating uncharted territories. Imagine a robot unpacked in a vast warehouse—armed with foundational skills like object placement but unfamiliar with its new environment. Initially, it may struggle to pick items from a shelf due to a lack of situational awareness. To enhance its performance, the robot needs to identify which specific skills require improvement and focus on refining its approach.

While a human could intervene and manually program the robot to optimize its tasks, research from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and The AI Institute offers an alternative. Their “Estimate, Extrapolate, and Situate” (EES) algorithm lets robots practice on their own and improve their skills, which could make them more adept at tasks in settings such as factories, homes, and hospitals.

Understanding the Task at Hand

The EES algorithm works with a vision system that lets the robot perceive and track its surroundings. It first estimates how reliably the robot performs an action, such as sweeping a floor, and whether that skill is worth practicing further. It then predicts how much improving that skill would raise performance on the overall task, and directs practice accordingly. After each attempt, the vision system checks whether the action was performed correctly, providing the feedback the algorithm relies on.
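To make the estimate, predict, and practice loop described above more concrete, here is a minimal Python sketch. It is not the researchers' implementation: the `Skill` class, the smoothed success-rate estimate, the fixed `gain` used to guess post-practice competence, and the assumption that a task succeeds only if every skill succeeds are all hypothetical simplifications chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    name: str
    successes: int = 0
    attempts: int = 0

    def estimate(self) -> float:
        """Estimate: smoothed success rate (add-one smoothing, an assumption)."""
        return (self.successes + 1) / (self.attempts + 2)

    def extrapolate(self, gain: float = 0.1) -> float:
        """Extrapolate: guessed competence after more practice.

        Assumes a fixed optimistic gain, capped at 1.0 (hypothetical model).
        """
        return min(1.0, self.estimate() + gain)

    def record(self, success: bool) -> None:
        """Store the outcome of one practice attempt (e.g. from a vision check)."""
        self.attempts += 1
        self.successes += int(success)


def task_success(skills, override=None):
    """Situate: chance the whole task succeeds if every skill must succeed.

    `override` is an optional (skill, probability) pair used to ask
    "what if this one skill were better?".
    """
    p = 1.0
    for s in skills:
        p *= override[1] if override and s is override[0] else s.estimate()
    return p


def choose_skill_to_practice(skills):
    """Pick the skill whose predicted improvement helps the task most."""
    baseline = task_success(skills)
    return max(
        skills,
        key=lambda s: task_success(skills, (s, s.extrapolate())) - baseline,
    )


if __name__ == "__main__":
    place = Skill("place", successes=9, attempts=10)
    sweep = Skill("sweep", successes=2, attempts=10)
    print(choose_skill_to_practice([place, sweep]).name)
```

With these made-up counts, the sketch picks the sweeping skill, because raising a weak skill's success rate lifts the overall task more than polishing one that already works most of the time. A real system would replace the fixed gain with a learned model of how practice changes competence.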

This technology could prove useful in many environments. If you wanted a robot to tidy your living room, for example, it would need to practice skills such as sweeping. According to Nishanth Kumar SM ’24 and his colleagues, EES can significantly improve the robot’s performance with minimal human oversight and only a few practice trials.

“We started this project with doubts about whether specialization could be achieved efficiently with a limited sample size,” explains Kumar, co-lead author of a publication detailing their findings, and a PhD student in electrical engineering and computer science. “We’ve created an algorithm that allows robots to enhance specific skills meaningfully within a feasible timeframe, using tens or hundreds of data points as opposed to the thousands typically needed in standard reinforcement learning algorithms.”

Spot On: A Case Study

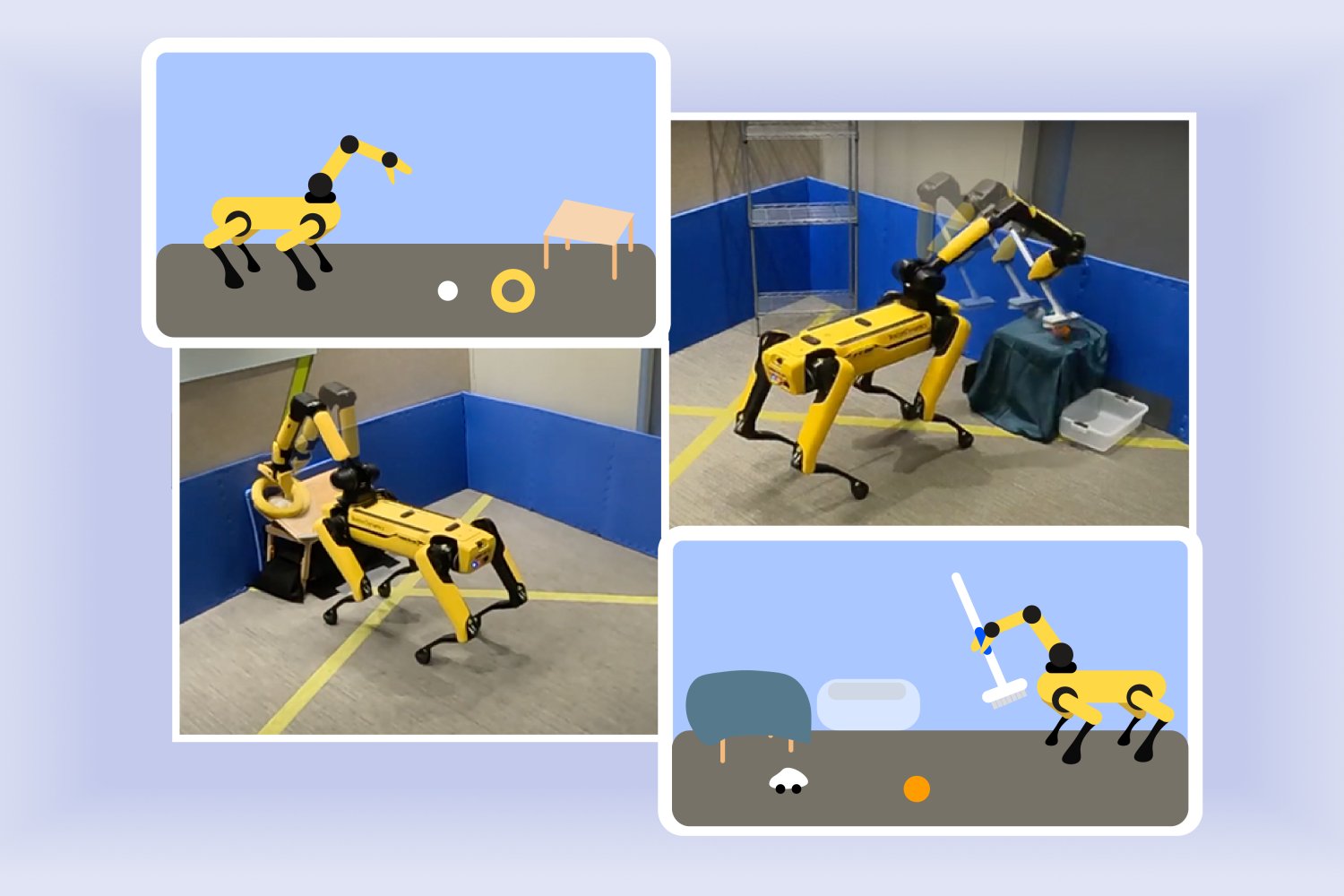

The effectiveness of EES was demonstrated during experiments with Boston Dynamics’ Spot quadruped robot at The AI Institute. Equipped with an arm, Spot learned to perform manipulation tasks after just a few hours of practice. In one instance, the robot mastered the placement of a ball and ring on a sloped surface in about three hours. Additionally, it improved its efficiency in sweeping toys into a bin in roughly two hours—significantly faster than previous methods that could take over ten hours per task.

“Our goal was for the robot to accumulate its own experiences, enabling it to determine which strategies would be most effective in its new environment,” said co-lead author Tom Silver SM ’20, PhD ’24, an alumnus of electrical engineering and computer science from MIT and now an assistant professor at Princeton University. “By concentrating on the robot’s existing knowledge, we aimed to answer the critical question: Which skill should the robot focus on improving at this moment?”

While EES shows promise for automating practice for robots in new environments, it has limitations. For instance, the initial tests used low tables so that the robot could see its objects more easily, and the robot needed a 3D-printed handle to get a better grip on the brush. The robot also failed to detect some items and misidentified the locations of others; the researchers counted these cases as failures.

Innovating Robot Learning

The researchers believe that the practice efficiency seen in their physical trials could be improved further with simulators. By combining real-world practice with virtual training, robots could potentially learn more quickly. In the future, the team may also develop an algorithm that reasons over sequences of practice attempts, refining the learning process further.

“Empowering robots to learn autonomously is both an exciting opportunity and a significant challenge,” remarks Danfei Xu, an assistant professor in the School of Interactive Computing at Georgia Tech and a research scientist at NVIDIA AI, who was not involved in this study. “As home robots become increasingly common, they will need the ability to adapt and learn varied tasks on their own. However, unstructured learning can be slow and fraught with complexities. The work by Silver and his team surfaces as a major advancement in enabling robots to autonomously practice their skills in a systematic manner, paving the way for advancements in home robotics that can evolve and improve independently.”

Co-authors include Stephen Proulx and Jennifer Barry of The AI Institute, along with CSAIL members Linfeng Zhao, Willie McClinton, Leslie Pack Kaelbling, and Tomás Lozano-Pérez. The research was supported by The AI Institute, the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, the U.S. Office of Naval Research, the U.S. Army Research Office, and MIT Quest for Intelligence, among others. The team also used high-performance computing resources from the MIT SuperCloud and Lincoln Laboratory Supercomputing Center.

Photo credit & article inspired by: Massachusetts Institute of Technology