As the digital landscape evolves, more people are turning to online platforms for mental health support, drawn by anonymity and community. The trend matters all the more because over 150 million people in the United States live in federally designated mental health professional shortage areas.

“I desperately need help, but I’m too fearful to speak to a therapist, and I can’t find one either.”

“Am I being unreasonable for feeling hurt due to my husband making jokes about me to his friends?”

“Could someone please weigh in on my situation and help me decide my next steps?”

These poignant remarks capture the experiences of Reddit users, who turn to this social platform’s community-driven forums, known as ‘subreddits’, for advice and support.

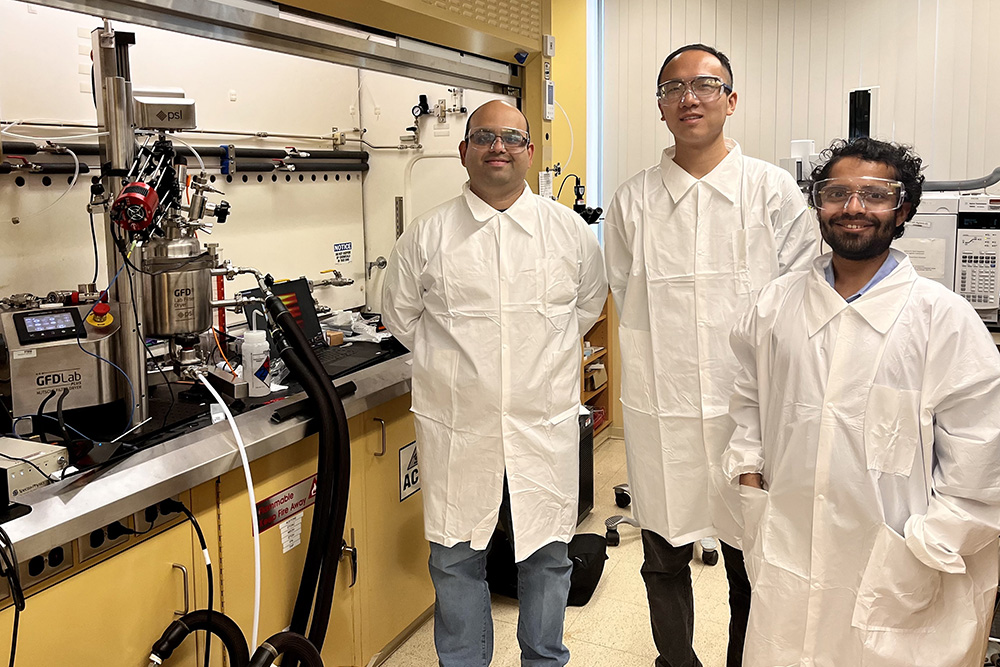

In a study built on a dataset of 12,513 posts and 70,429 responses from 26 mental health subreddits, researchers from MIT, New York University (NYU), and the University of California, Los Angeles (UCLA) developed a framework for evaluating the equity and quality of mental health support provided by chatbots powered by large language models (LLMs) such as GPT-4. The work was presented at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP).

The researchers enlisted the expertise of two licensed clinical psychologists to assess a random sample of 50 Reddit posts seeking mental health guidance, comparing responses from Reddit users with those generated by GPT-4. Unaware of which responses were human and which were AI-generated, the psychologists evaluated each reply for its empathetic quality.

The potential of AI chatbots to widen access to mental health support has been explored before, but tools such as OpenAI’s ChatGPT are reshaping human-AI interaction, making it increasingly difficult to tell human empathy apart from AI-generated responses.

However, using AI for mental health support carries real risks. In 2023, a Belgian man died by suicide after a conversation with ELIZA, a chatbot built on the GPT-J language model and designed to emulate a psychotherapist. That same year, the National Eating Disorders Association took its chatbot Tessa offline after it began giving harmful dieting advice.

Saadia Gabriel, now an assistant professor at UCLA, admits she was initially skeptical of how effective AI chatbots could be in mental health contexts. She conducted this research as a postdoc in MIT’s Healthy Machine Learning Group, led by Marzyeh Ghassemi, a prominent researcher in machine learning for health.

The study found that GPT-4’s responses were more empathetic overall and 48% more effective at encouraging positive behavioral change than human responses.

However, a bias evaluation uncovered a troubling disparity: GPT-4’s empathy levels were 2 to 15% lower for Black users and 5 to 17% lower for Asian users than for white users or users whose race was unknown.

To assess potential biases, the researchers included posts containing both explicit demographic information (e.g., “I am a 32-year-old Black woman”) and implicit cues (e.g., “As a 32-year-old girl with natural hair”).

Except in responses to Black female users, GPT-4’s answers were generally less influenced by demographic cues than human responses; human responders tended to show more empathy when a post implied demographic information.

Gabriel emphasizes that how the input to an LLM is structured, along with contextual guidance such as whether it should respond in a clinical or a casual tone, significantly influences the output.

The research suggests that explicitly instructing LLMs to take demographic characteristics into account can mitigate bias: this was the only condition in which the researchers observed no significant difference in empathy across demographic groups.

Gabriel hopes this research will encourage more thorough evaluation of LLMs deployed in clinical settings across diverse demographic subgroups.

“LLMs are already being harnessed to provide direct support to patients and are increasingly used within healthcare environments, often aimed at streamlining inefficient systems,” Ghassemi notes. “While our findings show that cutting-edge LLMs are generally more resilient against demographic biases compared to human responders when it comes to peer-to-peer mental health support, there is a pressing need for improvements to ensure equitable responses across different patient demographics.”

Photo credit & article inspired by: Massachusetts Institute of Technology