Machine-learning models can fail when they make predictions for individuals who were underrepresented in the datasets they were trained on. For instance, a model that recommends the best treatment option for a patient with a chronic illness might be trained on a dataset made up mostly of male patients. Once deployed in a hospital, that model could make incorrect predictions for female patients.

To counteract this problem, engineers often balance their training datasets by removing data points until every subgroup is equally represented. Although well-intentioned, this approach often requires discarding a large amount of data, which can hurt the model's overall performance.
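To make that cost concrete, here is a minimal Python sketch of the simplest form of balancing: randomly downsampling every subgroup to the size of the smallest one. The function name `balance_by_subsampling` and the `group_of` callback are illustrative assumptions, not part of any particular library or of the MIT work.

```python
import random
from collections import defaultdict


def balance_by_subsampling(examples, group_of, seed=0):
    """Downsample every subgroup to the size of the smallest subgroup.

    examples: a list of training examples (any type).
    group_of: a function mapping an example to its subgroup label.
    Returns (kept_examples, number_discarded).
    """
    if not examples:
        return [], 0
    # Bucket the examples by subgroup.
    by_group = defaultdict(list)
    for ex in examples:
        by_group[group_of(ex)].append(ex)
    # Every subgroup is cut down to the size of the smallest one.
    target = min(len(members) for members in by_group.values())
    rng = random.Random(seed)
    kept = []
    for members in by_group.values():
        kept.extend(rng.sample(members, target))  # keep `target` members at random
    return kept, len(examples) - len(kept)
```

With 900 examples from one group and 100 from another, the sketch keeps 100 of each and discards 800 majority-group examples, which illustrates how much usable data naive balancing can throw away.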

MIT researchers have developed a technique that identifies and removes the specific points in a training dataset that contribute most to a model's failures on minority subgroups. Because it removes far fewer data points than conventional balancing, the approach preserves the model's overall accuracy while improving its performance on underrepresented groups.

The technique can also uncover hidden sources of bias in a training dataset that lacks labels. This matters because, in many applications, unlabeled data are far more plentiful than labeled data.

The method could also be combined with other approaches to improve the fairness of machine-learning models deployed in high-stakes settings such as health care. For example, it might someday help ensure that underserved patients aren't misdiagnosed because of a biased AI model.

“Many algorithms attempting to tackle this issue mistakenly assume every single data point has an equal influence on model outcomes. Our research demonstrates that this assumption is flawed. We can pinpoint specific data points that drive bias, remove them, and achieve superior performance,” says Kimia Hamidieh, an electrical engineering and computer science (EECS) graduate student at MIT and co-lead author of a paper on this technique.

She is joined on the paper by co-lead authors Saachi Jain PhD '24 and fellow EECS graduate student Kristian Georgiev; Andrew Ilyas, a Stein Fellow at Stanford University; and senior authors Marzyeh Ghassemi, an associate professor in EECS and a member of the Institute for Medical Engineering and Science, and Aleksander Madry, the Cadence Design Systems Professor at MIT. The research will be presented at the Conference on Neural Information Processing Systems.

Removing Problematic Instances

Machine-learning models are typically trained on massive datasets gathered from many sources across the internet. These datasets are often far too large to curate by hand, so they may contain bad examples that hurt model performance.

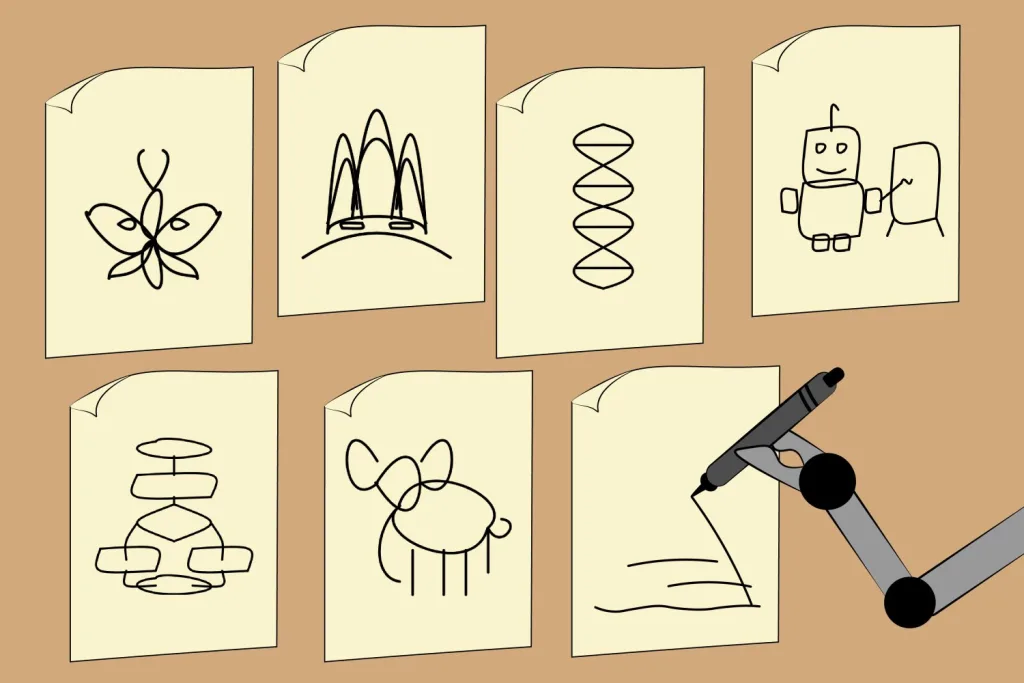

Researchers also know that some data points affect a model's performance on certain downstream tasks more than others. Building on this insight, the MIT team developed a technique that identifies and removes these problematic data points. It targets a problem known as worst-group error, which occurs when a model performs poorly on minority subgroups in a training dataset.
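Worst-group accuracy is simply the accuracy on whichever subgroup the model handles worst. A minimal sketch, assuming predictions, true labels, and subgroup labels arrive as non-empty parallel sequences (the function name is hypothetical):

```python
from collections import defaultdict


def worst_group_accuracy(preds, labels, groups):
    """Return (lowest per-group accuracy, label of that group).

    preds, labels, groups: parallel, non-empty sequences.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for p, y, g in zip(preds, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    # Accuracy computed separately within each subgroup.
    per_group = {g: correct[g] / total[g] for g in total}
    worst = min(per_group, key=per_group.get)
    return per_group[worst], worst
```

For predictions `[1, 1, 0, 0, 1, 0]` against labels `[1, 1, 0, 1, 0, 0]` with groups `a, a, a, b, b, b`, average accuracy is 4/6, yet worst-group accuracy is only 1/3 (group `b`). An average can hide exactly the failure this metric exposes.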

The new technique builds on the team's prior work, in which they introduced a method called TRAK that identifies the training examples most important for a specific model output. In the new work, they take the incorrect predictions the model made for minority subgroups and use TRAK to determine which training examples contributed most to those incorrect predictions.

“By aggregating this information across bad test predictions in the right way, we can identify which parts of the training data are driving worst-group accuracy down,” Ilyas explains.
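One way to picture the aggregation step, offered as a sketch under stated assumptions rather than the researchers' actual scoring rule: suppose an attribution method such as TRAK has already produced a matrix `scores` whose entry `[i, j]` estimates how much training example `i` raises the correct-class margin of test example `j`. Summing each training example's scores over only the misclassified test examples from the target subgroup, then taking the most negative totals, flags the examples that pushed those predictions furthest toward the wrong answer. The sign convention, the plain sum, and the name `harmful_training_examples` are all assumptions, and computing the attribution scores themselves is out of scope here.

```python
import numpy as np


def harmful_training_examples(scores, test_groups, test_correct, target_group, k):
    """Flag the k training examples that most hurt failing target-group predictions.

    scores: (n_train, n_test) array. ASSUMED convention: scores[i, j] > 0 means
        training example i raises the correct-class margin of test example j.
        The scores are taken as precomputed (e.g. by an attribution method).
    test_groups: (n_test,) subgroup label of each test example.
    test_correct: (n_test,) bools, whether the model got each test example right.
    target_group: subgroup whose failures we want to explain.
    k: number of training examples to flag.
    """
    scores = np.asarray(scores, dtype=float)
    # Keep only misclassified test examples from the target subgroup.
    failing = (np.asarray(test_groups) == target_group) & ~np.asarray(test_correct, dtype=bool)
    # Total estimated effect of each training example on those failures.
    total_effect = scores[:, failing].sum(axis=1)
    # Most negative totals pushed the failing predictions furthest from correct.
    return np.argsort(total_effect, kind="stable")[:k]
```

Failures outside the target subgroup are ignored on purpose: a training example that only hurts majority-group predictions is not flagged, which keeps the removal focused on worst-group error.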

They then remove those specific samples and retrain the model on the remaining data. Because more data generally yields better overall performance, removing only the samples that drive worst-group failures preserves the model's overall accuracy while boosting its performance on minority subgroups.
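Once the flagged indices are known, the remove-and-retrain step is short. In this sketch, a toy nearest-centroid classifier stands in for a real training routine (it is not the model used in the study), and `remove_and_retrain`, `fit_centroids`, and `predict` are hypothetical names chosen for illustration.

```python
import numpy as np


def fit_centroids(X, y):
    """Toy stand-in for a real training routine: a nearest-centroid classifier."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict(model, X):
    """Assign each row of X to the class with the closest centroid."""
    classes, centroids = model
    sq_dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[sq_dists.argmin(axis=1)]


def remove_and_retrain(X, y, flagged, train_fn=fit_centroids):
    """Drop the flagged training indices and retrain on everything else.

    Returns (new_model, number_of_examples_kept).
    """
    keep = np.ones(len(X), dtype=bool)
    keep[np.asarray(flagged, dtype=int)] = False
    return train_fn(X[keep], y[keep]), int(keep.sum())
```

For example, with one-dimensional points `0` and `1` labeled 0, `10` and `11` labeled 1, and a stray point `9` labeled 0, the stray point drags the class-0 centroid to about 3.3, so a query at 6 is assigned label 0. Dropping that single index and retraining moves the centroid to 0.5, and the same query is assigned label 1, while the other four examples are kept.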

An Accessible Solution

Across three machine-learning datasets, the method outperformed multiple existing techniques. In one instance, it boosted worst-group accuracy while removing about 20,000 fewer training samples than a conventional data-balancing method. The technique also achieved higher accuracy than methods that require changes to a model's inner workings.

Because the MIT technique focuses on modifying the dataset itself, it presents a more accessible solution for practitioners, applicable across a variety of machine-learning models.

It can also be used when the bias is unknown because the subgroups in a training dataset are not labeled. By identifying the data points that contribute most to a feature the model is learning, researchers can understand which variables the model relies on to make its predictions.

“This is a tool that anyone engaged in machine learning can employ. They can examine these data points and assess whether they align with their modeling objectives,” says Hamidieh.

Using the technique to detect unknown subgroup bias still requires some intuition about which groups to look for, so the researchers hope to validate and explore it further through human studies. They also aim to improve the technique's performance and reliability and to keep it accessible and easy to use for practitioners who may someday deploy it in real-world settings.

“Having tools that encourage critical examination of data and identification of problematic data points is a crucial first step toward developing models that are fairer and more dependable,” concludes Ilyas.

This research is partially supported by funding from the National Science Foundation and the U.S. Defense Advanced Research Projects Agency.

Photo credit & article inspired by: Massachusetts Institute of Technology