For roboticists, generalization is a central challenge: building machines that can adapt to diverse environments and conditions. Since the 1970s, the field has moved from writing complex programs to using deep learning, which lets robots learn directly from human behavior. A major bottleneck remains, however: the quality of training data. To improve, robots need to encounter scenarios that push the limits of their capabilities, and that has typically required human guidance. As robots become more advanced, the demand for high-quality data increasingly outpaces the human effort available to supply it.

In response, researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a training approach that could speed the deployment of versatile, intelligent robots in the real world. Called “LucidSim,” the system combines generative AI with physics simulators to create varied, realistic virtual training environments, so that robots can learn complex tasks without any real-world data.

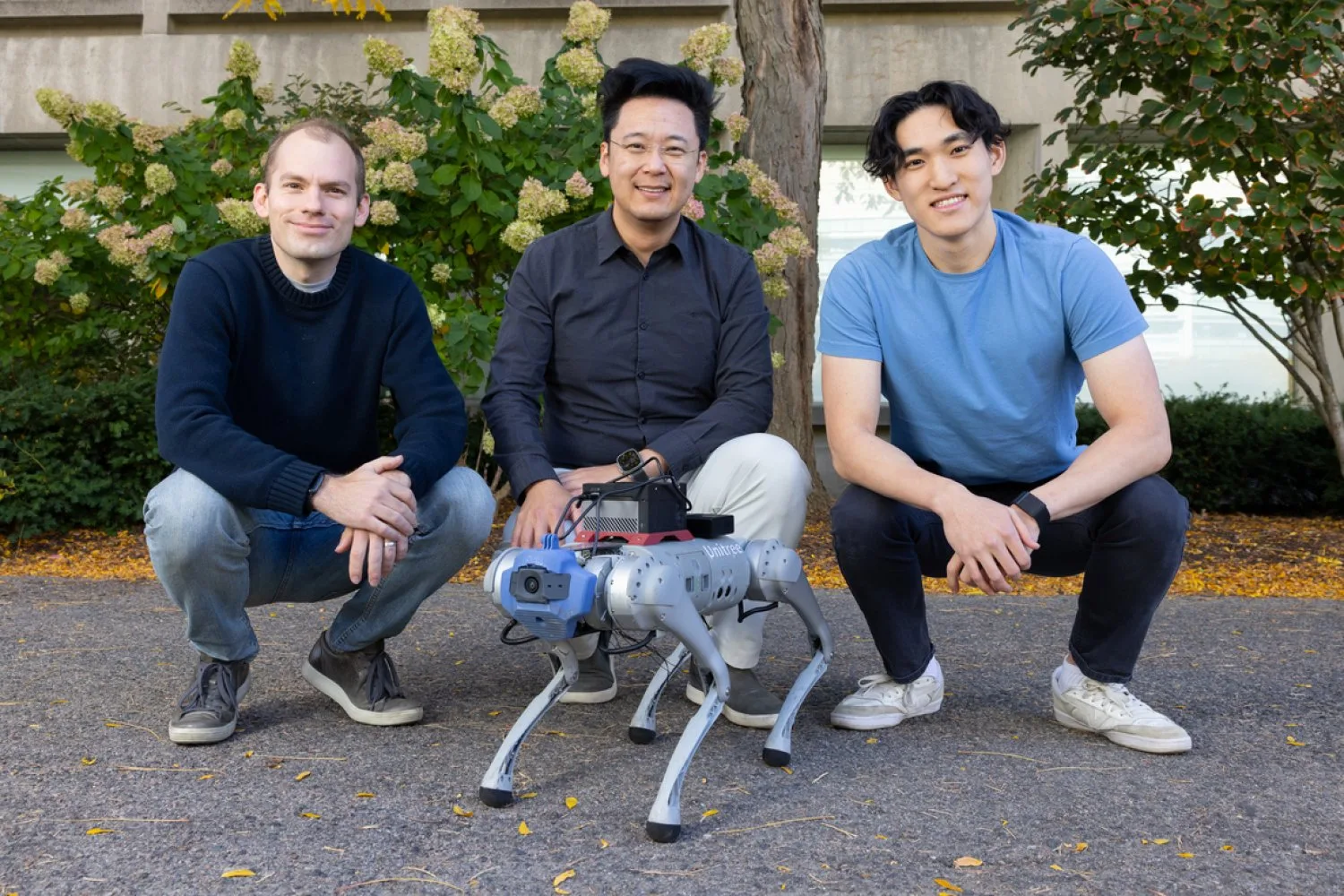

LucidSim uniquely melds physics simulation with generative AI, tackling the enduring problem of transferring skills learned in simulated contexts to real-world applications. “A key challenge in robotic learning has always been the ‘sim-to-real gap’—the disparity that exists between simulated training environments and the unpredictable complexities of the real world,” explains Ge Yang, a postdoctoral researcher at MIT CSAIL and a leading mind behind LucidSim. “Previous methodologies typically relied on depth sensors which simplified the challenge at the expense of overlooking vital real-world intricacies.”

The system combines several technologies. At its foundation, LucidSim uses large language models to generate detailed, structured descriptions of environments. Generative models then turn these descriptions into images, while a physics simulator guides the process so that the images stay consistent with real-world physics.

Inception of an Idea: From Casual Conversation to Innovation

The idea for LucidSim came from an unexpected place: a conversation outside Beantown Taqueria in Cambridge, Massachusetts. “We initially aimed to teach vision-equipped robots through human feedback,” shares Alan Yu, an undergraduate in electrical engineering and computer science (EECS) at MIT and co-lead author on LucidSim. “But as our conversation unfolded, it became clear we lacked a vision-based policy to begin with. That’s when the idea struck us.”

To generate their training data, the team extracted two kinds of information from the simulated environments: depth maps, which provide geometric data, and semantic masks, which label the different components of an image. They soon found, however, that tightly controlling image composition produced repetitive images that differed little from one another. To add variety, they sourced diverse text prompts from ChatGPT.

This approach initially produced only single images, but the team wanted short, coherent videos that could serve as simulated “experiences” for the robots. They developed a technique called “Dreams In Motion,” which computes how each pixel moves between frames and uses that motion to turn a single generated image into a short, multi-frame video. The computation takes into account the 3D geometry of the scene and the changes in the robot’s perspective.
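The idea of deriving pixel movement from scene geometry and a change in viewpoint can be illustrated with a small sketch. This is not the LucidSim implementation; it assumes a simple pinhole camera, a per-pixel depth map, and a known rigid motion between two views. All names here (`pixel_flow`, `focal`, `cx`, `cy`, and so on) are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                focal: float, cx: float, cy: float) -> Vec3:
    """Lift pixel (u, v) with known depth to a 3D point in the first camera frame."""
    return ((u - cx) * depth / focal, (v - cy) * depth / focal, depth)


def transform(point: Vec3, rotation: Sequence[Sequence[float]],
              translation: Sequence[float]) -> Vec3:
    """Express the point in the second camera frame: R @ point + t (assumed known)."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def project(point: Vec3, focal: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a 3D point back onto the image plane of a pinhole camera."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


def pixel_flow(depth_map: List[List[float]], rotation, translation,
               focal: float, cx: float, cy: float):
    """Return, for every pixel, its (du, dv) displacement between the two views."""
    flow = []
    for v, row in enumerate(depth_map):
        flow_row = []
        for u, depth in enumerate(row):
            moved = transform(backproject(u, v, depth, focal, cx, cy),
                              rotation, translation)
            u2, v2 = project(moved, focal, cx, cy)
            flow_row.append((u2 - u, v2 - v))
        flow.append(flow_row)
    return flow


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Toy 2x2 depth map: the left column is 1 m away, the right column 2 m away.
depth_map = [[1.0, 2.0], [1.0, 2.0]]
# Camera shifts 0.1 m to the right, so points shift 0.1 m left in its frame.
flow = pixel_flow(depth_map, IDENTITY, (-0.1, 0.0, 0.0),
                  focal=100.0, cx=0.5, cy=0.5)
```

In this toy scene the nearer pixels move twice as far as the farther ones, which is the parallax that makes a warped sequence look like footage from a moving camera. A full system would also need to handle occlusions and the holes that appear when pixels move apart.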

“Our approach surpasses domain randomization, a technique established in 2017 that applies random colors and patterns to objects in training environments, and still remains a standard today,” states Yu. “While domain randomization provides diverse data, it lacks realism. LucidSim effectively bridges the gaps in both diversity and realism. It’s remarkable that, even without direct exposure to the real world during training, the robot can identify and navigate obstacles in the physical environment.”
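For contrast, the color part of domain randomization can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the 2017 method or any library's API: the scene is represented as a small semantic mask of integer labels, and the function name `randomize_colors` is hypothetical. Patterns and textures are omitted.

```python
import random
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]


def randomize_colors(mask: List[List[int]], rng: random.Random) -> List[List[Color]]:
    """Paint every object (one integer label in the mask) with a random RGB color."""
    palette: Dict[int, Color] = {}
    image = []
    for row in mask:
        out_row = []
        for label in row:
            if label not in palette:
                # First time this object is seen: draw a color for it.
                palette[label] = (rng.randrange(256),
                                  rng.randrange(256),
                                  rng.randrange(256))
            out_row.append(palette[label])
        image.append(out_row)
    return image


# Toy semantic mask with three objects labeled 0, 1, and 2.
mask = [[0, 0, 1],
        [0, 2, 1]]
# Each seed gives a differently colored rendering of the same scene layout.
variants = [randomize_colors(mask, random.Random(seed)) for seed in range(3)]
```

Each variant keeps the scene layout but recolors its objects arbitrarily, which shows why such data is diverse yet does not resemble real imagery.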

The researchers are particularly enthusiastic about expanding LucidSim’s application beyond quadruped locomotion and parkour, its preliminary testing grounds. One promising domain is mobile manipulation, where robots must handle objects in expansive areas, requiring acute color perception. “Presently, these robots continue to rely on real-world demonstrations,” Yang notes. “Although gathering demonstrations is straightforward, scaling a real-world robot teleoperation setup to train thousands of skills poses a significant challenge due to the need for human involvement in setting up each scenario. We aim to enhance this process, making it much more scalable by transitioning data collection into virtual realms.”

Who Truly Becomes the Expert?

The team evaluated LucidSim against traditional methods in which robots learn from expert demonstrations. The findings were unexpected: robots trained on expert demonstrations succeeded only 15 percent of the time, and even quadrupling the amount of expert training data made little difference. By contrast, when robots used LucidSim to collect their own training data, doubling the dataset size raised success rates to 88 percent. “Our results show a clear trend: providing our robot with more data consistently improves its performance,” explains Yang. “Ultimately, the student can surpass the expert.”

“One crucial issue in sim-to-real transfer for robotics has been achieving visual realism in simulated environments,” comments Shuran Song, an assistant professor of electrical engineering at Stanford University who was not involved in this research. “LucidSim elegantly addresses this challenge by utilizing generative models to produce diverse, highly realistic visual data for any simulation. This advancement may substantially expedite the introduction of robots trained in virtual settings to tackle real-world tasks.”

From the vibrant streets of Cambridge to cutting-edge advancements in robotics, LucidSim is poised to lead the charge toward a new class of intelligent, adaptable machines—capable of learning to navigate our complex world without ever stepping into it.

Yu and Yang collaborated on this research with fellow CSAIL members: Ran Choi, a postdoc in mechanical engineering; Yajvan Ravan, an undergraduate in EECS; John Leonard, the Samuel C. Collins Professor of Mechanical and Ocean Engineering; and Phillip Isola, an associate professor in EECS. Their project received support from a variety of sources, including a Packard Fellowship, a Sloan Research Fellowship, the Office of Naval Research, Singapore’s Defence Science and Technology Agency, Amazon, MIT Lincoln Laboratory, and the National Science Foundation Institute for Artificial Intelligence and Fundamental Interactions. The team presented their findings at the Conference on Robot Learning (CoRL) in early November.

Photo credit & article inspired by: Massachusetts Institute of Technology