Vijay Gadepally, a senior staff member at MIT Lincoln Laboratory, leads a number of projects at the Lincoln Laboratory Supercomputing Center (LLSC). His work focuses on making computing platforms, and the artificial intelligence systems that run on them, more efficient. In this interview, Gadepally discusses the growing use of generative AI in everyday tools, its often overlooked environmental costs, and the steps Lincoln Laboratory and the broader AI community are taking to reduce emissions.

Q: What trends are you observing in the use of generative AI in computing?

A: Generative AI uses machine learning (ML) to create new content, such as text and images, based on the data fed into the system. At the LLSC, we build some of the largest academic computing platforms in the world, and recently we have seen a sharp rise in projects that need high-performance computing for generative AI. Generative AI is also reshaping many fields. ChatGPT, for instance, is already changing classrooms and workplaces faster than regulations seem able to keep up.

Looking ahead, we expect generative AI to be used in many ways over the next decade, including more capable virtual assistants, new approaches to drug discovery, and accelerated scientific research. We can't predict every future use, but it is clear that as algorithms grow more complex, their computational, energy, and climate footprints will grow quickly as well.

Q: What strategies is the LLSC using to reduce its climate impact?

A: We are always looking for ways to make computing more efficient, so that our data center makes the most of its resources and supports scientific progress as sustainably as possible.

For instance, we've reduced the power our hardware consumes through simple changes, much like turning off the lights when you leave a room. In one experiment, applying a power cap to a group of graphics processing units (GPUs) cut their energy use by 20 to 30 percent with minimal impact on their performance. The cap also lowered the GPUs' operating temperature, which extends their lifespan and makes them easier to cool.
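The savings follow from the fact that energy is average power multiplied by runtime: a cap that lowers power draw by more than it lengthens a job still reduces total energy. The sketch below shows only that arithmetic. `relative_energy` is a hypothetical helper, and the example ratios are made up for illustration, not figures from the LLSC experiment.

```python
def relative_energy(power_fraction: float, runtime_fraction: float) -> float:
    """Energy of a power-capped run relative to the uncapped run.

    Energy = average power x runtime, so the energy ratio is the product of
    the power ratio and the runtime ratio (both capped / uncapped).
    Inputs are hypothetical, not measurements from the LLSC experiment.
    """
    if power_fraction <= 0 or runtime_fraction <= 0:
        raise ValueError("ratios must be positive")
    return power_fraction * runtime_fraction


# Hypothetical example: the cap lowers average draw to 70% of the uncapped
# value, while the job takes 5% longer to finish.
ratio = relative_energy(0.70, 1.05)
print(f"energy saved: {1 - ratio:.1%}")
```

As long as the product stays below 1, the capped run uses less energy overall, which is why a modest slowdown can be an acceptable trade.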

We have also changed our own behavior to be more climate-aware. Just as some people choose renewable energy or smart scheduling at home, we use similar techniques at the LLSC, such as training AI models when temperatures are cooler or when local grid demand is low.
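Scheduling around the grid can be framed as picking the start time whose forecast carbon intensity, summed over the job's duration, is lowest. Here is a minimal sketch, assuming an hourly forecast is available; `best_start_hour` and the forecast values are hypothetical, and the interview does not describe how the LLSC's own scheduling works.

```python
from typing import Sequence


def best_start_hour(forecast: Sequence[float], job_hours: int) -> int:
    """Return the start index that minimises total forecast carbon intensity.

    `forecast` is a hypothetical hourly grid carbon-intensity forecast
    (e.g. gCO2/kWh); `job_hours` is how long the job runs.
    """
    if not 0 < job_hours <= len(forecast):
        raise ValueError("job must fit inside the forecast window")
    # Sliding window: sum for the first feasible start, then update in O(1)
    # per step by adding the hour that enters and removing the one that leaves.
    window = sum(forecast[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


# Made-up forecast in which intensity dips for a few hours.
forecast = [420, 410, 380, 300, 290, 310, 400, 450]
print(best_start_hour(forecast, job_hours=3))
```

The same search would work with a temperature forecast in place of grid intensity, since both are just per-hour costs to minimise.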

We also realized that much of the energy spent on computing is wasted. To address this, we developed techniques that monitor computing workloads as they run and terminate those that are unlikely to produce useful results. Surprisingly, in many cases a significant share of the computation could be stopped early without compromising the final result.
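One common way to decide that a training run is "unlikely to yield good results" is a median-stopping rule: stop a run if its best validation loss so far is worse than the median loss that finished runs had at the same checkpoint. The sketch below implements that heuristic as an illustration only; the interview does not say which criteria the LLSC's monitoring uses, and `should_stop` is a hypothetical name.

```python
from statistics import median
from typing import Sequence


def should_stop(current: Sequence[float],
                completed: Sequence[Sequence[float]]) -> bool:
    """Median-stopping rule on validation loss (lower is better).

    `current` holds the running job's losses at checkpoints 0..t.
    Each entry of `completed` holds a finished run's losses per checkpoint.
    """
    if not current:
        return False  # no checkpoint reported yet
    t = len(current) - 1
    # Losses of finished runs at the same checkpoint t.
    peers = [run[t] for run in completed if len(run) > t]
    if not peers:
        return False  # nothing to compare against
    return min(current) > median(peers)


completed = [[1.0, 0.8, 0.6], [1.1, 0.9, 0.7], [0.9, 0.7, 0.5]]
print(should_stop([1.3, 1.2], completed))   # lagging run
print(should_stop([0.9, 0.75], completed))  # competitive run
```

The rule is deliberately conservative: a run is only stopped when it trails the typical finished run at the same point, so promising runs keep going.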

Q: Can you provide an example of a project that reduces energy consumption in a generative AI program?

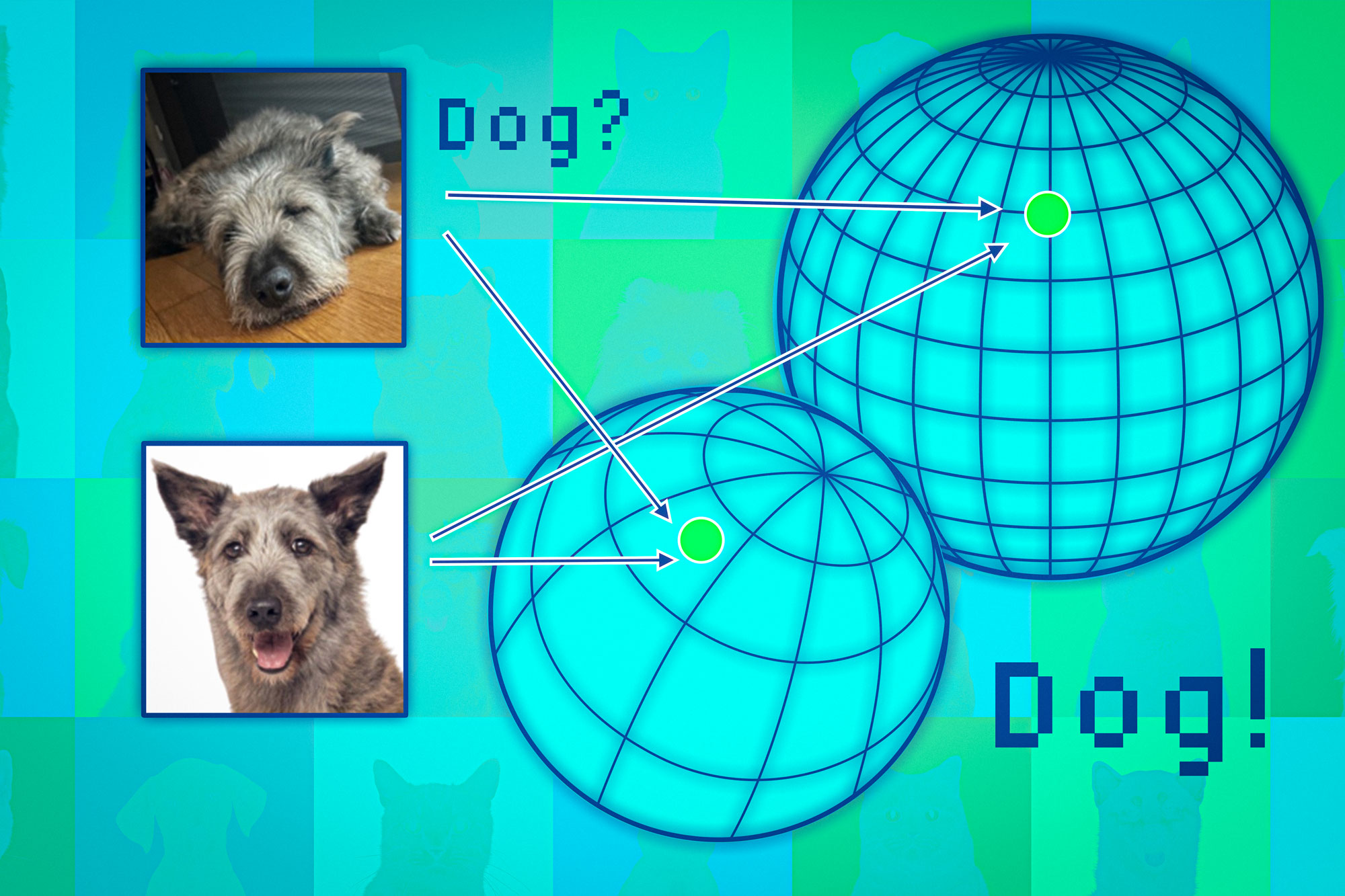

A: We recently built a climate-aware computer vision tool. Computer vision is the field that applies AI to images, for example to tell cats from dogs, label objects, or identify components of interest within an image.

The tool includes real-time carbon telemetry, which reports the carbon intensity of our local grid while the model is running. With this information, the system automatically switches to a more energy-efficient model with fewer parameters when carbon intensity is high, and to a larger, higher-fidelity model when carbon intensity is low.
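The switching logic described above can be sketched as a threshold on the current carbon-intensity reading. This is a minimal illustration under stated assumptions: the two `ModelVariant` entries, their parameter counts, the 300 gCO2/kWh threshold, and the telemetry samples are all hypothetical, not details of the LLSC tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVariant:
    name: str               # hypothetical label
    params_millions: float  # hypothetical size, for illustration only


# Hypothetical variants; the LLSC tool's actual models are not specified.
SMALL = ModelVariant("small", 5.0)
LARGE = ModelVariant("large", 80.0)


def choose_model(carbon_intensity: float,
                 threshold: float = 300.0) -> ModelVariant:
    """Use the smaller model when grid carbon intensity (gCO2/kWh) is high.

    `threshold` is an illustrative cut-off, not a value from the interview.
    """
    return SMALL if carbon_intensity >= threshold else LARGE


readings = [250.0, 320.0, 410.0, 280.0]  # made-up telemetry samples
for reading in readings:
    print(reading, choose_model(reading).name)
```

A production system would likely add hysteresis (separate up and down thresholds) so that readings hovering near the cut-off don't cause the model to switch back and forth constantly.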

Over one to two days, this approach reduced carbon emissions by nearly 80 percent. We have since extended the idea to other generative AI tasks, such as text summarization, with similar results. Interestingly, in some cases performance even improved after applying our technique.

Q: How can consumers of generative AI help mitigate its environmental impact?

A: As consumers, we should push AI providers for greater transparency. Google Flights, for example, shows the carbon footprint of each flight, and we should expect similar information from generative AI tools. That would let us make informed choices based on our own environmental priorities.

We can also become more literate about generative AI emissions. Most people already have an intuitive sense of vehicle emissions, so relatable comparisons help. For example, generating a single image is roughly equivalent to driving four miles in a gasoline-powered car, and charging an electric vehicle takes about as much energy as generating 1,500 text summaries.
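Those two analogies are simple ratios, so they scale linearly. The sketch below turns them into helpers for rough, order-of-magnitude comparisons; the constants come from the figures quoted above, while the function names are hypothetical, and the results are only as precise as the original rough equivalences.

```python
# Rough ratios quoted in the interview; treat results as order-of-magnitude.
MILES_PER_IMAGE = 4.0            # one generated image ~ 4 miles in a gasoline car
SUMMARIES_PER_EV_CHARGE = 1500   # one EV charge ~ energy for 1,500 text summaries


def image_miles(num_images: int) -> float:
    """Gasoline-car miles roughly equivalent to generating `num_images` images."""
    return num_images * MILES_PER_IMAGE


def summary_ev_charges(num_summaries: int) -> float:
    """EV charges roughly equivalent in energy to `num_summaries` summaries."""
    return num_summaries / SUMMARIES_PER_EV_CHARGE


print(image_miles(25), summary_ev_charges(3000))
```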

In many cases, people would be willing to make trade-offs if they understood the impact of their choices.

Q: What does the future hold?

A: Reducing the climate impact of generative AI is a global problem, and many people and organizations around the world are working toward that shared goal. We're making progress here at Lincoln Laboratory, but there is still much to do. In the long term, data centers, AI developers, and energy grids will need to work together on "energy audits" that uncover new opportunities to make computing more efficient. Building more partnerships and collaborations will be essential to moving forward.

To learn more about this topic or to collaborate with Lincoln Laboratory on these efforts, contact Vijay Gadepally.

Photo credit & article inspired by: Massachusetts Institute of Technology