Creating lifelike 3D models for fields such as virtual reality, filmmaking, and engineering is often a tedious, trial-and-error process. Generative AI can now produce vivid 2D images from simple text prompts, but generating realistic 3D shapes remains a challenge. A technique called Score Distillation tries to close this gap by using 2D image generation models to create 3D shapes, but its results frequently appear blurry or cartoonish.

Researchers at MIT compared the algorithms used for 2D and 3D generation and pinpointed the root cause of the lower-quality 3D models. From there, they devised a simple fix to Score Distillation that produces sharp, high-quality 3D shapes comparable in quality to the best model-generated 2D images.

Other methods try to solve this problem by retraining or fine-tuning the generative AI model, which can be costly and time-consuming. The MIT team’s technique instead achieves comparable or better 3D quality without any additional training or elaborate post-processing. By identifying the cause of the problem, the researchers have also improved the mathematical understanding of Score Distillation, which could help future work improve it further.

“Now we have a clearer direction, which allows us to seek more efficient solutions for faster and higher-quality results,” notes Artem Lukoianov, a graduate student in electrical engineering and computer science (EECS) and the lead author of the study. “Ultimately, our work could serve as a co-pilot for designers, simplifying the creation of realistic 3D shapes.”

Joining Lukoianov on the paper are Haitz Sáez de Ocáriz Borde from Oxford University, Kristjan Greenewald from the MIT-IBM Watson AI Lab, Vitor Campagnolo Guizilini from the Toyota Research Institute, Timur Bagautdinov from Meta, and senior authors Vincent Sitzmann and Justin Solomon from MIT’s CSAIL. The research is set to be presented at the Conference on Neural Information Processing Systems.

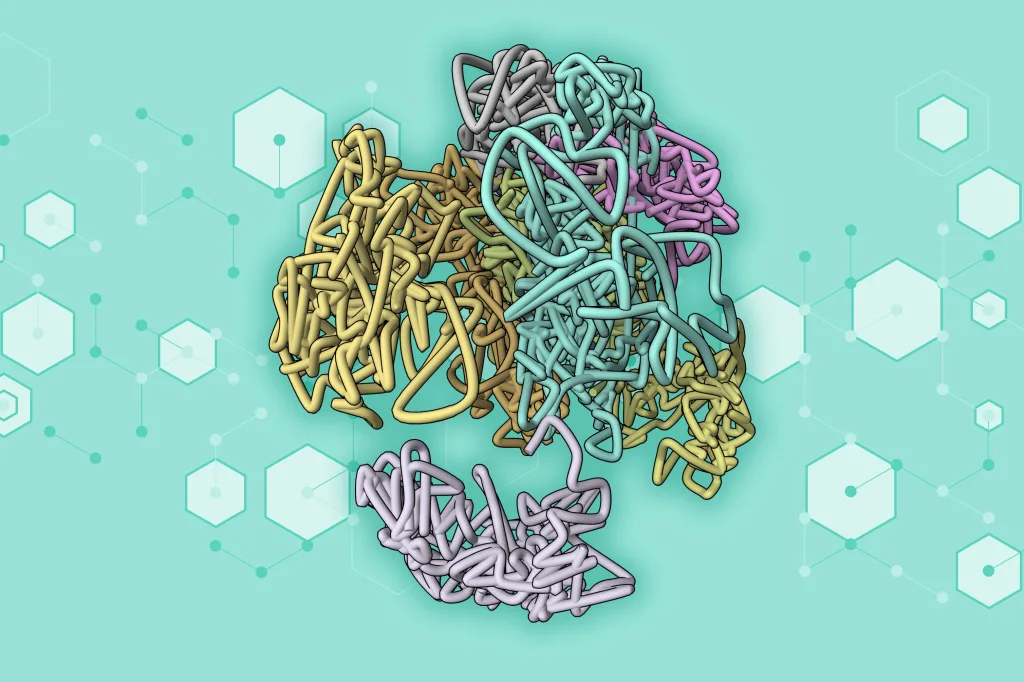

From 2D Images to Striking 3D Shapes

Diffusion models such as DALL-E are a type of generative AI model that can turn random noise into detailed images. To train them, researchers add noise to images and teach the model to reverse the process and remove that noise. The trained model then uses this learned denoising process to create images that match a user's text prompt.
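The noising and denoising idea can be sketched in a few lines. This is a toy illustration only: it assumes the standard variance-preserving blend of signal and Gaussian noise used by many diffusion models, a four-number list stands in for an image, and the names `add_noise`, `remove_noise`, and `alpha_bar` are hypothetical. It does not describe how DALL-E itself is implemented.

```python
import math
import random

def add_noise(x0, alpha_bar, rng):
    """Forward step: blend a clean signal with Gaussian noise.
    alpha_bar close to 1 keeps mostly signal, close to 0 keeps mostly noise."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
          for x, e in zip(x0, eps)]
    return xt, eps

def remove_noise(xt, eps_estimate, alpha_bar):
    """Reverse step: undo the blend, given an estimate of the noise.
    In a real diffusion model, a trained network supplies eps_estimate."""
    return [(x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for x, e in zip(xt, eps_estimate)]

rng = random.Random(0)
clean = [0.2, 0.8, 0.5, 0.1]          # stand-in for image pixels
noisy, true_eps = add_noise(clean, alpha_bar=0.5, rng=rng)

# With a perfect noise estimate the clean signal is recovered (up to rounding).
restored = remove_noise(noisy, true_eps, alpha_bar=0.5)
```

Here the exact noise is handed back to `remove_noise`, so the recovery is perfect. Training a diffusion model amounts to teaching a network to estimate that noise when only the noisy image is available.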

However, these models struggle with generating realistic 3D shapes due to the scarcity of available 3D training data. To tackle this limitation, researchers introduced Score Distillation Sampling (SDS) in 2022, which leverages a pretrained diffusion model to synthesize 3D representations from available 2D images.

The technique starts with a random 3D representation and renders a 2D view of the object from a randomly chosen camera angle. It adds noise to that image, uses the diffusion model to denoise it, and then optimizes the 3D representation so that its rendering matches the denoised image. These steps repeat until the target 3D object emerges. Unfortunately, the resulting shapes often lack clarity and tend to look blurry or oversaturated.
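The loop described above can be sketched with a deliberately tiny stand-in. Everything here is an assumption made for illustration: the "3D model" is a list of three numbers, a "camera angle" is just an index into that list, and `toy_denoiser` is a hypothetical oracle that already knows the target, where a real system would call a pretrained image diffusion model. The update uses the predicted noise minus the sampled noise, which is the usual form of the SDS step with its weighting factor omitted.

```python
import math
import random

TARGET = [0.9, 0.1, 0.5]   # stand-in for "what the prompt describes"
ALPHA_BAR = 0.5            # one fixed noise level, for simplicity

def toy_denoiser(noisy_pixel, view):
    """Hypothetical oracle that predicts the noise inside a noisy pixel.
    A real pipeline would query a pretrained diffusion model instead."""
    signal = math.sqrt(ALPHA_BAR) * TARGET[view]
    return (noisy_pixel - signal) / math.sqrt(1.0 - ALPHA_BAR)

def sds_toy(steps=600, lr=0.2, seed=0):
    rng = random.Random(seed)
    shape = [rng.random() for _ in TARGET]      # random initial "3D model"
    for _ in range(steps):
        view = rng.randrange(len(shape))        # pick a random camera angle
        pixel = shape[view]                     # "render" that view
        eps = rng.gauss(0.0, 1.0)               # randomly sampled noise
        noisy = (math.sqrt(ALPHA_BAR) * pixel
                 + math.sqrt(1.0 - ALPHA_BAR) * eps)
        eps_pred = toy_denoiser(noisy, view)    # model's guess at the noise
        shape[view] -= lr * (eps_pred - eps)    # nudge the model for this view
    return shape

result = sds_toy()
```

Because the oracle in this toy is perfect, the sampled noise cancels out of every update and the parameters settle on the target. With a real, imperfect diffusion model that cancellation does not happen, so this sketch shows only the structure of the loop, not the blurriness the article describes.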

“This has been a persistent bottleneck. We knew the underlying model should yield better results, but the reason for these poor-quality 3D shapes was unclear,” explains Lukoianov.

By examining the steps of SDS closely, the MIT team discovered a mismatch between a key equation in the 3D generation process and its counterpart in 2D diffusion models. This equation tells the model how to update the random 3D representation, one step at a time. Part of it involves a formula that is too complex to solve efficiently, so SDS substitutes randomly sampled noise at each step. The researchers found that this random noise is what causes the soft, blurry look of the generated 3D shapes.
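For readers who want to see the quantity in question, the SDS update is commonly written in the form below, following the 2022 paper that introduced the technique. The notation is an assumption layered on top of this article and may differ from the exact form the MIT team analyzed: $\theta$ denotes the parameters of the 3D representation, $g$ the renderer, $c$ the camera, $y$ the text prompt, $\epsilon_\phi$ the pretrained diffusion model's noise prediction, and $w(t)$ a weighting over noise levels.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
= \mathbb{E}_{t,\,\epsilon,\,c}\!\left[\, w(t)\,\bigl(\epsilon_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right],
\qquad x = g(\theta, c), \quad x_t = \alpha_t\, x + \sigma_t\, \epsilon, \quad \epsilon \sim \mathcal{N}(0, I)
$$

The term $\epsilon$ is the noise that SDS draws at random on every step.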

Finding an Approximate Solution

Rather than trying to solve this intricate formula exactly, the researchers explored approximation techniques. Their breakthrough was a method that infers the missing noise term from the current rendering of the 3D shape instead of sampling it at random.

“This adjustment, as predicted in our analysis, allows for the generation of 3D shapes that appear sharp and realistic,” says Lukoianov.

The team also increased the rendering resolution and adjusted some model parameters to further improve the quality of the 3D shapes. As a result, they were able to use an off-the-shelf, pretrained image diffusion model to create smooth, realistic 3D objects without costly retraining, with quality comparable to that of other methods that rely on ad hoc solutions.

“Previously, experimental parameter adjustments were hit-or-miss. Now that we’ve identified the key equation, we can pursue more efficient resolution strategies,” he adds.

Because their method relies on a pretrained diffusion model, it inherits that model’s biases and limitations and can produce inaccurate results. Improving the underlying diffusion model would, in turn, improve their approach.

The researchers also want to explore how these insights could improve image editing techniques.

This research is funded by the Toyota Research Institute, the U.S. National Science Foundation, and the Singapore Defense Science and Technology Agency, among others.

Photo credit & article inspired by: Massachusetts Institute of Technology