From cleaning up messes to serving delicious dishes, robots are being programmed to tackle an array of intricate household chores. Many of these robotic trainees are learning through imitation, where they mimic actions demonstrated by a human mentor.

Interestingly, robots excel at imitation. However, without engineers programming them to adapt to unexpected bumps or nudges, these robots often must restart a task if something goes awry.

Now, engineers at MIT are working to imbue robots with a touch of common sense to help them navigate unplanned disruptions during tasks. They have developed an innovative method that integrates robot motion data with the intuitive problem-solving capabilities of large language models (LLMs).

This groundbreaking approach allows robots to dissect various household tasks into manageable subtasks. As a result, robots can adapt to interruptions without needing to restart the entire task, eliminating the necessity for engineers to code fixes for every conceivable mishap.

“While imitation learning is a popular method for training household robots, blindly copying a human’s motions can lead to cumulative errors that derail task execution,” explains Yanwei Wang, a graduate student in MIT’s Department of Electrical Engineering and Computer Science (EECS). “Our method enables robots to self-correct execution errors, enhancing overall task success.”

Wang and his team outline their new method in a study set to be presented at the International Conference on Learning Representations (ICLR) in May. Co-authors include graduate students Tsun-Hsuan Wang and Jiayuan Mao, postdoc Michael Hagenow from MIT’s Department of Aeronautics and Astronautics (AeroAstro), and Julie Shah, the H.N. Slater Professor in Aeronautics and Astronautics at MIT.

Understanding Tasks

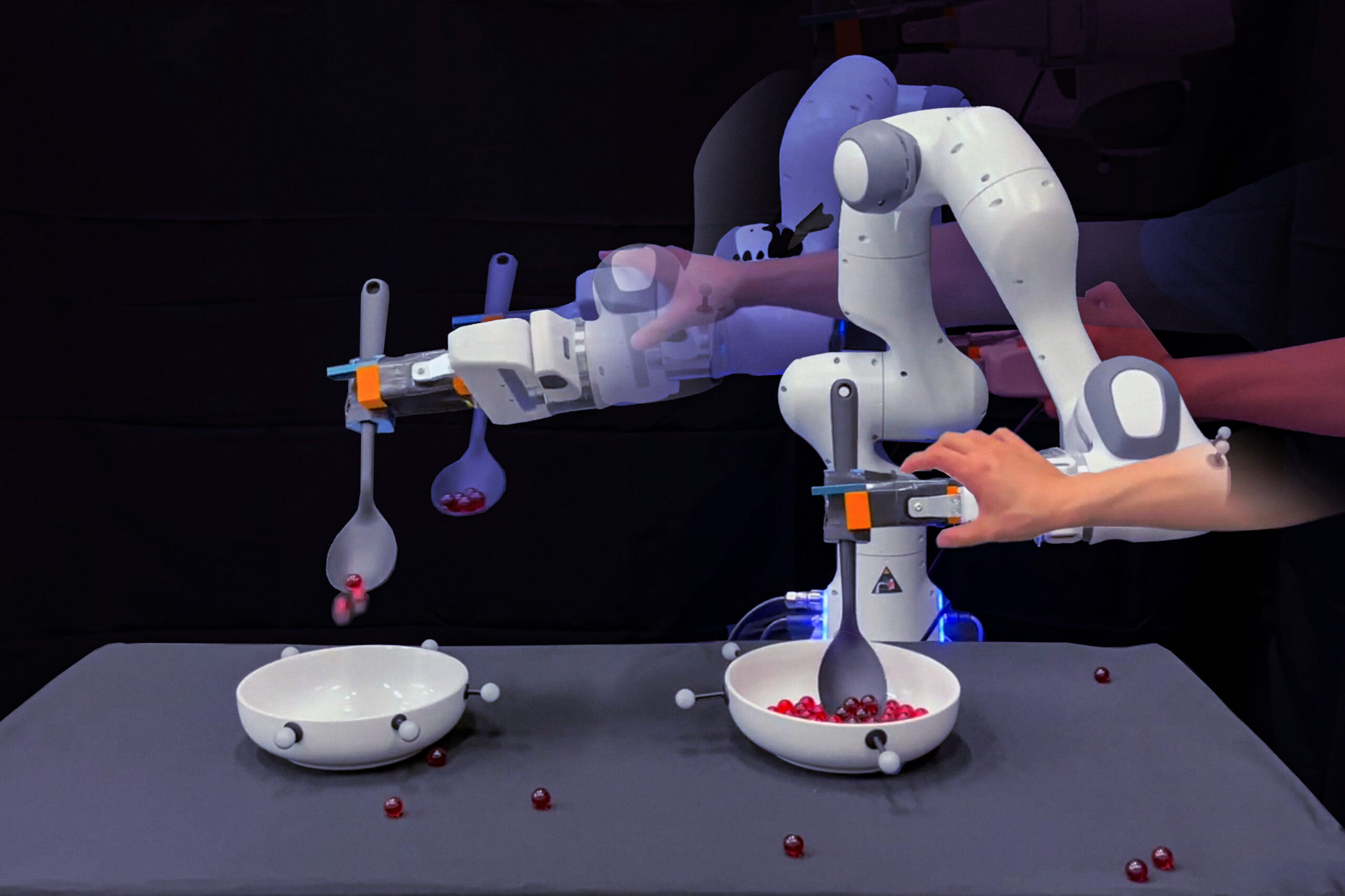

The researchers demonstrate their approach using a relatively simple chore: scooping marbles from one bowl and pouring them into another. Typically, engineers would guide a robot through the complete motion—scooping and pouring in one fluid trajectory—multiple times to provide enough examples for mimicry.

“However, a human’s demonstration is one extended, continuous motion,” Wang notes.

The team recognized that, although a human might showcase a task in one go, it involves a series of subtasks, or trajectories. The robot must, for example, reach into the bowl before scooping, and it must scoop marbles before transporting them to the empty bowl. If the robot encounters a bump or a nudge, it usually has to stop and restart unless engineers explicitly define each subtask and program the robot to correct its course.

“That level of planning can be quite tedious,” Wang adds.

Instead, the researchers realized that LLMs could automate part of this process. These deep learning models analyze vast amounts of text data to connect words and generate new sentences based on learned relationships. Remarkably, they also can produce a logical sequence of subtasks for any given task. If queried about the steps to scoop marbles, an LLM might generate actions like “reach,” “scoop,” “transport,” and “pour.”

“LLMs excel at describing the steps needed to accomplish a task in natural language, while a human’s physical demonstration embodies those steps in real-world actions,” Wang explains. “Our goal was to merge these two realms so that a robot could understand its current task stage and replan if needed.”

Connecting the Dots

For this innovative method, the team created an algorithm to link an LLM’s natural language labels for subtasks with the robot’s physical location or state image. This process, known as “grounding,” allows the algorithm to learn a “classifier” that automatically identifies the subtask a robot is undertaking—be it “reach” or “scoop”—by its coordinates or images.

“This grounding classifier establishes a dialogue between the robot’s physical actions and the subtasks defined by the LLM, along with the specific conditions to consider within each subtask,” Wang elaborates.

The team tested their approach using a robotic arm trained for the marble-scooping task. Initially, they physically guided the robot through each step: reaching for the bowl, scooping marbles, carrying them to another bowl, and pouring. After several demonstrations, they employed a pretrained LLM to outline the necessary steps for transferring marbles. Their grounding algorithm then mapped the LLM’s subtasks to the robot’s motion trajectory data.

Upon allowing the robot to perform the scooping task independently, experimenters nudged the robot off course while it was working. Rather than freezing or resuming blindly, the robot adeptly self-corrected, ensuring it completed each subtask before progressing. For instance, it confirmed that it had effectively scooped the marbles before attempting to transport them.

“With our method, when robots encounter errors, we no longer need to request additional programming or demonstrations to correct failures,” Wang states. “That’s incredibly exciting because there’s considerable interest in training household robots using data from teleoperation systems. Our algorithm translates this training data into dependable robot behavior capable of performing complex tasks amidst external perturbations.”

Photo credit & article inspired by: Massachusetts Institute of Technology