Effective communication through natural language hinges on several key factors: comprehending words within their context, trusting that the information shared is genuine, reasoning about the content, and applying it to real-world situations. A group of MIT PhD students — Athul Paul Jacob SM ’22, Maohao Shen SM ’23, Victor Butoi, and Andi Peng SM ’23 — at the MIT-IBM Watson AI Lab are dedicated to enhancing the reliability and accuracy of AI systems by addressing these aspects in natural language models.

Jacob’s research focuses on improving the outputs of existing natural language models through game theory principles. He highlights two primary interests: understanding human behavior using multi-agent systems and language comprehension, and leveraging these insights to develop better AI systems. His work is inspired by the board game “Diplomacy,” where his team created a system that predicts human behavior and negotiates strategically to attain optimal outcomes.

“In ‘Diplomacy,’ trust and effective communication are crucial,” explains Jacob. Unlike traditional games such as poker and Go, where opponents operate independently, “Diplomacy” requires players to interact with multiple parties simultaneously. This raised several research challenges, such as modeling human behavior and recognizing when humans might act irrationally. Collaborating with mentors from the MIT Department of Electrical Engineering and Computer Science (EECS) and the MIT-IBM Watson AI Lab, Jacob’s team reframed language generation as a two-player game.

By employing “generator” and “discriminator” models, Jacob’s team devised a natural language system capable of generating answers to questions and evaluating their correctness. Correct answers earn points for the AI, while incorrect ones receive none. Language models are known to hallucinate, which makes them less reliable. Jacob’s approach uses a no-regret learning algorithm that promotes more truthful and trustworthy responses while staying close to the pre-trained language model’s existing knowledge. Applied to smaller language models, the technique shows the potential to make them competitive with much larger models.
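To make the idea of a generator and a discriminator learning to agree more concrete, here is a toy sketch in Python. It is not the team's published algorithm: it uses the standard Hedge (multiplicative weights) no-regret update, started from each player's initial policy so that the result stays close to what the models already believed. The function names, the three candidate answers, and all of the probabilities are assumptions made up for illustration.

```python
import math

def hedge_policy(prior, cumulative_payoff, eta):
    """Hedge with a non-uniform start: weight_i is proportional to
    prior_i * exp(eta * cumulative_payoff_i). Priors must be > 0."""
    logits = [math.log(p) + eta * u for p, u in zip(prior, cumulative_payoff)]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - peak) for x in logits]
    total = sum(weights)
    return [w / total for w in weights]

def play_consensus_game(gen_prior, disc_prior, rounds=100, eta=0.5):
    """Toy two-player agreement game (hypothetical setup).

    gen_prior[v][i]:  P(generator proposes candidate i | label v)
    disc_prior[i][v]: P(discriminator assigns label v | candidate i)
    Label v = 1 means "correct", v = 0 means "incorrect".
    """
    n = len(disc_prior)
    gen = [list(row) for row in gen_prior]
    disc = [list(row) for row in disc_prior]
    gen_payoff = [[0.0] * n for _ in range(2)]
    disc_payoff = [[0.0, 0.0] for _ in range(n)]
    for _ in range(rounds):
        for v in range(2):
            for i in range(n):
                # The generator is rewarded when the discriminator reads
                # label v back from candidate i.
                gen_payoff[v][i] += disc[i][v]
                # The discriminator is rewarded when label v is the one
                # the generator intended for candidate i.
                disc_payoff[i][v] += gen[v][i]
        # Both players update simultaneously from the accumulated payoffs.
        gen = [hedge_policy(gen_prior[v], gen_payoff[v], eta) for v in range(2)]
        disc = [hedge_policy(disc_prior[i], disc_payoff[i], eta) for i in range(n)]
    return gen, disc

# Hypothetical candidates for "What is the capital of France?"
candidates = ["Paris", "Lyon", "Rome"]
gen_prior = [[0.2, 0.4, 0.4],   # asked for an incorrect answer
             [0.5, 0.3, 0.2]]   # asked for a correct answer
disc_prior = [[0.3, 0.7],       # Paris: [P(incorrect), P(correct)]
              [0.6, 0.4],       # Lyon
              [0.7, 0.3]]       # Rome

gen, disc = play_consensus_game(gen_prior, disc_prior)
best = max(range(len(candidates)), key=lambda i: gen[1][i])
print("Agreed answer:", candidates[best])
```

In this toy setting, the two players reinforce each other: the answer that both already lean toward gains weight in each round, while the starting policies act as a prior that the update never fully discards.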

Confidence and accuracy in language models often misalign, leading to overconfidence in erroneous outputs. Maohao Shen and his team, under the guidance of Gregory Wornell and several IBM Research collaborators, are focused on addressing this issue through uncertainty quantification (UQ). “Our project aims to recalibrate poorly calibrated language models,” Shen explains. They transform language model outputs into a multiple-choice classification task, asking models to validate their answers as “yes, no, or maybe,” thereby assessing their confidence levels.

Shen’s team developed a technique that automatically tunes the confidence of outputs from pre-trained language models. Using ground-truth data, they train an auxiliary model that adjusts the language model’s confidence. “If a model is overconfident in its prediction, we can detect this and reduce its confidence, and vice versa,” says Shen. The method has been evaluated on several benchmark datasets, where it recalibrated model predictions for new tasks with minimal supervision.
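The general idea of lowering or raising a model's confidence using ground-truth labels can be illustrated with temperature scaling, a common and much simpler calibration technique. The sketch below is a stand-in for illustration, not the auxiliary model Shen's team built. The function names and the sample data are assumptions.

```python
import math

def rescale(confidence, temperature):
    """Soften (T > 1) or sharpen (T < 1) a confidence strictly between 0 and 1."""
    logit = math.log(confidence / (1.0 - confidence))
    return 1.0 / (1.0 + math.exp(-logit / temperature))

def negative_log_likelihood(confidences, was_correct, temperature):
    """Average log loss of the rescaled confidences against ground truth."""
    total = 0.0
    for c, ok in zip(confidences, was_correct):
        p = rescale(c, temperature)
        total -= math.log(p if ok else 1.0 - p)
    return total / len(confidences)

def fit_temperature(confidences, was_correct):
    """Pick the temperature on a coarse grid (0.25 to 6.0) with the lowest loss."""
    grid = [0.25 + 0.05 * k for k in range(116)]
    return min(grid, key=lambda t: negative_log_likelihood(confidences, was_correct, t))

# Hypothetical held-out set: the model claims 90% confidence on every
# answer but is right only 7 times out of 10, so it is overconfident.
confidences = [0.9] * 10
was_correct = [True] * 7 + [False] * 3

temperature = fit_temperature(confidences, was_correct)
print("fitted temperature:", temperature)
print("recalibrated confidence:", rescale(0.9, temperature))
```

A fitted temperature above 1 pulls confidences toward 0.5, which is what an overconfident model needs. A temperature below 1 does the opposite for an underconfident one.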

Meanwhile, Victor Butoi’s team aims to boost model capabilities by allowing vision-language models to reason about visual inputs. Collaborating with researchers, including John Guttag, Butoi’s team is developing methods to enhance compositional reasoning—an essential part of decision-making. “AI needs to comprehend problems in a composed manner,” Butoi explains. For example, if a model recognizes that a chair is positioned left of a person, it should understand both entities and directional context.

Vision-language models often struggle with compositional reasoning, but enhanced training techniques, such as low-rank adaptation of large language models (LoRA), are helping improve their capabilities. Using an annotated dataset called Visual Genome, which identifies objects and their relationships within images, Butoi’s team guides models to generate context-aware outputs. This approach simplifies complex reasoning tasks, making it easier for the vision-language model to draw necessary conclusions.
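For readers unfamiliar with LoRA, the core mechanism is small enough to sketch in plain Python. A frozen weight matrix W is left untouched, and only two small matrices, A and B, are trained. Their product, scaled by alpha divided by the rank, is added to W's output. This is a minimal illustration of the general technique, not the training code used by Butoi's team. The helper names, matrix sizes, and values are assumptions.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_init(d_out, d_in, rank, seed=0):
    """A starts as small random values and B as zeros, so the adapter
    initially leaves the frozen layer's output unchanged."""
    rng = random.Random(seed)
    a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
    b = [[0.0] * rank for _ in range(d_out)]
    return a, b

def lora_forward(x, w_frozen, a, b, alpha):
    """Return W x + (alpha / rank) * B (A x) for an input vector x."""
    rank = len(a)
    column = [[value] for value in x]
    base = matmul(w_frozen, column)
    update = matmul(b, matmul(a, column))
    scale = alpha / rank
    return [base_row[0] + scale * update_row[0]
            for base_row, update_row in zip(base, update)]

def trainable_parameters(d_out, d_in, rank):
    """Count the entries of A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)

# Hypothetical 2x2 layer with a rank-1 adapter.
w = [[1.0, 2.0], [3.0, 4.0]]
a, b = lora_init(d_out=2, d_in=2, rank=1)
print(lora_forward([1.0, 1.0], w, a, b, alpha=2.0))
print(trainable_parameters(768, 768, rank=8), "adapter values vs", 768 * 768, "frozen")
```

The appeal of the design is in the parameter count: for a 768-by-768 layer, a rank-8 adapter trains 12,288 values instead of 589,824, which makes fine-tuning on a dataset such as Visual Genome far cheaper than updating every weight.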

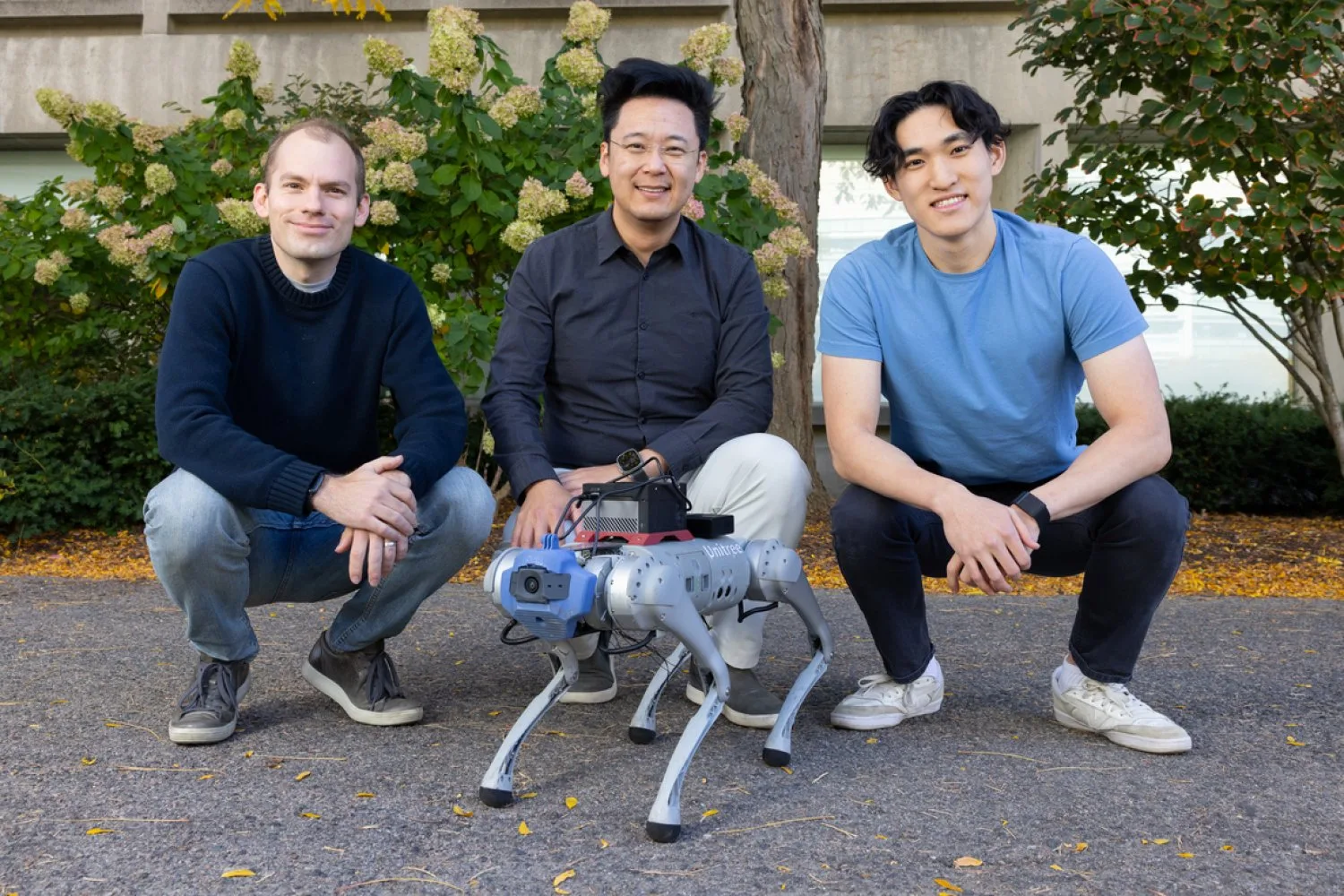

Andi Peng’s focus is on robotics, specifically aiding individuals with physical disabilities through virtual environments. Collaborating with mentors Julie Shah and Chuang Gan, Peng’s team is developing two embodied AI models within a simulated space called ThreeDWorld. One model represents a human needing assistance, while the other acts as a helper agent. By harnessing semantic knowledge from large language models, they aim to enhance the helper’s decision-making capabilities, facilitating effective human-robot interactions.

“AI systems should be designed for human users,” emphasizes Peng, highlighting the importance of conveying human knowledge in interactions. “Our goal is to ensure these systems operate in a way that aligns with human understanding.”

Photo credit & article inspired by: Massachusetts Institute of Technology