Imagine wanting to train a robot so it can adeptly use tools like a hammer, wrench, and screwdriver to make repairs around your home. To achieve this, you’d need a vast amount of data demonstrating effective tool usage.

Currently, robotic datasets are diverse in terms of modalities—some feature color images, while others capture tactile sensations. Additionally, data can be sourced from different environments, such as simulations or demonstrations by humans, with each dataset highlighting specific tasks and contexts.

Integrating data from this plethora of sources into a singular machine-learning model poses a significant challenge. Many approaches resort to training robots with only one type of data, often leading to limitations. Robots that rely on a relatively small and task-centered dataset typically struggle with new tasks in unpredictable settings.

To develop more versatile robots, researchers at MIT have pioneered a method that merges multiple data sources across various domains and modalities using a kind of generative AI called diffusion models.

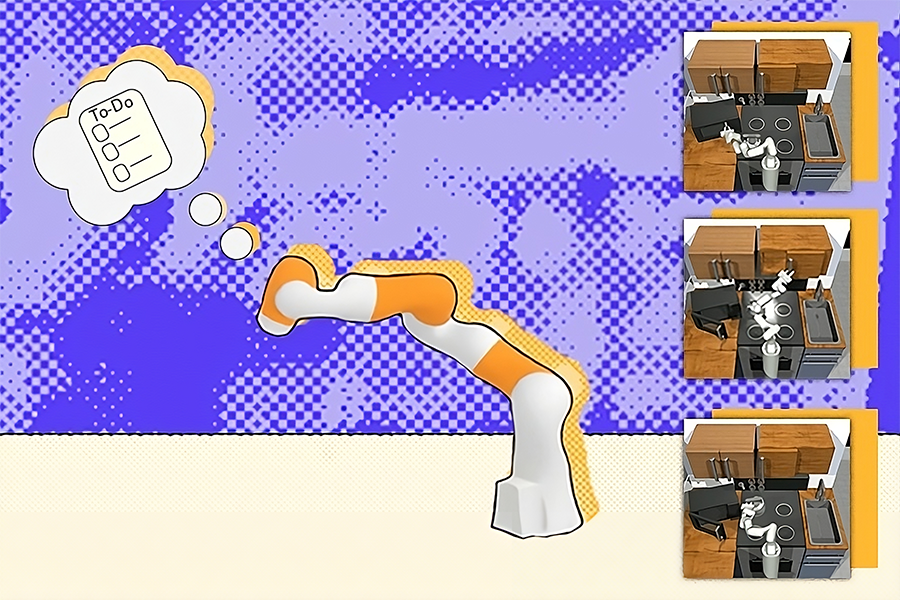

The team initiates the process by training individual diffusion models to devise strategies, known as policies, for completing specific tasks with unique datasets. Subsequently, they amalgamate these learned policies into a comprehensive general policy, allowing the robot to perform numerous tasks across different environments.

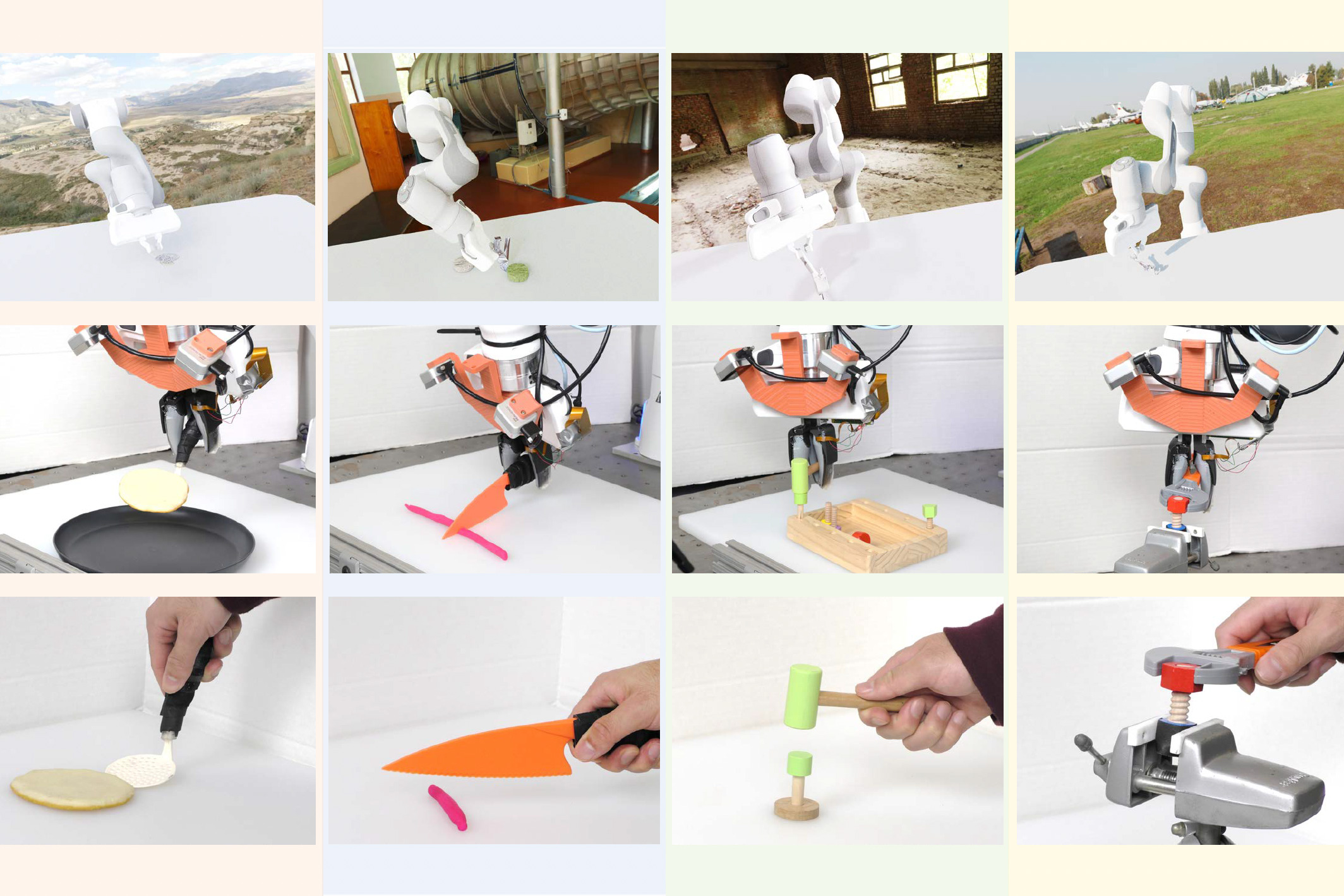

In both simulations and real-world experiments, this innovative training approach facilitated the robot’s ability to execute various tool-related tasks and adapt to new challenges not encountered during training. Termed Policy Composition (PoCo), this method yielded a remarkable 20% improvement in task performance compared to conventional techniques.

“The challenge of dealing with diverse robotic datasets is akin to a chicken-and-egg dilemma. To utilize extensive data for training adaptable robot policies, we first need operational robots to gather this data. Leveraging diverse data sources, similar to methodologies applied in ChatGPT, is essential for advancing the field of robotics,” explains Lirui Wang, an electrical engineering and computer science graduate student and the lead author of a study on PoCo.

Wang’s colleagues include Jialiang Zhao, a graduate student in mechanical engineering; Yilun Du, an EECS graduate student; Edward Adelson, the John and Dorothy Wilson Professor specializing in Vision Science in the Department of Brain and Cognitive Sciences, as well as a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL); and senior author Russ Tedrake, the Toyota Professor of EECS, Aeronautics, Astronautics, and Mechanical Engineering, who is also part of CSAIL. This groundbreaking research will be showcased at the Robotics: Science and Systems Conference.

Integrating Diverse Datasets

A robotic policy refers to a machine-learning model that interprets inputs and executes corresponding actions. You might envision a robotic arm’s policy as a trajectory—a sequence of movements enabling it to grasp a hammer and drive a nail.

The datasets allocated for developing robotic policies are frequently limited, focusing on singular tasks and environments, such as sorting package boxes in a warehouse.

“Robotic installations in warehouses generate terabytes of data, but this information is confined to that specific robot handling those items. This scenario is far from ideal for training a general-purpose machine,” remarks Wang.

MIT’s researchers conceived a strategy that can assimilate smaller datasets, like those sourced from multiple robotic warehouses, allowing for the learning of distinct policies from each and their eventual integration to enable a robot’s adaptability across tasks.

Each policy is illustrated using diffusion models. These generative AI models, commonly employed for image creation, learn to produce new data samples that mirror those in a training dataset by incrementally adjusting their output.

However, instead of training a diffusion model for image generation, the researchers focused on programming it to formulate trajectories for robotic actions. They accomplish this by introducing noise to the training data’s trajectories; the diffusion model then systematically eliminates the noise, refining its output into an accurate trajectory.

This technique, referred to as Diffusion Policy, was initially proposed by a team from MIT, Columbia University, and the Toyota Research Institute, and PoCo enhances this foundational work.

The team trains each diffusion model on different dataset types, such as those derived from human video demonstrations and those obtained from the teleoperation of robotic arms.

Afterwards, researchers perform a weighted combination of the individual policies acquired from the diffusion models, iteratively fine-tuning the output to ensure it aligns with the objectives of each policy.

Greater than the Sum of Its Parts

“One of the significant advantages of this strategy is its ability to merge policies, thus harnessing the strengths of each. For example, a policy based on real-world data may exhibit superior dexterity, whereas one trained in simulations could demonstrate broader adaptability,” Wang elaborates.

Image: Courtesy of the researchers

With separate training for each policy, researchers can creatively mix and match diffusion policies for optimized results on specific tasks. Users can introduce new modalities or domains by training additional Diffusion Policies with new datasets, thus avoiding the necessity to restart the entire training cycle.

Image: Courtesy of the researchers

The researchers have applied PoCo both in simulations and with real robotic arms tasked with various functions, including using a hammer to drive a nail and flipping items with a spatula. This technique resulted in a substantial 20% enhancement in task execution compared to traditional methods.

“Notably, after tuning and visualizing the results, we clearly observed that the composed trajectory was significantly superior to either individual approach,” Wang mentions.

Looking ahead, the research team aims to extend this method to longer-horizon tasks where the robot would pick up one tool, utilize it, and then transition to another. They also plan to integrate larger datasets to further refine performance.

“To truly succeed in robotics, we require a comprehensive mix of data types: internet data, simulation data, and real robot data. The critical challenge will be efficiently combining these assets. PoCo represents a solid advancement toward that goal,” remarks Jim Fan, a senior research scientist at NVIDIA and leader of the AI Agents Initiative, who was not affiliated with this research.

This groundbreaking research is partially funded by Amazon, the Singapore Defense Science and Technology Agency, the U.S. National Science Foundation, and the Toyota Research Institute.

Photo credit & article inspired by: Massachusetts Institute of Technology