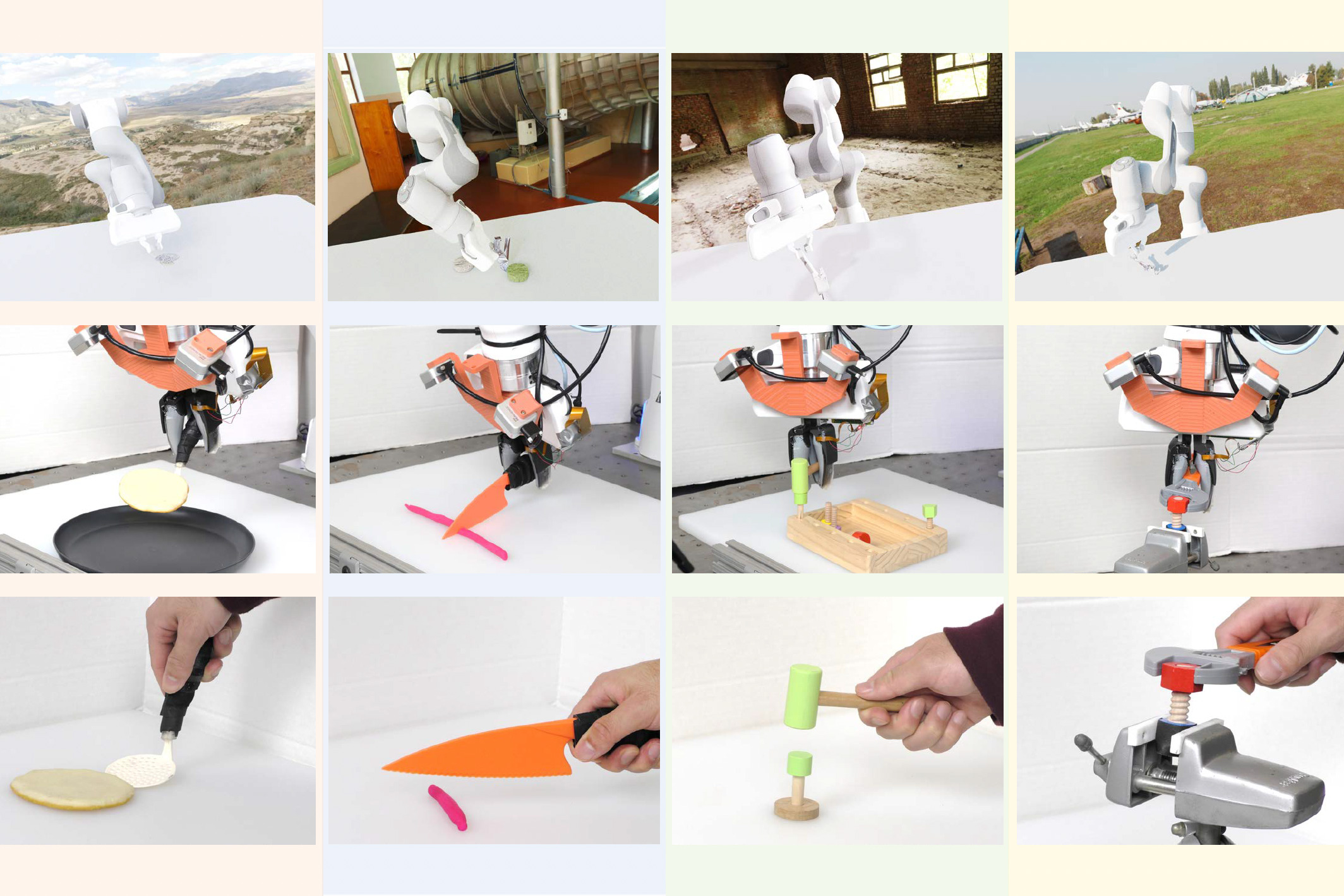

When water freezes, it undergoes a dramatic transition from a liquid to a solid, which significantly changes properties such as density and volume. Phase transitions in water are so common that most people take them for granted, but phase transitions in novel materials and complex physical systems are an important area of study.

To gain insights into these systems, it’s essential for scientists to identify different phases and detect transitions between them. However, quantifying these changes in an uncharted system can be challenging, especially when data is limited.

Researchers from MIT and the University of Basel in Switzerland have turned to generative artificial intelligence models to tackle this problem. They have developed a machine-learning framework that can automatically map out phase diagrams for novel physical systems.

Their physics-informed machine-learning approach is more efficient than laborious manual techniques that rely on theoretical expertise. Notably, because the approach uses generative models, it does not require the huge labeled training datasets that other machine-learning techniques depend on.

The framework could help scientists investigate the thermodynamic properties of novel materials or detect entanglement in quantum systems. Ultimately, the technique could make it possible for researchers to discover unknown phases of matter autonomously.

“If you have a new system with fully unknown properties, how would you decide which observable quantity to study? The goal, with data-driven tools, is to automatically scan large new systems and identify key changes within them. This could be a significant step in the automated scientific discovery of exotic phase properties,” explains Frank Schäfer, a postdoc in MIT’s Julia Lab within the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of the research paper.

Joining Schäfer in this research are first author Julian Arnold, a graduate student at the University of Basel; Alan Edelman, an applied mathematics professor and leader of the Julia Lab; and senior author Christoph Bruder, a physics professor at the University of Basel. Their findings have been published in Physical Review Letters.

Detecting Phase Transitions with AI Technology

While the transformation of water into ice may be one of the most recognizable examples of phase change, there are several more exotic transitions that captivate scientists, such as when a material changes from being a regular conductor to a superconductor.

These transitions can be identified by an “order parameter,” an important quantity that is expected to change at the transition. For example, water freezes into ice when its temperature drops below 0 degrees Celsius. In that case, a suitable order parameter could be defined as the proportion of water molecules that are part of a crystalline lattice versus those that remain in a disordered state.
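To make the water example concrete, the short Python sketch below computes that order parameter for a single snapshot. It assumes, hypothetically, that every molecule has already been labelled "crystalline" or "disordered" by some upstream analysis; the function name `crystalline_fraction` and the toy snapshots are illustrative only, not part of the research.

```python
from typing import Sequence


def crystalline_fraction(states: Sequence[str]) -> float:
    """Order parameter: fraction of molecules labelled 'crystalline' (hypothetical labels)."""
    if not states:
        raise ValueError("need at least one molecule")
    return sum(1 for s in states if s == "crystalline") / len(states)


# Hypothetical snapshots: liquid-like (mostly disordered) vs. ice-like (mostly ordered).
liquid = ["disordered"] * 9 + ["crystalline"]
ice = ["crystalline"] * 9 + ["disordered"]

print(crystalline_fraction(liquid))  # 0.1
print(crystalline_fraction(ice))     # 0.9
```

The value is low for the liquid-like snapshot and high for the ice-like one; a sharp jump between those regimes as temperature falls is what signals the transition.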

Historically, researchers have relied on physics expertise to build phase diagrams manually, drawing on a theoretical understanding of which order parameters are important. Besides being tedious for complex systems, and perhaps impossible for unknown systems with new behaviors, this approach also introduces human bias into the results.

Recently, machine learning has offered an alternative: discriminative classifiers can learn to assign measurement data to the phase of the physical system that produced it, much as such algorithms learn to tell photos of cats from photos of dogs.

The MIT researchers showed how generative models can solve this classification task more efficiently, and in a physics-informed way.

The Julia programming language, which is popular for scientific computing, offers many tools that make it well suited to building such generative models, Schäfer adds.

Generative models, which underlie systems such as ChatGPT and DALL-E, work by estimating the probability distribution of some data and then using it to generate new data points that fit that distribution, such as new cat images that resemble existing ones.
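As a deliberately tiny illustration of "estimate a distribution, then generate from it," the sketch below fits a categorical distribution by counting how often each outcome appears and then draws new samples from it. The outcome labels and function names are hypothetical; generative models like those behind ChatGPT or DALL-E learn far richer distributions with neural networks, not frequency tables.

```python
import random
from collections import Counter


def fit_categorical(samples):
    """Estimate a probability for each distinct outcome from observed frequencies."""
    counts = Counter(samples)
    total = len(samples)
    return {outcome: count / total for outcome, count in counts.items()}


def generate(model, k, rng):
    """Draw k new outcomes that follow the estimated distribution."""
    outcomes = list(model)
    weights = [model[o] for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=k)


# Hypothetical training data: text labels standing in for images.
data = ["tabby cat", "tabby cat", "black cat", "white cat"]
model = fit_categorical(data)
new_samples = generate(model, k=5, rng=random.Random(0))

print(model)        # {'tabby cat': 0.5, 'black cat': 0.25, 'white cat': 0.25}
print(new_samples)  # five labels drawn with those probabilities
```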

However, when simulations of a physical system based on tried-and-true scientific techniques are available, researchers get a model of its probability distribution for free. This distribution describes the measurement statistics of the physical system.
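For a small enough system, the distribution a simulation provides can even be written down exactly. The sketch below assumes a toy model that the article does not discuss: a ring of N Ising spins (each +1 or -1) with nearest-neighbour coupling J at temperature T, in units where Boltzmann's constant is 1. Its configurations s follow the Boltzmann distribution p(s) = exp(-E(s)/T) / Z with E(s) = -J Σ s_i s_{i+1}. Enumerating all 2^N configurations gives every probability directly, and the same table can be sampled from, so it already acts as a generative model with no training step. (A 1D ring has no true phase transition; it only illustrates the idea.)

```python
import itertools
import math
import random


def ring_energy(spins, coupling=1.0):
    """Ising energy E(s) = -J * sum_i s_i * s_{i+1} on a periodic ring."""
    n = len(spins)
    return -coupling * sum(spins[i] * spins[(i + 1) % n] for i in range(n))


def boltzmann_distribution(n_spins, temperature, coupling=1.0):
    """Exact probability of every spin configuration (k_B = 1)."""
    configs = list(itertools.product((-1, 1), repeat=n_spins))
    weights = [math.exp(-ring_energy(s, coupling) / temperature) for s in configs]
    z = sum(weights)  # partition function
    return {s: w / z for s, w in zip(configs, weights)}


dist = boltzmann_distribution(4, temperature=1.0)

# Evaluate the probability of any configuration exactly...
p_all_up = dist[(1, 1, 1, 1)]

# ...or generate new "measurements" from the same distribution.
rng = random.Random(0)
samples = rng.choices(list(dist), weights=list(dist.values()), k=3)
print(p_all_up, samples)
```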

A More Knowledgeable Model

The MIT team’s key insight is that this probability distribution also defines a generative model on which a classifier can be built. They plug the generative model into standard statistical formulas to construct a classifier directly, instead of learning it from samples as discriminative approaches do.
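One way to picture building a classifier directly from standard statistical formulas: Bayes' rule turns two known distributions, p_low(x) and p_high(x), into the posterior probability that a sample x came from the first one, P(low | x) = p_low(x) π / (p_low(x) π + p_high(x) (1 - π)), where π is the prior probability of "low." The toy Ising ring, the two temperatures, and the function names below are assumptions for illustration; the published framework is more general than this exactly enumerable example.

```python
import itertools
import math


def boltzmann(n_spins, temperature, coupling=1.0):
    """Exact Boltzmann distribution of a periodic 1D Ising ring (toy system, k_B = 1)."""
    configs = list(itertools.product((-1, 1), repeat=n_spins))

    def energy(s):
        return -coupling * sum(s[i] * s[(i + 1) % n_spins] for i in range(n_spins))

    weights = [math.exp(-energy(s) / temperature) for s in configs]
    z = sum(weights)
    return {s: w / z for s, w in zip(configs, weights)}


def posterior_low(sample, p_low, p_high, prior_low=0.5):
    """P(low temperature | sample) via Bayes' rule; nothing is trained."""
    numerator = p_low[sample] * prior_low
    denominator = numerator + p_high[sample] * (1.0 - prior_low)
    return numerator / denominator


# The two "generative models" come straight from the known physics.
p_low = boltzmann(6, temperature=0.5)
p_high = boltzmann(6, temperature=5.0)

ordered = (1, 1, 1, 1, 1, 1)          # aligned spins: typical at low T
alternating = (1, -1, 1, -1, 1, -1)   # maximally disordered: rare at low T

# "Was this sample generated at high or low temperature?"
print(posterior_low(ordered, p_low, p_high))      # well above 0.5
print(posterior_low(alternating, p_low, p_high))  # very close to 0
```

The decision rule is read off from the two distributions rather than fitted to labelled examples, which is the contrast with discriminative approaches.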

“This approach effectively incorporates what you know about your physical system into the machine-learning framework. It extends beyond mere feature engineering or basic inductive biases,” remarks Schäfer.

This generative classifier can determine which phase the system is in for a given value of some parameter, such as temperature or pressure. Because the researchers directly approximate the probability distributions underlying measurements of the physical system, the classifier has built-in knowledge of the system.

This lets their method perform better than other machine-learning techniques. And because it works automatically, without extensive training, the approach significantly improves the computational efficiency of identifying phase transitions.
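One hypothetical way to turn such classifiers into a phase-transition detector is sketched below: compute the exact distributions on a grid of temperatures and, for each pair of neighbouring temperatures, the accuracy of the best possible classifier that tells their samples apart. With equal priors that accuracy is 1/2 + (1/4) Σ_x |p(x) - q(x)|, so it rises wherever the measurement statistics change quickly. The 3×3 periodic Ising lattice, the temperature grid, and this particular indicator are assumptions chosen for illustration, not the researchers' exact procedure; on such a small lattice any peak is only a rough, finite-size proxy for a true transition.

```python
import itertools
import math


def ising2d_energies(size, coupling=1.0):
    """Energy of every configuration of a size x size periodic 2D Ising lattice."""
    energies = []
    for s in itertools.product((-1, 1), repeat=size * size):
        e = 0.0
        for r in range(size):
            for c in range(size):
                spin = s[r * size + c]
                right = s[r * size + (c + 1) % size]
                down = s[((r + 1) % size) * size + c]
                e -= coupling * spin * (right + down)  # each bond counted once
        energies.append(e)
    return energies


def boltzmann(energies, temperature):
    """Exact Boltzmann probabilities (k_B = 1), in the same order as `energies`."""
    weights = [math.exp(-e / temperature) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]


def optimal_accuracy(p, q):
    """Bayes-optimal accuracy for telling samples of p from samples of q, equal priors."""
    return 0.5 + 0.25 * sum(abs(a - b) for a, b in zip(p, q))


energies = ising2d_energies(3)                   # 2**9 = 512 configurations
temps = [0.5 + 0.25 * k for k in range(17)]      # T = 0.5 ... 4.5
dists = [boltzmann(energies, t) for t in temps]
indicator = [optimal_accuracy(dists[k], dists[k + 1]) for k in range(len(temps) - 1)]

best = max(range(len(indicator)), key=indicator.__getitem__)
print(f"statistics change fastest between T={temps[best]} and T={temps[best + 1]}")
```

No model is trained at any point: every number comes from the known distributions, which is what keeps this kind of scan cheap for small systems.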

Much as one might ask ChatGPT to solve a math problem, researchers can pose questions to the generative classifier such as “Does this sample belong to phase I or phase II?” or “Was this sample generated at high or low temperature?”

Researchers could also apply the method to other binary classification tasks in physical systems, for example detecting entanglement in quantum systems (Is this state entangled or not?) or deciding whether theory A or theory B is better suited to a particular problem. The approach might also help researchers better understand and improve large language models like ChatGPT, by identifying how certain parameters should be tuned to get the best outputs.

Looking ahead, the researchers aim to study theoretical guarantees on how many measurements are needed to detect phase transitions effectively, and to estimate how much computation that would require.

This research received support from the Swiss National Science Foundation, the MIT-Switzerland Lockheed Martin Seed Fund, and MIT International Science and Technology Initiatives.

Photo credit & article inspired by: Massachusetts Institute of Technology