Large language models (LLMs) showcase surprising abilities, such as composing poetry or coding complex programs, yet their fundamental training involves simply predicting the next word in a sequence. This raises fascinating questions about whether these models grasp some inherent truths about the world. However, a recent study challenges that notion.

Researchers discovered that a popular type of generative AI model can provide remarkably accurate turn-by-turn driving directions in New York City without having formed an accurate internal map of the city. When the researchers closed some streets and added detours, the model’s performance deteriorated dramatically, which points to the risk of relying on such systems in environments that change.

Upon further investigation, it became clear that the city maps the model had implicitly formed included numerous nonexistent streets and unconventional routes. This has significant implications for AI deployed in real-world applications: a model that appears to perform well in one setting could fail when the task or environment changes even slightly.

“One of the exciting possibilities is leveraging LLMs to unlock new scientific discoveries. However, it’s crucial to ascertain whether these models have genuinely developed coherent world models,” remarks Ashesh Rambachan, senior author of the study, assistant professor of economics, and a principal investigator in MIT’s Laboratory for Information and Decision Systems (LIDS).

Rambachan collaborated on the research with lead author Keyon Vafa, a postdoc at Harvard; Justin Y. Chen, an electrical engineering and computer science graduate student at MIT; Jon Kleinberg, a professor at Cornell University; and Sendhil Mullainathan, an MIT professor in the departments of Electrical Engineering and Computer Science and of Economics. The findings will be presented at the Conference on Neural Information Processing Systems.

Evaluating AI with New Metrics

The research focuses on transformers, the type of model that forms the backbone of LLMs like GPT-4. Transformers are trained on massive amounts of language data to predict the next token in a sequence, such as the next word in a sentence. However, measuring the accuracy of those predictions alone does not reveal whether a model has formed an accurate picture of the world.

For instance, a transformer can predict valid Connect 4 moves almost flawlessly without grasping the rules of the game. To better understand the world models these AIs possess, the researchers devised two new evaluation metrics and applied them to a class of problems called deterministic finite automata (DFAs). A DFA is a problem defined by a set of states and concrete rules for moving from one state to the next.
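To make the idea of a DFA concrete, here is a minimal sketch in Python. The four-intersection map, the state names, and the helper functions `run_dfa` and `valid_moves` are all hypothetical and are not taken from the study. The transition table is also left partial for brevity: a move with no entry is treated as illegal, where a textbook DFA would send it to an explicit dead state.

```python
from typing import Dict, Optional, Sequence, Set, Tuple

# Hypothetical toy map: states are intersections, input symbols are driving moves.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("A", "east"): "B",
    ("A", "south"): "C",
    ("B", "south"): "D",
    ("C", "east"): "D",
}


def run_dfa(start: str, moves: Sequence[str]) -> Optional[str]:
    """Follow the moves from start; return the final state, or None if a move is illegal."""
    state = start
    for move in moves:
        key = (state, move)
        if key not in TRANSITIONS:
            return None  # no such street from this intersection
        state = TRANSITIONS[key]
    return state


def valid_moves(state: str) -> Set[str]:
    """Return every move the rules allow from the given state."""
    return {move for (source, move) in TRANSITIONS if source == state}
```

In this toy map, the routes east-then-south and south-then-east both end at intersection D, so anything that has truly learned the map should treat the two routes as interchangeable from that point on.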

They applied these metrics to two scenarios: navigating New York City streets and playing the board game Othello. The first new metric, “sequence distinction,” checks whether a model that encounters two different states, such as two different Othello boards, recognizes how they differ. The second metric, “sequence compression,” checks whether a model recognizes that two identical states, such as two identical Othello boards reached by different move orders, allow the same set of next moves.
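The two metrics can be illustrated with a deliberately simplified sketch. It assumes a toy map and treats a "model" as a function from a sequence of moves to the set of next moves it would accept. It also compares only the single next move, which is a one-step simplification of the idea and not the study's actual procedure. The names `compression_score`, `distinction_score`, `oracle`, and `path_dependent_model` are all hypothetical.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List, Tuple

Prefix = Tuple[str, ...]
Model = Callable[[Prefix], FrozenSet[str]]

# Hypothetical toy map: intersections as states, driving moves as symbols.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("A", "east"): "B", ("A", "south"): "C",
    ("B", "south"): "D", ("C", "east"): "D",
    ("D", "east"): "E", ("D", "west"): "C",
}


def true_state(prefix: Prefix, start: str = "A") -> str:
    """Ground-truth intersection reached after a legal sequence of moves."""
    state = start
    for move in prefix:
        state = TRANSITIONS[(state, move)]
    return state


def true_next(state: str) -> FrozenSet[str]:
    """Ground-truth set of legal next moves from a state."""
    return frozenset(m for (s, m) in TRANSITIONS if s == state)


def compression_score(model: Model, prefixes: List[Prefix]) -> float:
    """Fraction of same-state prefix pairs that the model treats identically."""
    pairs = [(p, q) for p, q in combinations(prefixes, 2)
             if true_state(p) == true_state(q)]
    if not pairs:
        raise ValueError("no pair of prefixes reaches the same state")
    return sum(model(p) == model(q) for p, q in pairs) / len(pairs)


def distinction_score(model: Model, prefixes: List[Prefix]) -> float:
    """Fraction of truly different prefix pairs that the model tells apart."""
    pairs = [(p, q) for p, q in combinations(prefixes, 2)
             if true_next(true_state(p)) != true_next(true_state(q))]
    if not pairs:
        raise ValueError("no pair of prefixes differs in its legal next moves")
    return sum(model(p) != model(q) for p, q in pairs) / len(pairs)


def oracle(prefix: Prefix) -> FrozenSet[str]:
    """A model with a perfect world model: it answers from the true map."""
    return true_next(true_state(prefix))


def path_dependent_model(prefix: Prefix) -> FrozenSet[str]:
    """Proposes only legal moves, but its answer at D depends on the route taken."""
    if prefix == ("east", "south"):
        return frozenset({"east"})
    if prefix == ("south", "east"):
        return frozenset({"west"})
    return oracle(prefix)


PREFIXES: List[Prefix] = [(), ("east",), ("south",),
                          ("east", "south"), ("south", "east")]
```

Every move `path_dependent_model` proposes is legal, so a check of next-move validity alone would rate it as perfect. It nonetheless scores zero on compression, because it answers differently for two routes that end at the same intersection. That gap between valid output and a coherent underlying map is what the metrics are meant to expose.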

Discoveries: Incoherent World Models

In a surprising turn, the study revealed that transformers trained on randomly generated sequences formed more accurate world models than those trained on sequences produced by following strategies. This could be because the former encountered a broader array of potential moves during their training phase. “Observing less experienced players can expose the AI to a wider range of moves, including those that expert players wouldn’t make,” explains Vafa.

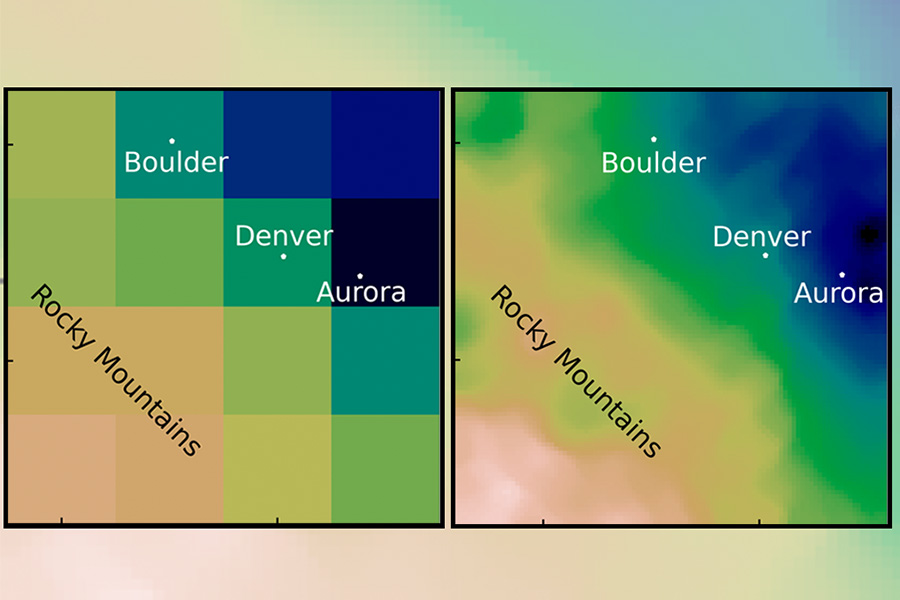

Despite their high success rates in navigation and gaming, the metrics indicated that only one model truly developed a coherent world model in Othello, while none did so effectively for navigation tasks. The researchers further illustrated this issue by adding detours to the map of New York City, which caused a substantial drop in the models’ navigational accuracy.

“I was astonished at how quickly performance dropped when we added even minor detours; accuracy fell from nearly perfect to just 67% with only 1% of streets closed,” Vafa states.
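As a loose analogy for why closures are so damaging, the sketch below compares two hypothetical navigators on a small grid of streets. One replays routes it memorized before any closure, and the other re-plans on the updated map with breadth-first search. The 4-by-4 grid, the choice of closed street, and all function names are assumptions made for illustration. This is not the study's experimental setup, and the numbers it produces have no connection to the 67% figure quoted above.

```python
from collections import deque
from itertools import product
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Node = Tuple[int, int]
Edge = FrozenSet[Node]


def grid_edges(n: int) -> Set[Edge]:
    """Two-way streets of an n-by-n grid of intersections."""
    edges: Set[Edge] = set()
    for r, c in product(range(n), repeat=2):
        if r + 1 < n:
            edges.add(frozenset({(r, c), (r + 1, c)}))
        if c + 1 < n:
            edges.add(frozenset({(r, c), (r, c + 1)}))
    return edges


def shortest_route(edges: Set[Edge], start: Node, goal: Node) -> Optional[List[Node]]:
    """Breadth-first search; returns a list of intersections, or None if unreachable."""
    neighbors: Dict[Node, List[Node]] = {}
    for edge in edges:
        a, b = tuple(edge)
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    parent: Dict[Node, Optional[Node]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            route: List[Node] = []
            current: Optional[Node] = node
            while current is not None:
                route.append(current)
                current = parent[current]
            return route[::-1]
        for nxt in neighbors.get(node, []):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None


def route_is_open(route: List[Node], edges: Set[Edge]) -> bool:
    """True if every street along the route still exists."""
    return all(frozenset({a, b}) in edges for a, b in zip(route, route[1:]))


full_map = grid_edges(4)
nodes = list(product(range(4), repeat=2))
trips = [(a, b) for a in nodes for b in nodes if a != b]

# Navigator 1: memorizes one route per trip before any street is closed.
memorized = {trip: shortest_route(full_map, *trip) for trip in trips}

# Close a single street (hypothetical choice) and compare the two navigators.
closed_street: Edge = frozenset({(0, 0), (0, 1)})
open_map = full_map - {closed_street}

replay_success = sum(route_is_open(memorized[t], open_map) for t in trips) / len(trips)
replan_success = sum(shortest_route(open_map, *t) is not None for t in trips) / len(trips)
```

The navigator that re-plans from the map still reaches every destination, while the one that replays memorized routes fails on every trip whose stored route used the closed street. A model without a coherent map is closer to the second navigator: it has nothing to fall back on when the streets change.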

When they analyzed the city maps generated by the models, they found strange, tangled layouts filled with fictitious streets and impossible connections. These findings underscore that while current AI models may excel at certain tasks, they lack a genuine understanding of the underlying rules governing those tasks. If researchers aim to build LLMs that accurately capture world models, they need to rethink their methods.

“We often marvel at the impressive feats these models achieve, leading us to assume they comprehend the world. It’s vital to approach this question with care, going beyond our intuitions,” Rambachan concludes.

Moving forward, the team aims to explore a broader range of problems, including scenarios where not all rules are known. They also plan to apply their evaluation metrics to real-world scientific challenges.

This research was partially funded by the Harvard Data Science Initiative, a National Science Foundation Graduate Research Fellowship, the Vannevar Bush Faculty Fellowship, a Simons Collaboration grant, and support from the MacArthur Foundation.

Photo credit & article inspired by: Massachusetts Institute of Technology