In mechanical engineering, computational design typically begins with identifying a problem or goal, followed by a review of the literature, resources, and systems that could address it. The Design Computation and Digital Engineering (DeCoDE) Lab at MIT takes a different approach: it starts by pushing the limits of what is possible.

In collaboration with the MIT-IBM Watson AI Lab, a team led by ABS Career Development Assistant Professor Faez Ahmed and graduate student Amin Heyrani Nobari in the Department of Mechanical Engineering is combining machine learning and generative AI with physical modeling and engineering principles to rethink design methods and improve the development of mechanical systems. One of their projects, Linkages, explores new ways to connect planar bars and joints so that they trace curved paths. Here, Ahmed and Nobari discuss their findings.

Q: How is your team approaching mechanical engineering questions from the standpoint of observations?

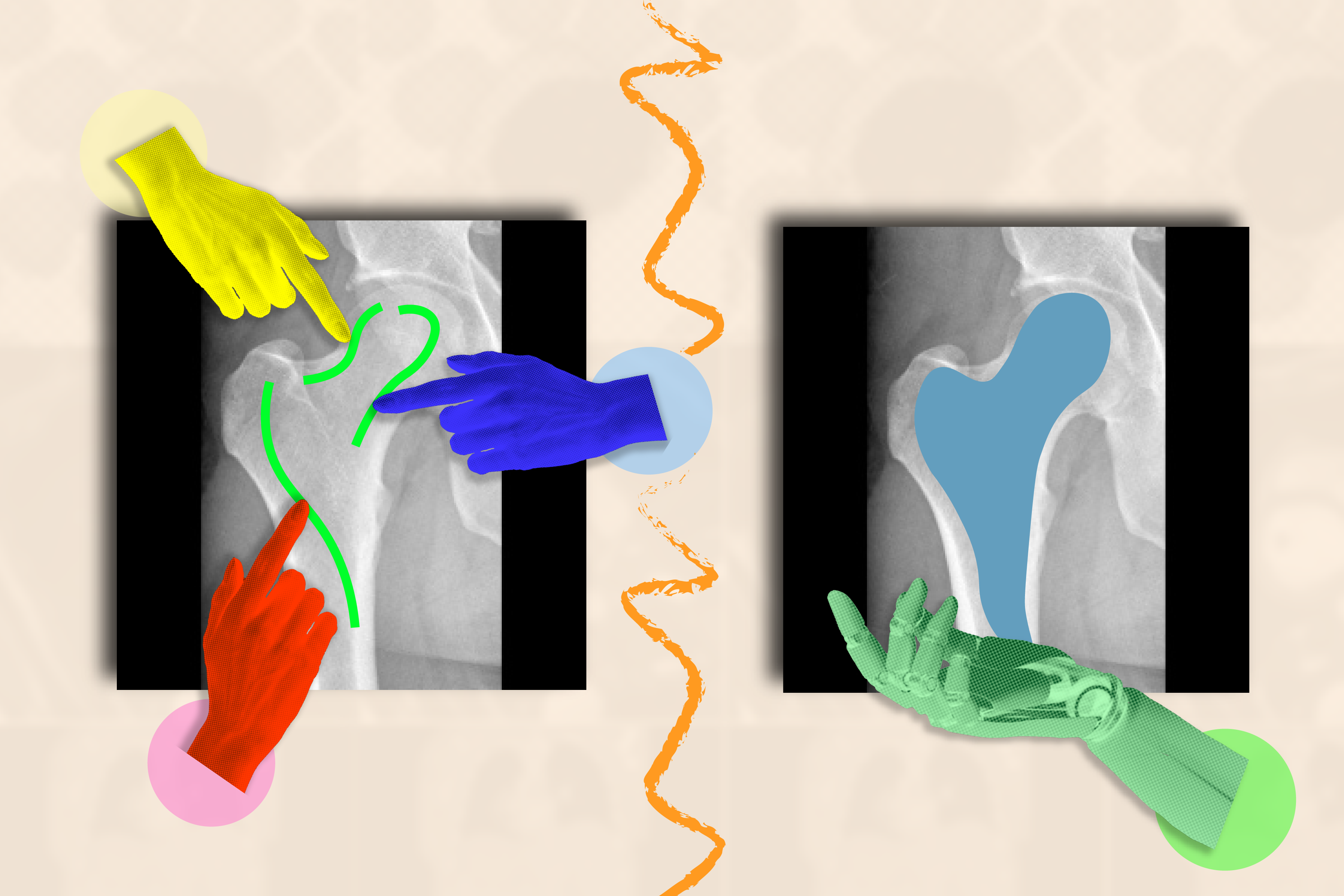

Ahmed: We’re exploring the question: How can generative AI be used in engineering applications? A key challenge is building precision into generative AI models. Our research uses self-supervised contrastive learning to learn representations of linkages and curves, capturing both how a design works and what it looks like.

This ties closely to automated discovery: Can AI algorithms help us find new products? More broadly, whether the work involves linkages, generative AI in general, or large language models, precision is paramount. The lessons from these models, which combine data-driven learning with engineering simulations and joint embeddings of design and performance, could carry over to many engineering fields. We aim to provide a proof of concept that designers can build on for problems ranging from ships and aircraft to precise image generation.

In the linkages project, a design is a set of bars and the way they are connected. Its output is the path traced when the mechanism moves, and we learn representations of designs and paths jointly. The main input is a user-defined path, from which we generate mechanisms that reproduce it. This lets us solve the design problem far more precisely and quickly: our approach is 28 times more accurate and 20 times faster than the previous state-of-the-art approaches.
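The link between a design (bars and joints) and its output (the traced path) can be made concrete with the simplest planar linkage, a four-bar mechanism. The sketch below is an illustration, not the lab's simulator: it assumes ground pivots at (0, 0) and (ground, 0), a crank that rotates fully about the origin, and a tracer point rigidly attached to the coupler bar. All function and parameter names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]

def circle_intersection(c0: Point, r0: float, c1: Point, r1: float,
                        branch: int) -> Optional[Point]:
    """One intersection of two circles, or None if they do not meet."""
    dx, dy = c1[0] - c0[0], c1[1] - c0[1]
    dist = math.hypot(dx, dy)
    if dist == 0 or dist > r0 + r1 or dist < abs(r0 - r1):
        return None  # the linkage cannot be assembled at this input angle
    ux, uy = dx / dist, dy / dist
    along = (r0 * r0 - r1 * r1 + dist * dist) / (2 * dist)
    h = math.sqrt(max(r0 * r0 - along * along, 0.0))
    # branch = +1 or -1 picks one of the two assembly configurations
    return (c0[0] + along * ux - branch * h * uy,
            c0[1] + along * uy + branch * h * ux)

def coupler_curve(crank: float, coupler: float, rocker: float, ground: float,
                  along: float, offset: float, n: int = 72,
                  branch: int = 1) -> List[Optional[Point]]:
    """Path of a point fixed to the coupler of a four-bar linkage.

    The tracer sits `along` units from the crank-coupler joint in the
    direction of the coupler bar and `offset` units perpendicular to it.
    """
    o4 = (ground, 0.0)  # second ground pivot
    path: List[Optional[Point]] = []
    for k in range(n):
        theta = 2 * math.pi * k / n
        b = (crank * math.cos(theta), crank * math.sin(theta))  # crank-coupler joint
        c = circle_intersection(b, coupler, o4, rocker, branch)  # coupler-rocker joint
        if c is None:
            path.append(None)
            continue
        ux, uy = (c[0] - b[0]) / coupler, (c[1] - b[1]) / coupler
        path.append((b[0] + along * ux - offset * uy,
                     b[1] + along * uy + offset * ux))
    return path

curve = coupler_curve(crank=1.0, coupler=3.0, rocker=3.0, ground=3.5,
                      along=1.5, offset=1.0)
```

With these bar lengths the shortest link is the crank and the Grashof condition holds (1 + 3.5 ≤ 3 + 3), so the crank turns fully and every sample assembles. Synthesis runs this mapping in reverse: given `curve`, find the bar lengths and connections that produce it.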

Q: Can you elaborate on the linkages methodology and how it stands out against other methodologies?

Nobari: Our contrastive learning method involves treating mechanisms as graphs; each joint acts as a node containing key features, including positional data and joint types, such as fixed or free joints.
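A minimal sketch of this graph encoding, assuming each joint carries only its (x, y) position and a fixed/free flag. The `Joint` and `Mechanism` classes are hypothetical, and `graph_embedding` is a parameter-free placeholder (neighbor averaging followed by mean pooling) standing in for a learned graph neural network; it is not the team's architecture.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Joint:
    x: float
    y: float
    fixed: bool  # True for a ground (fixed) joint, False for a free joint

@dataclass
class Mechanism:
    joints: List[Joint]
    bars: List[Tuple[int, int]]  # each bar connects two joint indices

    def node_features(self) -> List[List[float]]:
        """One feature row per joint: position plus a fixed/free flag."""
        return [[j.x, j.y, 1.0 if j.fixed else 0.0] for j in self.joints]

    def neighbors(self) -> List[List[int]]:
        """Adjacency list built from the bars (edges are undirected)."""
        adj: List[List[int]] = [[] for _ in self.joints]
        for a, b in self.bars:
            adj[a].append(b)
            adj[b].append(a)
        return adj

def graph_embedding(mech: Mechanism, rounds: int = 2) -> List[float]:
    """Average each node with its neighbors `rounds` times, then mean-pool."""
    feats = mech.node_features()
    adj = mech.neighbors()
    for _ in range(rounds):
        updated = []
        for i, row in enumerate(feats):
            group = [row] + [feats[j] for j in adj[i]]
            updated.append([sum(col) / len(group) for col in zip(*group)])
        feats = updated
    return [sum(col) / len(feats) for col in zip(*feats)]

# A four-bar linkage: two ground joints, two free joints, three moving bars.
four_bar = Mechanism(
    joints=[Joint(0.0, 0.0, True), Joint(1.0, 0.0, False),
            Joint(2.25, 2.73, False), Joint(3.5, 0.0, True)],
    bars=[(0, 1), (1, 2), (2, 3)],
)
embedding = graph_embedding(four_bar)
```

A learned GNN would replace the plain averaging with trainable message and update functions, but the inputs (node features plus connectivity) and the output (one fixed-size vector per mechanism) have the same shape.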

We use an architecture that captures the essentials of kinematics: at its core, a graph neural network computes embeddings of these mechanism graphs. A second model computes embeddings of the input curves, and contrastive learning links the two modalities.
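The contrastive step can be illustrated with the widely used symmetric InfoNCE (CLIP-style) objective. Whether the lab uses exactly this loss is an assumption; the sketch only shows the general idea. Row i of each batch is a matching (mechanism, curve) pair, so matched embeddings are pulled together and mismatched pairs in the same batch are pushed apart.

```python
import math
from typing import List

def normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def info_nce(mech_emb: List[List[float]], curve_emb: List[List[float]],
             temperature: float = 0.1) -> float:
    """Symmetric InfoNCE loss over a batch of matching (mechanism, curve) pairs."""
    m = [normalize(v) for v in mech_emb]
    c = [normalize(v) for v in curve_emb]
    n = len(m)
    # Cosine similarity of every mechanism with every curve, scaled by temperature.
    sim = [[sum(a * b for a, b in zip(m[i], c[j])) / temperature for j in range(n)]
           for i in range(n)]

    def cross_entropy(rows: List[List[float]]) -> float:
        """Mean cross-entropy where the correct class for row i is column i."""
        total = 0.0
        for i, row in enumerate(rows):
            top = max(row)  # subtract the max for numerical stability
            log_z = top + math.log(sum(math.exp(s - top) for s in row))
            total += log_z - row[i]
        return total / len(rows)

    cols = [list(col) for col in zip(*sim)]  # curve-to-mechanism direction
    return 0.5 * (cross_entropy(sim) + cross_entropy(cols))

aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

When each mechanism embedding points in the same direction as its own curve embedding, `aligned` is close to zero; when the pairs are swapped, `swapped` is large. Training both encoders to minimize this loss is what places mechanisms and curves in one shared space.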

This training framework lets us find new mechanisms while keeping precision front and center. For each candidate mechanism we identify, we run an optimization step to match the target curve closely.
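Once curves and mechanisms share an embedding space, finding candidates typically reduces to nearest-neighbor search. This sketch assumes the curve encoder has already produced a query embedding and that mechanism embeddings for a dataset are precomputed; `retrieve`, the mechanism names, and the toy vectors are hypothetical.

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, treating zero vectors as dissimilar to everything."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query: List[float], database: Dict[str, List[float]],
             k: int = 3) -> List[Tuple[str, float]]:
    """Return the k mechanisms whose embeddings are most similar to the query curve."""
    scored = [(name, cosine(query, emb)) for name, emb in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Hypothetical precomputed mechanism embeddings and a curve-query embedding.
database = {
    "mech_a": [1.0, 0.0, 0.0],
    "mech_b": [0.7, 0.7, 0.0],
    "mech_c": [0.0, 0.0, 1.0],
}
candidates = retrieve([0.9, 0.1, 0.0], database, k=2)
```

Each returned candidate would then be handed to the optimization phase. At dataset scale, the linear scan would usually be replaced by an approximate nearest-neighbor index.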

Once the combinatorial part is settled and the mechanism is close to the target curve, we use direct gradient-based optimization to fine-tune the joint positions and obtain highly precise results. This step is crucial to our success.
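To show what gradient-based refinement of joint positions means, the sketch below uses a deliberately tiny mechanism: a single crank whose tip joint rotates about a ground pivot and traces a circle. It assumes the target curve is sampled at known crank angles and minimizes the mean squared distance between traced and target points using hand-derived gradients. The interview does not specify the real loss or optimizer; `trace`, `loss_and_grad`, `refine`, and the learning rate are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def trace(pivot: Point, tip: Point, angles: List[float]) -> List[Point]:
    """Positions of the tip joint as the crank rotates about the pivot by each angle."""
    px, py = pivot
    vx, vy = tip[0] - px, tip[1] - py
    return [(px + math.cos(a) * vx - math.sin(a) * vy,
             py + math.sin(a) * vx + math.cos(a) * vy) for a in angles]

def loss_and_grad(pivot: Point, tip: Point, angles: List[float], target: List[Point]):
    """Mean squared error to the target and its gradient w.r.t. both joint positions.

    Each traced point is p + R(a)(q - p), so d/dq = R(a) and d/dp = I - R(a).
    """
    n = len(angles)
    loss, gp, gq = 0.0, [0.0, 0.0], [0.0, 0.0]
    for a, (x, y), (tx, ty) in zip(angles, trace(pivot, tip, angles), target):
        ex, ey = x - tx, y - ty
        loss += (ex * ex + ey * ey) / n
        # R(a)^T e: the residual rotated back by -a
        rx = math.cos(a) * ex + math.sin(a) * ey
        ry = -math.sin(a) * ex + math.cos(a) * ey
        gq[0] += 2 * rx / n
        gq[1] += 2 * ry / n
        gp[0] += 2 * (ex - rx) / n
        gp[1] += 2 * (ey - ry) / n
    return loss, gp, gq

def refine(pivot: Point, tip: Point, angles: List[float], target: List[Point],
           lr: float = 0.2, steps: int = 300) -> Tuple[Point, Point]:
    """Plain gradient descent on the coordinates of the two joints."""
    p, q = list(pivot), list(tip)
    for _ in range(steps):
        _, gp, gq = loss_and_grad((p[0], p[1]), (q[0], q[1]), angles, target)
        for k in range(2):
            p[k] -= lr * gp[k]
            q[k] -= lr * gq[k]
    return (p[0], p[1]), (q[0], q[1])

angles = [2 * math.pi * k / 36 for k in range(36)]
target = trace((1.0, 2.0), (1.5, 2.0), angles)
pivot, tip = refine((0.8, 1.7), (1.9, 2.3), angles, target)
```

Starting from a rough guess, `refine` recovers the pivot (1.0, 2.0) and tip (1.5, 2.0) that generated the target. In this toy the traced points depend linearly on the joint coordinates, so the loss is a convex quadratic and plain gradient descent converges; real linkage losses are nonconvex, which is why a good starting candidate from the combinatorial stage matters.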

We’ve tackled complex tasks such as tracing letters of the alphabet, which traditional methods struggle to do. Many existing machine learning approaches are limited to mechanisms with only four or six bars, but our method shows that even with relatively few joints, complex curves can be replicated accurately.

Previously, it was unclear what a single linkage mechanism could and could not do. Designing a mechanism that traces an intricate shape, such as the letter ‘M,’ was a major hurdle. Our method shows that such designs are achievable.

When we tried off-the-shelf generative models for graphs, they were difficult to train effectively, especially because of the continuous variables that govern kinematic behavior. The many possible combinations of joints and linkages were also hard for these models to handle.

The difficulty becomes even sharper on the optimization side. Formulating the design as a mixed-integer nonlinear problem requires simplifying the governing equations so that mixed-integer conic programming can be applied. Beyond seven joints, the combined combinatorial and continuous search space becomes so large that producing a single mechanism for a specific target can take two days. Exhaustively searching the entire design space is practically infeasible, and blindly applying deep learning does not work either.
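A rough count shows why the combinatorial side grows so quickly. This is an illustrative upper bound, not a figure from the interview: it treats every pair of labeled joints as either joined by a bar or not, and it ignores which layouts are kinematically valid or equivalent under relabeling. Every layout also carries continuous joint coordinates on top of this count.

```python
from math import comb

def connectivity_patterns(n_joints: int) -> int:
    """Upper bound on bar layouts: every pair of labeled joints is either joined by a bar or not."""
    return 2 ** comb(n_joints, 2)

def with_joint_types(n_joints: int) -> int:
    """The same bound, with each joint additionally labeled fixed or free."""
    return connectivity_patterns(n_joints) * 2 ** n_joints

for n in (4, 7, 10):
    print(n, connectivity_patterns(n), with_joint_types(n))
```

For seven joints the first bound is already 2^21 = 2,097,152 layouts, and for ten joints it is 2^45, about 3.5 × 10^13, before any joint position has been chosen.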

State-of-the-art deep learning methods often use reinforcement learning: given a target curve, they generate mechanisms more or less at random, much like Monte Carlo optimization. Our approach, by contrast, performs 28 times better and runs in just 75 seconds, compared with roughly 45 minutes for the reinforcement learning methods. Traditional optimization methods can run for more than 24 hours without converging.
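For contrast, the simplest form of Monte Carlo optimization samples candidate designs at random and keeps the best one found so far. This sketch is not the reinforcement learning method mentioned above; it is a plain random search on a toy mechanism (a single crank whose tip rotates about a ground pivot). The sampling box, sample count, seed, and function names are assumptions.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]

def trace(pivot: Point, tip: Point, angles: List[float]) -> List[Point]:
    """Positions of the tip joint as the crank rotates about the pivot by each angle."""
    px, py = pivot
    vx, vy = tip[0] - px, tip[1] - py
    return [(px + math.cos(a) * vx - math.sin(a) * vy,
             py + math.sin(a) * vx + math.cos(a) * vy) for a in angles]

def mse(a: List[Point], b: List[Point]) -> float:
    """Mean squared distance between corresponding points of two curves."""
    return sum((x0 - x1) ** 2 + (y0 - y1) ** 2
               for (x0, y0), (x1, y1) in zip(a, b)) / len(a)

def random_search(target: List[Point], angles: List[float], samples: int = 2000,
                  box: float = 3.0, seed: int = 0):
    """Sample joint positions uniformly in a box and keep the best design found."""
    rng = random.Random(seed)
    best, best_err = None, math.inf
    history = []  # best error after each sample
    for _ in range(samples):
        pivot = (rng.uniform(-box, box), rng.uniform(-box, box))
        tip = (rng.uniform(-box, box), rng.uniform(-box, box))
        err = mse(trace(pivot, tip, angles), target)
        if err < best_err:
            best, best_err = (pivot, tip), err
        history.append(best_err)
    return best, best_err, history

angles = [2 * math.pi * k / 24 for k in range(24)]
target = trace((1.0, 2.0), (1.5, 2.0), angles)
best, best_err, history = random_search(target, angles, samples=500)
```

Plotting `history` typically shows quick early gains followed by a long plateau: random sampling gets close only by chance, and the number of samples needed grows rapidly with the number of design variables.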

We’ve established a strong proof of concept with linkage mechanisms. This inherently complex problem shows that traditional optimization and standard deep learning fall short on their own.

Q: What broader implications does the development of techniques like linkages have for the evolution of human-AI collaborative design?

Ahmed: The most obvious benefits are in the design of machines and mechanical systems, which we’ve already demonstrated. More broadly, this work shows that we can handle discrete and continuous spaces together. How the linkages are connected to one another is a discrete choice, while the positions of the joints are continuous variables that can change freely. Working in coupled discrete and continuous spaces is extremely difficult, and most machine learning applications deal with only one kind of variable: computer vision is largely continuous, while language is largely discrete. Showing that we can handle both opens the door to many engineering applications, from metamaterials to complex networks.

Our immediate next steps include more complex mechanical systems and additional physics, such as different kinds of elastic behavior. We’re also interested in bringing these precision techniques to large language models and carrying the lessons over to build generative capabilities. Our current model retrieves mechanisms from an existing dataset and then optimizes them; future generative models could create mechanisms directly, moving toward end-to-end learning that no longer needs a separate optimization step.

Nobari: Many areas of mechanical engineering can benefit from this technology. One example is inverse kinematic synthesis, where a mechanism must follow a specific motion path. Car suspension systems, for instance, need a particular motion path and are typically modeled with 2D planar mechanisms to achieve it.

The next step will be to show that similar frameworks work for other complex problems that mix combinatorial and continuous variables. One area of interest is compliant mechanisms, which rely on deformable materials rather than rigid structures to achieve the desired motion.

Compliant mechanisms are widely used in precision machinery, particularly in fixtures that must hold components securely and repeatably with high precision. Automating their design with our framework could be a significant advance.

Problems that combine combinatorial and continuous design variables remain highly challenging, but we believe significant progress in this area is close at hand.

This research received partial support from the MIT-IBM Watson AI Lab.

Photo credit & article inspired by: Massachusetts Institute of Technology