Meet Mark Hamilton, an innovative PhD student at MIT specializing in electrical engineering and computer science, affiliated with the renowned Computer Science and Artificial Intelligence Laboratory (CSAIL). His groundbreaking work focuses on harnessing machines to decode animal communication, and he’s embarked on a fascinating mission to teach algorithms human language from scratch.

Hamilton’s inspiration struck during a captivating scene in the documentary ‘March of the Penguins.’ In this moment, a penguin struggles to rise after slipping on ice, emitting a comical groan that seems to convey frustration. This observation sparked Hamilton’s curiosity: could we train an algorithm to watch endless hours of video to understand human language? “Is there a way we could let an algorithm watch TV all day and from this figure out what we’re talking about?” he muses.

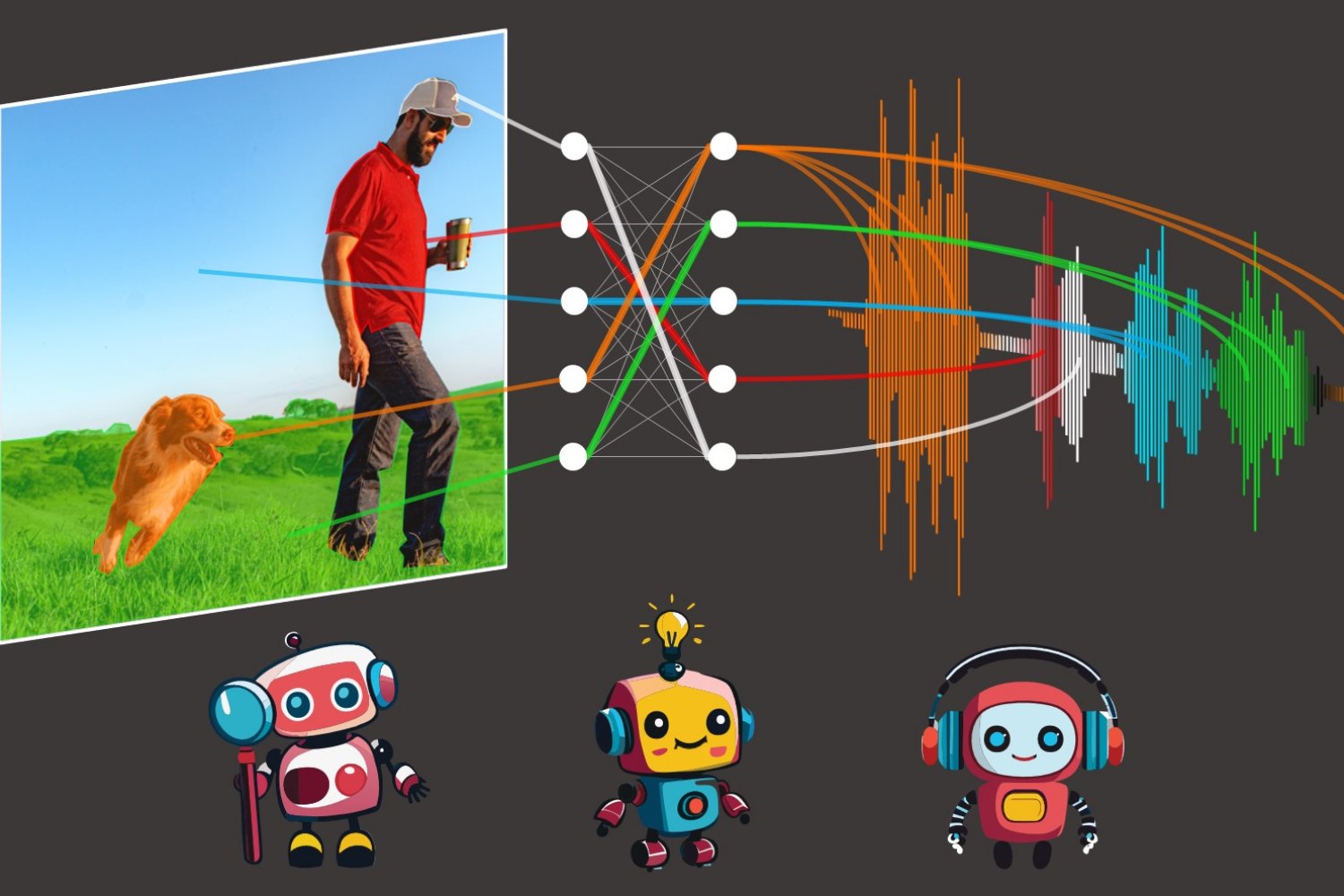

Thus, the model known as ‘DenseAV’ was born. Its unique design allows it to learn language by associating what it hears with what it sees, and vice versa. For instance, if the audio says, “bake the cake at 350,” it likely correlates with visual cues of a cake or an oven. “To excel in this audio-visual matching challenge across millions of videos, our model needs to grasp the context of human communication,” Hamilton explains.

After training DenseAV to play this matching game, the team analyzed which pixels the model focused on in response to various sounds. For example, when it hears the word “dog,” it actively searches for dogs within the video stream. This process offers insight into the algorithm’s interpretation of words and sounds.

Intriguingly, DenseAV exhibited a similar process when it detected a bark; it immediately sought out a dog in the video. Hamilton and his team addressed whether the model could differentiate between the spoken word “dog” and the actual sound of barking. They equipped DenseAV with a “two-sided brain,” leading to a remarkable discovery: one hemisphere specialized in words, while the other focused on sounds. This ability showcases DenseAV’s capacity to learn diverse types of connections without any need for human aids or conventional text.

DenseAV holds promising potential across various applications, from analyzing the vast amounts of video content uploaded daily to understanding new languages, such as dolphin or whale communications, which lack a textual representation. “Our ambition is for DenseAV to unlock the secrets of languages that have eluded human translators,” Hamilton asserts. Furthermore, this approach might unveil patterns between other data signals, such as the Earth’s seismic noises and geological features.

One significant challenge for the team was enabling language learning without any text input. Their mission was to rediscover language meaning from a blank slate, mirroring how children naturally listen and learn from their surroundings.

DenseAV achieves this ambitious goal by utilizing two primary components for processing audio and visual data separately. This separation eliminates the possibility of the algorithm cheating by ensuring that neither side can peek at the other’s data. Consequently, the algorithm is equipped to identify objects and build comprehensive and meaningful representations for both audio and visual signals. By contrasting pairs of corresponding audio and visual signals, DenseAV learns what matches and what doesn’t. This method, known as contrastive learning, requires no labeled examples and empowers DenseAV to uncover crucial predictive patterns inherent in language.

Unlike its predecessors, DenseAV doesn’t limit itself to a single interpretation of sound-image similarity. Prior algorithms matched complete audio clips, such as “the dog sat on the grass,” to entire corresponding images. This approach neglected fine-grained details, like the association of the word “grass” with the actual grass beneath the dog. DenseAV, however, examines and aggregates all possible audio-visual matches. “Where conventional methods used a single class token, our technique compares each pixel and sound moment, enhancing performance and enabling superior localization,” Hamilton details.

The researchers trained DenseAV on AudioSet, which encompasses 2 million YouTube videos, while also designing new datasets to evaluate how effectively the model could link sounds and images. DenseAV has proven itself in tests by outperforming leading models in identifying objects based on their sounds and names. “Previous datasets only supported broad assessments, so we created one utilizing semantic segmentation datasets for precise performance evaluation,” Hamilton explains. This leads to pixel-perfect annotations for accurate analysis of the model’s abilities.

The complexity of the project prompted it to take nearly a year to complete. The team faced challenges when adopting a large transformer architecture, as these types of models often overlook intricate details. Guiding the model to focus on these finer points proved to be a notable hurdle.

Looking forward, the researchers envision creating systems capable of learning from extensive amounts of only video or audio data, particularly crucial for domains where one format predominates over the other. They also aspire to scale their approach using larger models and possibly incorporate knowledge from existing language models to enhance outcomes.

“Recognizing and segmenting visual objects in images, alongside environmental sounds and spoken words in audio, are each formidable problems in their own right. Traditionally, researchers have relied on costly human-generated annotations to train machine learning models for these tasks,” remarks David Harwath, an assistant professor in computer science at the University of Texas at Austin, who was not involved in this study. “DenseAV makes impressive strides toward developing methods that learn to tackle these challenges by simply observing the world through both sound and sight, leveraging the reality that things we see and interact with often produce sounds, and we converse about them using spoken language. Importantly, this model carries no assumptions about the specific language being spoken, allowing it to absorb data from any language. It’s exciting to think about what DenseAV could achieve if scaled up with thousands or millions of hours of video across various languages.”

Additional authors on a paper describing the work include Andrew Zisserman, a professor of computer vision engineering at the University of Oxford; John R. Hershey, a Google AI Perception researcher; and William T. Freeman, a professor at MIT and CSAIL principal investigator. Their research received partial support from the U.S. National Science Foundation, a Royal Society Research Professorship, and an EPSRC Programme Grant Visual AI. This groundbreaking work will be presented at the IEEE/CVF Computer Vision and Pattern Recognition Conference this month.

Photo credit & article inspired by: Massachusetts Institute of Technology