In today’s rapidly evolving world of artificial intelligence (AI), sequence models have gained immense traction due to their ability to analyze data and predict subsequent actions. You’ve likely interacted with next-token prediction models such as ChatGPT, which forecast each word (or token) to form coherent responses. On the other hand, full-sequence diffusion models like Sora transform textual inputs into stunning, lifelike visuals by progressively “denoising” entire video sequences.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced a novel approach to enhance the flexibility of diffusion training schemes. This innovative technique could significantly improve AI applications in fields like computer vision and robotics.

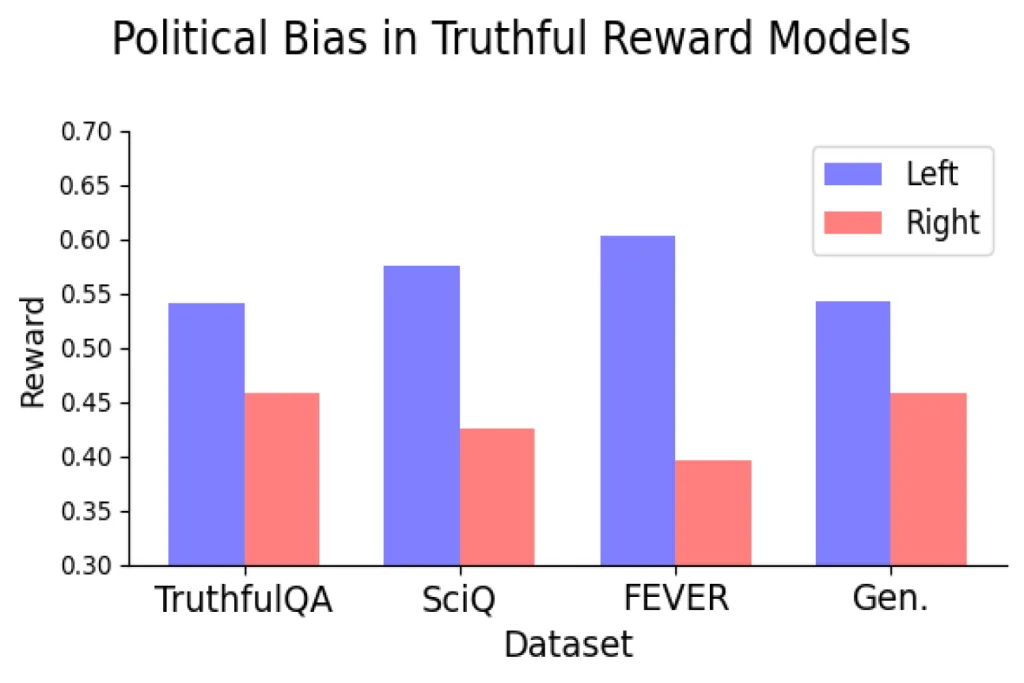

Both next-token and full-sequence diffusion models exhibit unique strengths and limitations. Next-token models can generate sequences of varying lengths, but they often overlook optimal states that lie far ahead—like steering their output 10 tokens into the future. This limitation necessitates auxiliary mechanisms for long-term planning. Conversely, diffusion models can condition on future states but struggle with generating sequences of variable length.

To capitalize on the strengths of both models, CSAIL researchers devised a training technique known as “Diffusion Forcing.” This name draws inspiration from “Teacher Forcing,” a conventional strategy that simplifies the complex process of full sequence generation by breaking it down into manageable next-token generation steps.

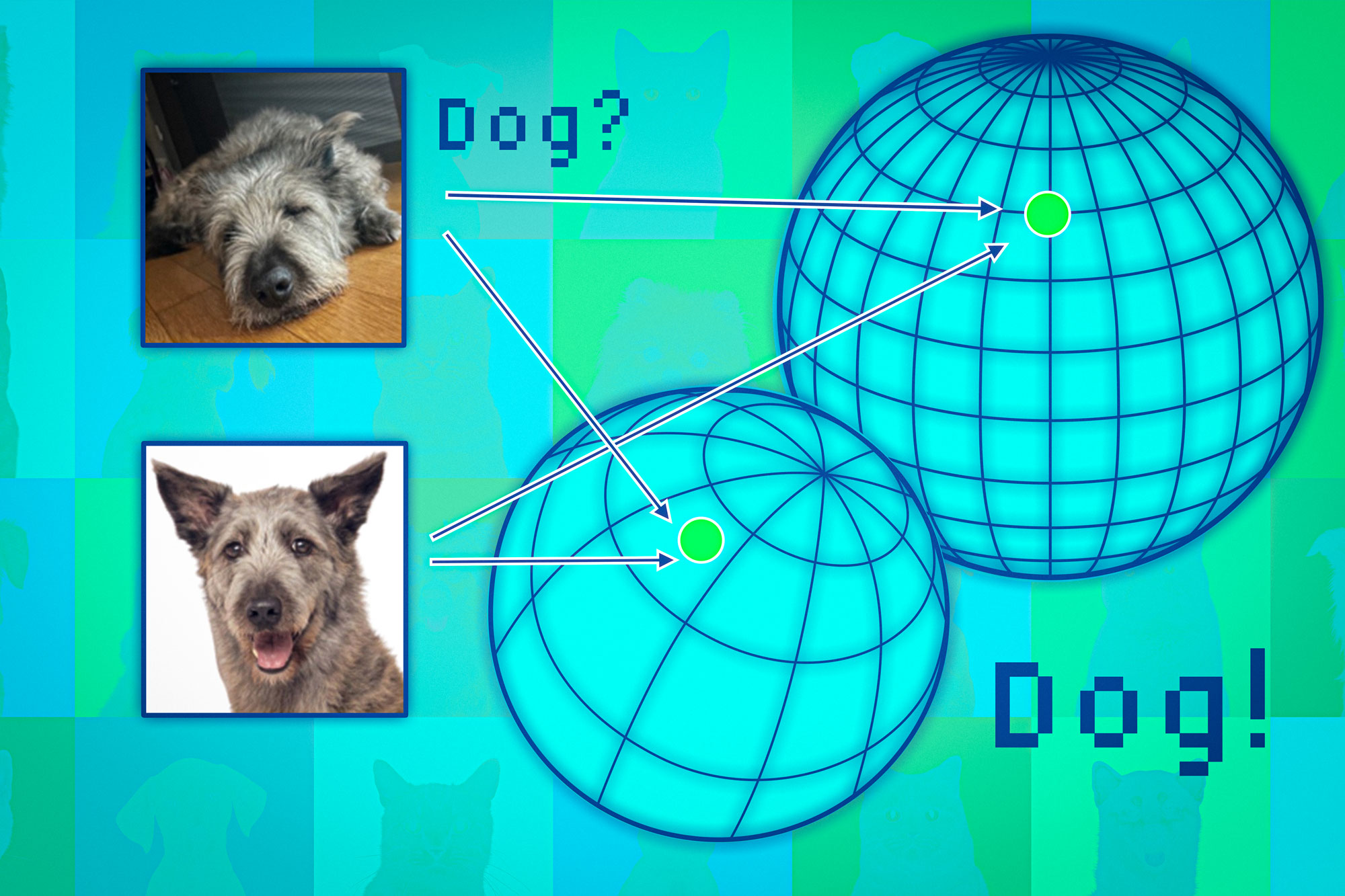

Diffusion Forcing reconciles diffusion models with teacher forcing by employing training methods that involve predicting masked (noisy) tokens from their unmasked counterparts. For diffusion models, noise is gradually introduced to data, akin to fractional masking. With their Diffusion Forcing method, MIT researchers trained neural networks to cleanse a set of tokens by managing various levels of noise while simultaneously predicting subsequent tokens. The outcome? A flexible and robust sequence model that enhances the quality of AI-generated videos and refines decision-making capabilities for robots and intelligent agents.

By navigating through noisy data and accurately forecasting the next steps in tasks, Diffusion Forcing allows a robot to disregard visual distractions while performing manipulation tasks. Moreover, it excels in producing stable, consistent video sequences and can even guide an AI agent through virtual mazes. This technique holds the potential to empower household and industrial robots to adapt to new tasks and elevate the quality of AI-generated entertainment.

“Sequence models aim to leverage known past events to predict unknown futures, employing a form of binary masking. However, not all masking needs to be binary,” explains Boyuan Chen, an MIT PhD student in electrical engineering and computer science, and a CSAIL research member. “With Diffusion Forcing, we introduce various levels of noise to each token, effectively achieving a type of fractional masking. During testing, our system can ‘unmask’ a set of tokens and diffuse a sequence for the near future at a reduced noise level. This allows it to discern trustworthy data amidst out-of-distribution inputs.”

In rigorous experiments, Diffusion Forcing demonstrated robust capabilities in ignoring misleading data while performing tasks and anticipating future actions.

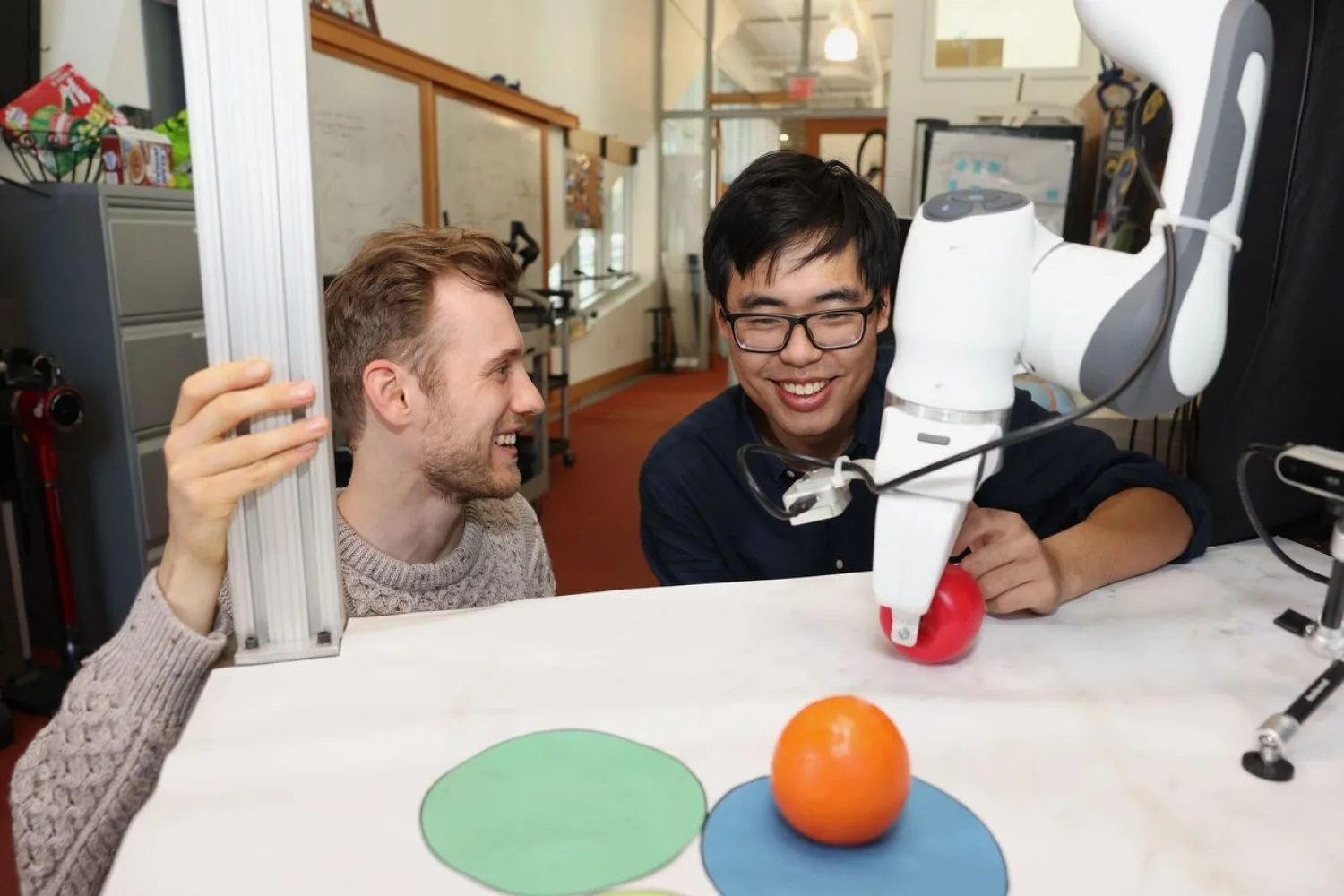

For instance, when implemented in a robotic arm, it facilitated the transfer of two toy fruits across three circular mats—a fundamental example of complex, long-horizon tasks requiring memory. Researchers trained the robot to replicate user actions from a distance using virtual reality and a camera feed. Despite initial random positions and distractions like a shopping bag obstructing markers, the robot successfully placed the objects in their designated spots.

Furthermore, the researchers utilized Diffusion Forcing to create videos by feeding it gameplay footage from Minecraft and colorful digital environments simulated within Google’s DeepMind Lab Simulator. Given just a single frame of footage, this method produced stable, high-resolution videos, outperforming comparable models such as full-sequence diffusion models like Sora and next-token models akin to ChatGPT, the latter of which often fail to generate coherent video beyond 72 frames.

Beyond merely generating engaging videos, Diffusion Forcing can function as a motion planner, guiding entities toward desired outcomes or rewards. Its inherent flexibility allows it to create plans with varied horizons, perform tree search, and incorporate the intuition that uncertainty increases with time. In a 2D maze solving task, Diffusion Forcing surpassed six baseline models by generating quicker solutions, showcasing its potential as a robust planner for future robotics applications.

Throughout each demonstration, Diffusion Forcing operated seamlessly as both a full sequence model and a next-token prediction model. According to Chen, this adaptable approach may serve as a foundational element for a “world model”—an AI system capable of simulating real-world dynamics by learning from vast quantities of internet videos. This could empower robots to undertake new and unfamiliar tasks by visualizing the necessary actions based on their environment. For example, if instructed to open a door without prior training, the model could generate a visual representation showing the process.

The research team aims to expand their method with larger datasets and modern transformer models to further enhance performance. They envision the development of a ChatGPT-like robot brain that allows robots to operate autonomously in novel environments without requiring human demonstrations.

“With Diffusion Forcing, we are making strides toward intertwining video generation and robotics,” stated Vincent Sitzmann, a senior author, MIT assistant professor, and member of CSAIL leading the Scene Representation group. “Ultimately, we aspire to leverage the wealth of knowledge captured in online videos to enable robots to assist in our daily lives. There are still numerous captivating research challenges ahead—like enabling robots to learn by imitating humans, despite their differing physical forms!”

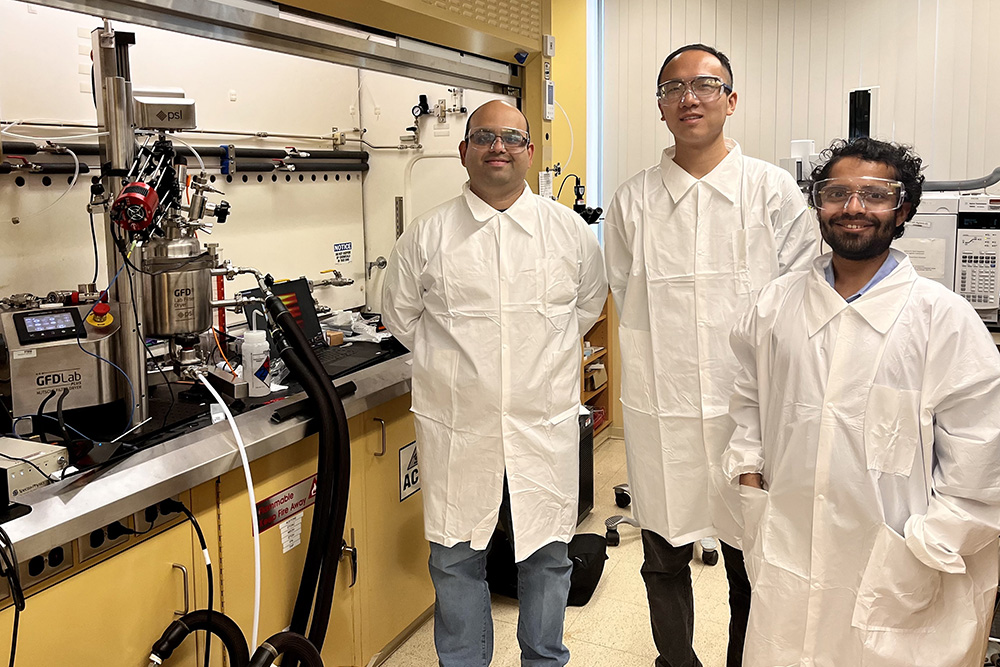

Chen and Sitzmann collaborated on this paper with visiting MIT researcher Diego Martí Monsó, along with CSAIL affiliates Yilun Du, a graduate student in EECS; Max Simchowitz, a former postdoc set to join Carnegie Mellon University as an assistant professor; and Russ Tedrake, the Toyota Professor at MIT, vice president of robotics research at the Toyota Research Institute, and a CSAIL member. Their research received support from various organizations, including the U.S. National Science Foundation, Singapore Defence Science and Technology Agency, and the Amazon Science Hub. They plan to present their findings at NeurIPS in December.

Photo credit & article inspired by: Massachusetts Institute of Technology