In the medical field, one of the essential responsibilities of a physician is to assess probabilities: What are the chances of a treatment’s success? Is there a risk of severe complications for the patient? When should follow-up tests be scheduled? Amid these vital evaluations, the emergence of artificial intelligence (AI) holds the potential to mitigate risks in healthcare settings, enabling doctors to better prioritize care for at-risk patients.

Despite this promise, a commentary by researchers from the MIT Department of Electrical Engineering and Computer Science (EECS), Equality AI, and Boston University calls for stronger regulatory oversight of AI technologies. The commentary appears in the October issue of the New England Journal of Medicine AI (NEJM AI) and follows a new rule from the U.S. Office for Civil Rights (OCR) within the Department of Health and Human Services (HHS).

In May, the OCR published a final rule under the Affordable Care Act (ACA) that bars discrimination based on race, color, national origin, age, disability, or sex in “patient care decision support tools.” The term covers both AI systems and non-automated tools used in medicine.

The rule stems from President Joe Biden’s Executive Order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, issued in 2023. It reinforces the Biden-Harris administration’s commitment to advancing health equity and preventing discrimination in healthcare.

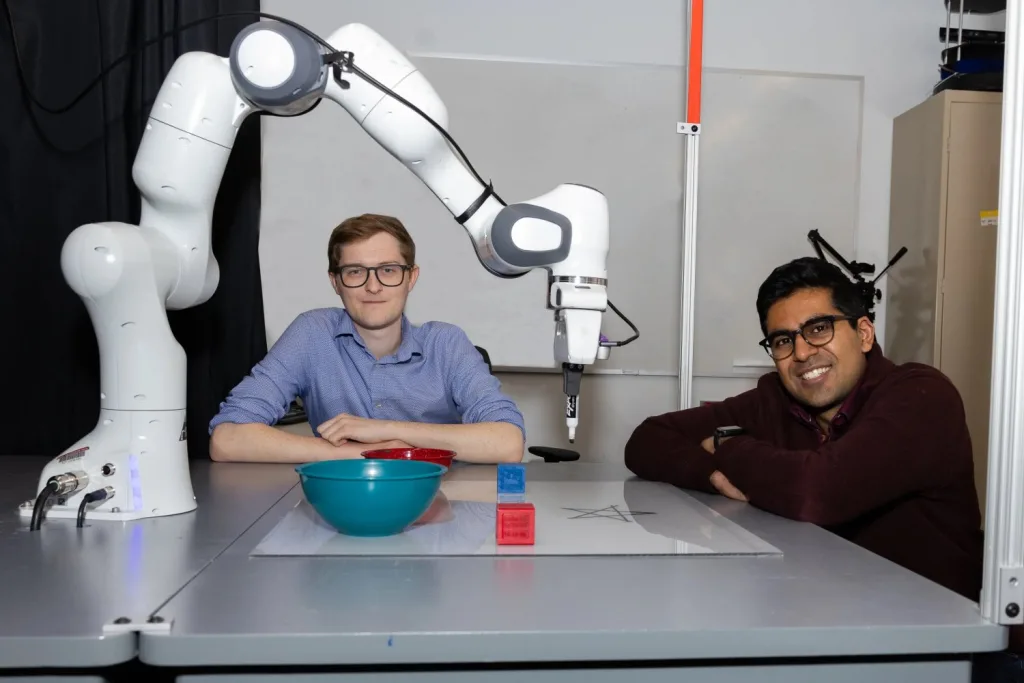

Senior author Marzyeh Ghassemi, an associate professor in EECS, calls the rule an important step forward. Ghassemi, who is affiliated with the MIT Abdul Latif Jameel Clinic for Machine Learning in Health (Jameel Clinic), the Computer Science and Artificial Intelligence Laboratory (CSAIL), and the Institute for Medical Engineering and Science (IMES), says the regulation should drive equity-centered improvements to both AI and the clinical decision-support tools already in use.

The number of AI-enabled medical devices approved by the U.S. Food and Drug Administration (FDA) has surged over the last decade. The first such device, the PAPNET Testing System for cervical screening, was approved in 1995. As of October, the FDA had approved nearly 1,000 AI-enabled devices, many of which are designed to support clinical decision-making.

However, the researchers point out that the clinical risk scores produced by decision-support tools remain unregulated, even though an estimated 65 percent of U.S. physicians use these tools monthly to decide on next steps in patient care.

To address this regulatory gap, the Jameel Clinic will host a regulatory conference in March 2025. Last year’s conference sparked discussion and debate among experts, regulators, and faculty members about how AI in healthcare should be regulated.

Isaac Kohane, chair of the Department of Biomedical Informatics at Harvard Medical School and editor-in-chief of NEJM AI, noted that clinical risk scores are more transparent than complex AI algorithms. Even so, he emphasized, these scores are only as good as the datasets used to train them, and because they influence clinical decisions, they should be held to the same rigorous standards as AI technologies.

Furthermore, although many decision-support tools do not incorporate AI, they can still perpetuate existing biases in healthcare and therefore also require regulatory oversight.

Maia Hightower, CEO of Equality AI, pointed out the regulatory challenges posed by the vast array of clinical decision-support tools integrated into electronic health records and used in daily practice. However, she reaffirmed the critical need for such oversight to ensure transparency and reduce discrimination.

Looking ahead, Hightower acknowledged that regulating clinical risk scores may prove especially challenging under the incoming administration, given its emphasis on deregulation and its opposition to specific nondiscrimination policies within the Affordable Care Act.

Photo credit & article inspired by: Massachusetts Institute of Technology