When someone suggests you should “know your limits,” they usually mean something like exercising in moderation. For a robot, the same advice means understanding its operational constraints, which is crucial for performing tasks effectively and safely. Imagine a robot asked to clean a kitchen without any knowledge of its environment. How would it form a plan that gets the room clean while working around obstacles? Large language models (LLMs) can draft plausible plans, but on their own they may overlook the robot’s specific physical constraints, such as how far it can reach and how close it is to nearby objects, and the result can be a mess.

To address this challenge, researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed an approach that combines vision models with LLMs, so that a robot can see its surroundings and account for its own limitations. An LLM drafts a plan, and the plan is then checked in a simulated environment to verify that it is feasible. If the proposed sequence of actions cannot be carried out, the model generates a new plan, repeating until it finds one the robot can execute.
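The propose-and-check loop described above can be illustrated with a small sketch. This is not the CSAIL implementation: `propose_square` is a hypothetical random generator standing in for the LLM, `is_feasible` is a toy reach test standing in for the simulator, and `ARM_REACH` is an assumed value chosen only for illustration.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Waypoint = Tuple[float, float]
Plan = List[Waypoint]

ARM_REACH = 1.0  # hypothetical maximum reach of the arm, in meters


def is_feasible(plan: Plan, reach: float = ARM_REACH) -> bool:
    """Toy stand-in for the simulator: every waypoint must lie
    within `reach` of the robot base at the origin."""
    return all(math.hypot(x, y) <= reach for x, y in plan)


def propose_square(rng: random.Random) -> Plan:
    """Toy stand-in for the LLM: propose the four corners of a
    square with a random center and side length."""
    cx, cy = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    half = rng.uniform(0.1, 0.5) / 2
    return [
        (cx - half, cy - half),
        (cx + half, cy - half),
        (cx + half, cy + half),
        (cx - half, cy + half),
    ]


def plan_with_verification(
    propose: Callable[[random.Random], Plan],
    check: Callable[[Plan], bool],
    max_attempts: int = 50,
    seed: int = 0,
) -> Optional[Plan]:
    """Ask for candidate plans until one passes the check.
    Returns None if no candidate passes within max_attempts."""
    rng = random.Random(seed)
    for _ in range(max_attempts):
        candidate = propose(rng)
        if check(candidate):
            return candidate
    return None


plan = plan_with_verification(propose_square, is_feasible, max_attempts=200)
print("feasible plan found:", plan is not None)
```

The loop is capped by `max_attempts` so that an impossible task ends with `None` rather than running forever. Whether and how the real system bounds its search is not stated in the article.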

This process, known as “Planning for Robots via Code for Continuous Constraint Satisfaction” (PRoC3S), allows robots to handle diverse tasks including writing letters, drawing shapes, and sorting objects. In future applications, PRoC3S could empower robots to tackle more complex home tasks, such as preparing breakfast, which may consist of multiple steps and require agility in a dynamic setting.

“While LLMs and traditional robotics systems, like task and motion planners, have limitations when operating independently, our approach leverages their combined strengths. This synergy enables more effective problem-solving for open-ended tasks,” explains PhD student Nishanth Kumar SM ’24, co-lead author of the PRoC3S study. “We create realistic simulations of a robot’s environment, allowing effective reasoning about actionable steps in long-term plans.”

The team recently presented their findings at the Conference on Robot Learning (CoRL) held in Munich, Germany.

The researchers use an LLM that is pre-trained on extensive internet data. Before asking PRoC3S to perform a task, the team gives the model sample tasks, such as drawing a square, that are related to the target task (for example, drawing a star). Each sample includes a description of the activity, a long-horizon action plan, and relevant details about the robot’s environment.
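As a rough illustration of how such sample tasks might be packaged for a model, the sketch below assembles a few-shot prompt from worked examples followed by the new task. The `Example` fields mirror the three ingredients named above, but the prompt layout, the field names, and the `build_prompt` helper are all assumptions; the article does not describe the actual prompt format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    """One worked sample task (hypothetical structure)."""
    task: str         # description of the activity
    environment: str  # relevant details about the surroundings
    plan: str         # the action plan that solves the task


def build_prompt(examples: List[Example], new_task: str, new_environment: str) -> str:
    """Concatenate worked examples, then the new task with an
    empty plan slot for the model to complete."""
    parts = [
        f"Task: {ex.task}\nEnvironment: {ex.environment}\nPlan: {ex.plan}"
        for ex in examples
    ]
    parts.append(f"Task: {new_task}\nEnvironment: {new_environment}\nPlan:")
    return "\n\n".join(parts)


square = Example(
    task="draw a square",
    environment="flat table, pen held in gripper",
    plan="move the pen to each of the four corners in turn",
)
print(build_prompt([square], "draw a star", "flat table, pen held in gripper"))
```

Ending the prompt on an open `Plan:` line is a common few-shot convention that invites the model to continue in the same format as the samples.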

So how effective is this method? In simulations, PRoC3S successfully completed tasks like drawing stars and letters 80% of the time. It also stacked digital blocks and placed items accurately, such as fruits on a plate. Across these trials, the CSAIL approach consistently outperformed comparable approaches, including “LLM3” and “Code as Policies.”

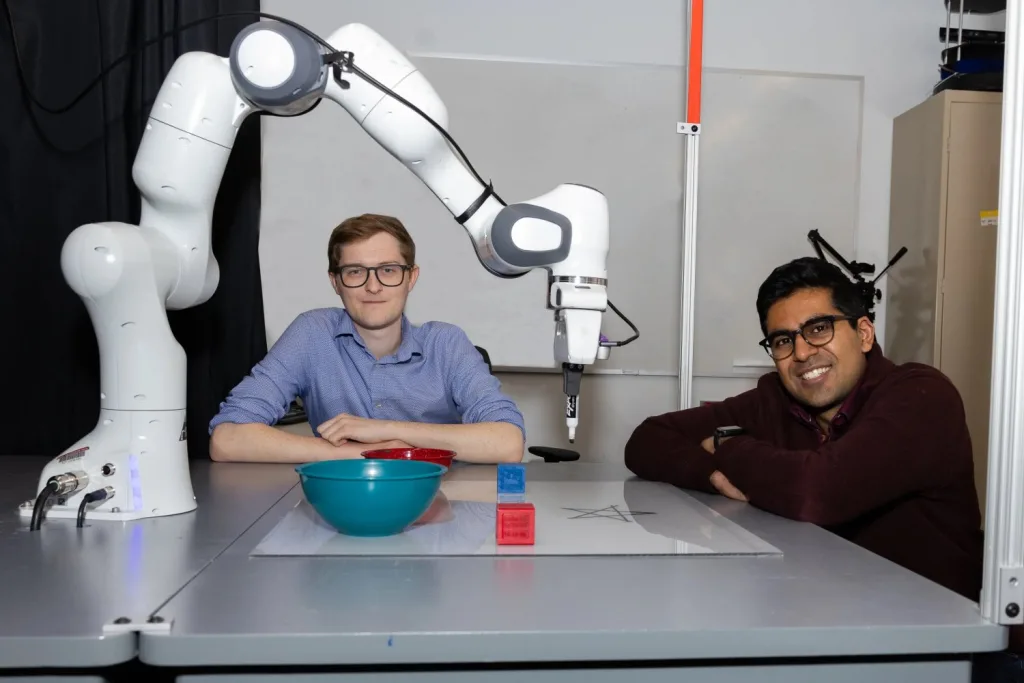

Taking it a step further, the CSAIL team implemented their technique on a physical robotic arm, directing it to arrange blocks in a straight line, match colored blocks to corresponding bowls, and manipulate objects around a table’s center.

Kumar and co-lead author Aidan Curtis SM ’23 see promise in using LLMs to produce safe and reliable action plans. They envision home robots that can take a general request, like “bring me some chips,” and work out the specific steps needed to fulfill it. PRoC3S could let a robot test plans in a simulated environment before executing them in the real world, so that it can deliver your favorite snacks without mishap.

Looking ahead, the researchers plan to improve their results with more advanced physics simulation and to scale the approach to more intricate tasks. They also intend to adapt PRoC3S for mobile robots, including quadrupeds, for tasks like walking and scanning their surroundings.

“Using foundation models like ChatGPT to guide robot behaviors can sometimes result in unsafe or inaccurate actions. PRoC3S addresses this issue by employing high-level guidance while utilizing AI methodologies to ensure safe and verified outcomes. This blend of planning and data-driven techniques could be pivotal in developing robots capable of understanding and executing a broader variety of tasks,” notes Eric Rosen of The AI Institute, who was not involved in the research.

Kumar and Curtis’ team also includes MIT affiliates: undergraduate researcher Jing Cao and professors Leslie Pack Kaelbling and Tomás Lozano-Pérez from the Department of Electrical Engineering and Computer Science. Their work received support from several institutions, including the National Science Foundation and the Air Force Office of Scientific Research.

Photo credit & article inspired by: Massachusetts Institute of Technology