As AI systems find their way into critical healthcare settings, concerns about their accuracy are growing. These technologies can generate misleading information, exhibit biases, or fail in unexpected ways, with potentially harmful consequences for patients and healthcare providers alike.

In a recent commentary published in Nature Computational Science, MIT Associate Professor Marzyeh Ghassemi and Boston University Associate Professor Elaine Nsoesie argue that AI systems in healthcare need responsible-use labels. Such labels could mirror the safety warnings that the U.S. Food and Drug Administration (FDA) requires on prescription drugs.

MIT News interviewed Ghassemi to discuss the importance of these labels, the vital information they should contain, and potential strategies for implementing them.

Q: Why are responsible-use labels essential for AI systems in healthcare?

A: Healthcare presents a unique challenge. Doctors often rely on technologies and treatments they do not fully understand. That gap can be fundamental, as with the mechanism of action of acetaminophen, or it can simply reflect the limits of a physician's specialty. We don't expect doctors to service MRI machines, for example. Instead, we rely on certification systems run by the FDA and other federal bodies to ensure that medical devices and medications are used safely.

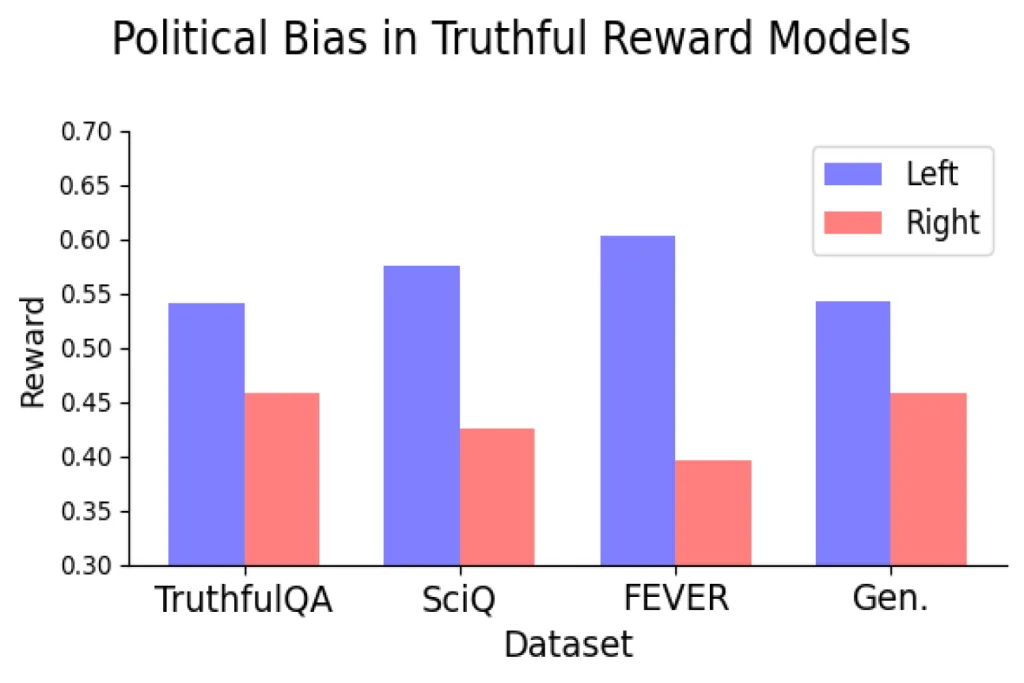

Medical devices also come with service agreements: if an MRI machine is miscalibrated, a technician from the manufacturer will fix it. Prescription medications benefit from ongoing surveillance and reporting systems that track adverse effects and other problems. Unfortunately, many predictive models and algorithms, whether AI-based or not, bypass these approval and monitoring processes. This is especially concerning because prior research has shown that predictive models need rigorous evaluation and oversight, and studies of generative AI models show that their outputs can be inappropriate, unreliable, or biased. Without proper surveillance of model predictions, it becomes increasingly difficult to detect problems with the models that healthcare institutions already use.

Q: Your article outlines key elements of responsible-use labels for AI, akin to FDA prescription labels, including intended usage, ingredients, potential side effects, etc. What critical information should these labels deliver?

A: A responsible-use label must clearly state the time, place, and manner of a model's intended use. Users should understand that a model was trained on data from a specific period. For example, it matters whether that data covers the COVID-19 pandemic, because clinical practices shifted dramatically during that time. This is why we call for disclosing the model's "ingredients" and the studies that have been completed.

Location also plays a critical role. Previous research demonstrates that models trained in one locale often perform poorly when applied elsewhere. Understanding where data comes from and how a model was optimized for a specific population can help inform users of “potential side effects,” since different locations may exhibit unique health dynamics.

When a model is designed to predict a specific outcome, knowing the time frame and geography of its training data can help users make informed decisions about deployment. Generative models, however, are highly versatile and can be repurposed for many tasks. In these cases, details about the "conditions of labeling" and the distinction between "approved" and "unapproved" uses become vital. For instance, if a model has been evaluated for generating prospective billing codes from clinical notes, it may be biased toward overbilling for certain conditions. Users should therefore be wary of applying that same model to decide which patients are referred to specialists. This flexibility is why more comprehensive guidelines on how models should be used are needed.

In general, our advice is to build the best models possible with the tools available, but to pair them with ample disclosure. Just as we accept that no medication is perfect and every drug carries some risk, we should adopt the same mindset toward AI models. Every model, whatever technology it is built on, has limitations and should be approached critically.

Q: If responsible-use labels were to be created, who would oversee the labeling process, and how would regulation and enforcement be implemented?

A: If a model isn’t intended for practical application, the disclosures made for a high-quality research publication suffice. However, once a model is meant for deployment in healthcare settings, developers must perform an initial labeling based on established frameworks. Validation of these statements is critical before deployment; in high-stakes fields like healthcare, various agencies from the Department of Health and Human Services could play a role.

For developers, knowing that they must label a system's limitations encourages careful deliberation throughout development. A developer who expects to disclose the population a model's data came from might, for instance, think twice about training it exclusively on dialogue from male users.

Considering factors such as the demographics of the people data was collected from, the time period it covers, the sample size, and the inclusion criteria can reveal problems that may arise at deployment.

Photo credit & article inspired by: Massachusetts Institute of Technology