Artificial intelligence (AI) cannot be equitable if a single entity builds and controls the models, along with the data that goes into them. Today’s state-of-the-art AI models comprise billions of parameters and must be trained and tuned extensively to perform well in specific applications. That puts the most powerful AI tools out of reach for many individuals and businesses.

MosaicML started with a clear mission: to democratize access to AI models. Founded by Jonathan Frankle PhD ’23 and MIT Associate Professor Michael Carbin, the company built a platform that lets users train, improve, and monitor open-source models using their own data. The company also built its own open-source models using graphics processing units (GPUs) from Nvidia.

This approach made deep learning, then still a nascent field, accessible to a much wider range of organizations, especially as excitement around generative AI and large language models (LLMs) surged following the release of ChatGPT. It also made MosaicML a valuable complement to data management companies committed to helping organizations use their data without handing it over to larger AI firms.

In 2023, that mission led to the acquisition of MosaicML by Databricks, a global data storage, analytics, and AI company that works with major organizations. Since the acquisition, the combined companies have released one of the highest-performing open-source LLMs to date. Named DBRX, the model has set new benchmarks in tasks such as reading comprehension, general knowledge questions, and logic puzzles.

DBRX has also quickly gained a reputation as one of the fastest open-source LLMs available, and it has proven particularly useful at large enterprises.

Beyond the model itself, Frankle says DBRX is significant because it was built using Databricks tools. That means any of Databricks’ customers can achieve similar performance with their own models, which could accelerate the impact of generative AI.

“It’s exhilarating to witness the community harnessing this technology,” Frankle shares. “As a researcher, that’s what excites me most. The model is just a starting point; the true enchantment unfolds with all the creative applications the community generates on top of it.”

Enhancing Algorithm Efficiency

Frankle, who earned both his bachelor’s and master’s degrees in computer science from Princeton University, arrived at MIT to pursue his PhD in 2016. Initially uncertain about his specialization, he ultimately gravitated towards deep learning, an area that, back then, did not attract the widespread enthusiasm it does today. Deep learning had been under study for decades, yet had not yielded substantial breakthroughs.

“No one anticipated that deep learning would elevate to such prominence,” Frankle reflects. “Experts recognized its potential, but terms like ‘large language model’ and ‘generative AI’ were still in the future.”

Excitement in the field took off after Google researchers published a 2017 paper introducing the transformer architecture, which proved unexpectedly effective at language translation and a range of other applications, including content generation.

In 2020, Naveen Rao, an eventual Mosaic co-founder, reached out to Frankle and Carbin after reading a paper they had co-authored that described a method for shrinking deep learning models without sacrificing performance. Rao proposed starting a company together. They were later joined by Hanlin Tang, who had worked with Rao at a previous AI startup that was acquired by Intel.

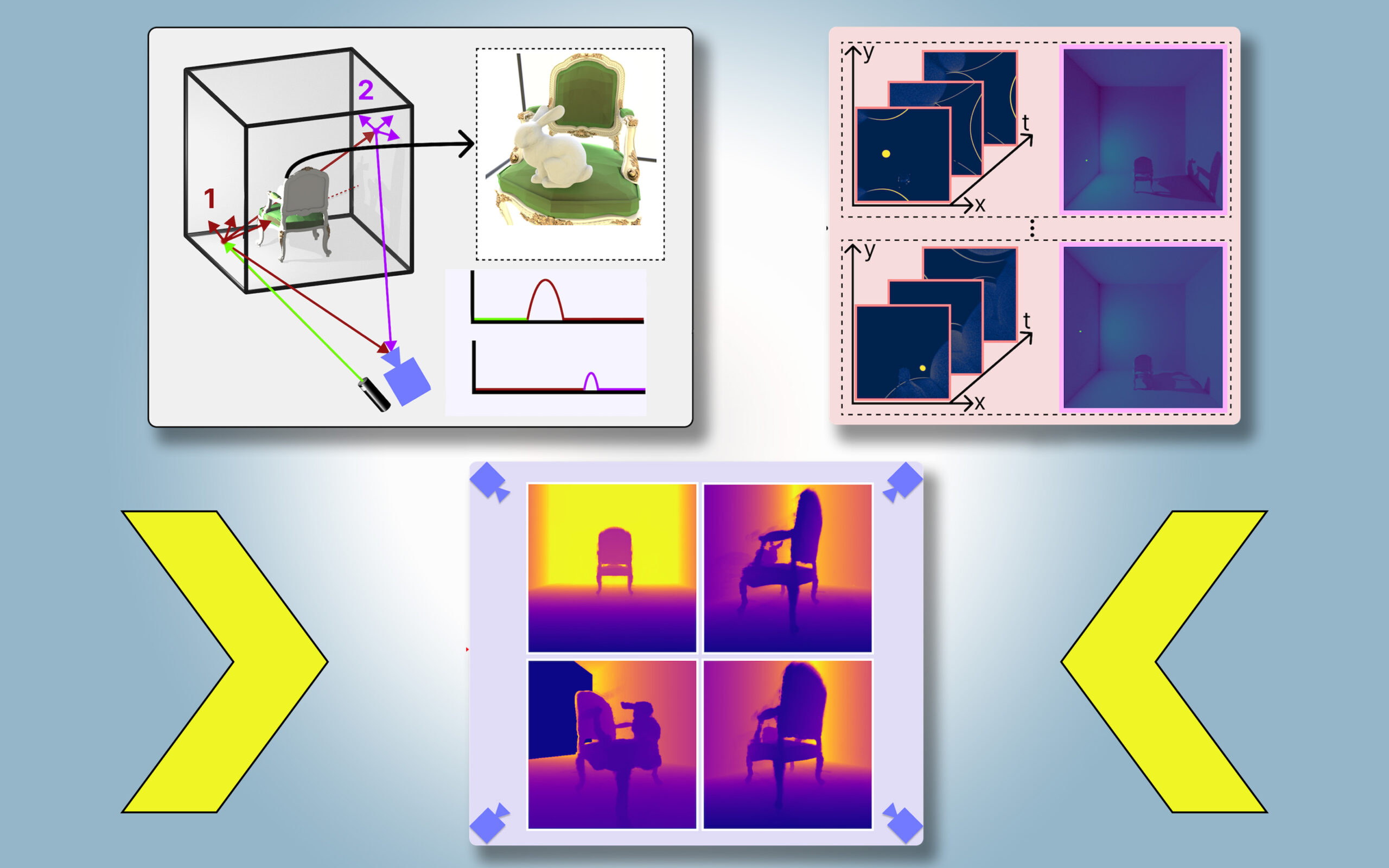

The founders began by studying techniques for speeding up the training of AI models, eventually combining several of them to demonstrate a model that could perform image classification four times faster than had previously been achieved.

“The secret? There was no singular trick,” Frankle explains. “We implemented about 17 different adjustments to the training process, each providing incremental improvements that collectively resulted in remarkable speed gains. That’s been the essence of Mosaic.”

After demonstrating that their techniques made models more efficient, the team released an open-source large language model in 2023, along with an open-source library of its methods. They also built visualization tools to help developers map out different experimental options for training and running models.

MIT’s E14 Fund supported Mosaic’s Series A funding round and provided invaluable guidance early in the journey. Mosaic’s evolution has enabled a new wave of companies to train their own generative AI models.

“Mosaic’s mission promotes democratization and embraces an open-source ethos,” Frankle says. “This principle has been at the core of my aspirations since my PhD days when I lacked access to GPUs. I wished for universal participation. Why shouldn’t everyone have the chance to engage with these technologies and contribute to scientific progress?”

Pioneering Open Source Innovation

Meanwhile, Databricks had been working to give its own users access to AI models. Those efforts culminated in its acquisition of MosaicML in 2023 for a reported $1.3 billion.

“At Databricks, we recognized a founding team of scholars akin to ourselves,” Frankle notes. “They are proficient in technology, and we bring expertise in machine learning. Both elements are essential; you cannot have one without the other. This synergy formed an ideal partnership.”

In March 2024, Databricks released DBRX, which gave the open-source community and companies building their own LLMs capabilities previously limited to proprietary models.

“DBRX demonstrates that you can build the world’s leading open-source LLM using Databricks technology,” Frankle affirms. “For enterprises, the possibilities are limitless.”

Frankle says the team at Databricks has been encouraged by using DBRX internally across a wide range of tasks.

“It starts off strong, and with some minor adjustments, it exceeds the performance of proprietary models,” he notes. “While we can’t outdo GPT in every scenario, we recognize that not every task requires a universal solution. Each organization wants to excel in particular areas, and we can tailor this model for those specific needs.”

As Databricks continues to push into new frontiers in AI, and as competitors invest heavily in the field, Frankle remains hopeful that the industry will come to see open source as the best path forward.

“I have faith in scientific progress, and I’m thrilled to be part of such an exciting era for our field,” Frankle says. “I also believe in the value of openness, trusting that others will champion it as we have. Our achievements stem from robust science and the willingness to share knowledge.”

Photo credit & article inspired by: Massachusetts Institute of Technology