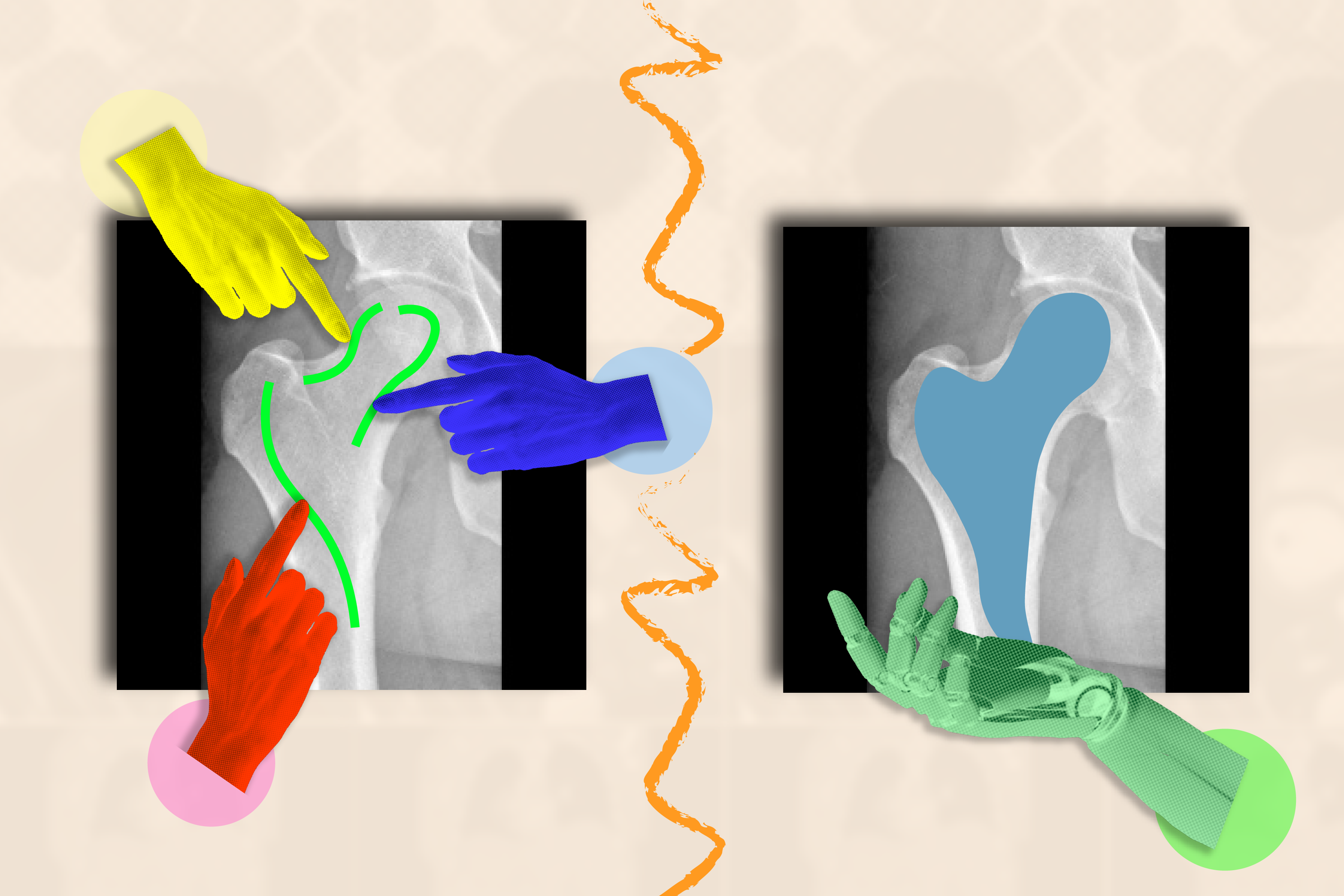

At first glance, medical images such as MRIs or X-rays might seem like a confusing assembly of shadowy black-and-white shapes. Identifying where one structure ends and another begins can be quite challenging, especially for those untrained in medical imaging.

However, advanced AI systems can make segmenting important areas, such as tumors or other abnormalities, far easier. These tools can quickly outline regions of interest, saving doctors and biomedical professionals the hours they would otherwise spend tracing anatomy by hand across many images.

The catch is that researchers and clinicians must label thousands of images by hand to train these AI systems. For example, to teach a supervised model how the shape of the cerebral cortex varies from brain to brain, someone must first annotate the cortex in many MRI scans.

Aiming to simplify this cumbersome data collection process, a multidisciplinary team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), Massachusetts General Hospital (MGH), and Harvard Medical School has introduced ScribblePrompt, an interactive framework that can rapidly segment medical images, including images the system has never encountered before.

Instead of relying on human annotators for every image, the team simulated how users would annotate more than 50,000 scans, including MRIs, ultrasounds, and other biological images, covering structures in the eyes, cells, brains, bones, and skin. Algorithms mimicked how people scribble and click on different regions of each image. The team also used superpixel algorithms, which find parts of an image with similar values, to identify potential new regions of interest for medical research.
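The article does not spell out how these interactions were simulated. As a rough illustration only, the sketch below assumes images and masks are plain nested lists, uses simple region growing as a stand-in for a real superpixel method (the team's actual algorithm is not named here), samples positive clicks inside a target region and negative clicks outside it, and draws a "scribble" as a random walk that stays inside the region. All function names are hypothetical.

```python
import random
from collections import deque

# Hypothetical helpers: images and masks are plain nested lists of numbers.
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def region_grow(image, seed, tol):
    """Label 4-connected pixels whose value is within `tol` of the seed pixel.

    A crude stand-in for a real superpixel method such as SLIC: it groups
    neighboring pixels with similar values into one candidate region.
    """
    h, w = len(image), len(image[0])
    ref = image[seed[0]][seed[1]]
    mask = [[0] * w for _ in range(h)]
    mask[seed[0]][seed[1]] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr][nc]
                    and abs(image[nr][nc] - ref) <= tol):
                mask[nr][nc] = 1
                queue.append((nr, nc))
    return mask


def pixels(mask, value):
    """All (row, col) coordinates where the mask equals `value`."""
    return [(r, c) for r, row in enumerate(mask)
            for c, v in enumerate(row) if v == value]


def simulate_clicks(mask, n_pos, n_neg, rng):
    """Positive clicks fall inside the target region, negative clicks outside."""
    return rng.sample(pixels(mask, 1), n_pos), rng.sample(pixels(mask, 0), n_neg)


def simulate_scribble(mask, length, rng):
    """A random walk that stays inside the region, imitating a hand-drawn stroke."""
    h, w = len(mask), len(mask[0])
    r, c = rng.choice(pixels(mask, 1))
    stroke = [(r, c)]
    for _ in range(length - 1):
        moves = [(r + dr, c + dc) for dr, dc in NEIGHBORS
                 if 0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]]
        if not moves:  # isolated pixel: stop early
            break
        r, c = rng.choice(moves)
        stroke.append((r, c))
    return stroke


# Toy example: a bright 2x3 block on a dark background.
image = [
    [0, 0, 0, 0, 0],
    [0, 9, 9, 9, 0],
    [0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0],
]
rng = random.Random(0)  # seeded so the simulation is reproducible
label = region_grow(image, seed=(1, 1), tol=1)
pos, neg = simulate_clicks(label, n_pos=2, n_neg=2, rng=rng)
stroke = simulate_scribble(label, length=5, rng=rng)
```

Because every simulated prompt is derived from a known mask, each synthetic example comes with its correct answer for free, which is what lets a model be trained on interactions without a human drawing any of them.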

“AI holds immense potential for analyzing images and high-dimensional data, enhancing productivity for humans,” states MIT PhD student Hallee Wong SM ’22, lead author of a recent paper on ScribblePrompt. “Our goal is to support, not replace, medical professionals through an interactive system. ScribblePrompt enables doctors to concentrate on more compelling aspects of their analysis, boasting a speed and accuracy that surpass other interactive segmentation methods. For instance, it reduces annotation time by 28% compared to Meta’s Segment Anything Model (SAM).”

ScribblePrompt’s interface is designed for simplicity: users scribble across or click on the area they want segmented, and the tool highlights the entire structure or the background, as requested. For example, a user can click on individual veins in a retinal scan, and ScribblePrompt will mark the selected structure.

ScribblePrompt also adapts to user feedback. To highlight a kidney in an ultrasound, for instance, a user can draw a bounding box and then add scribbles to capture any edges the system missed. Users can also refine a segment with “negative scribbles” that exclude unwanted regions.
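The article does not say how ScribblePrompt represents these prompts internally. One common design in interactive segmentation, assumed here purely for illustration, is to rasterize each prompt type (positive clicks or scribbles, negative scribbles, bounding box) into its own image-sized map and feed the maps to the network as extra input channels next to the image. The model itself is omitted, and `encode_prompts` and `stack_inputs` are hypothetical names.

```python
def encode_prompts(h, w, positive=(), negative=(), box=None):
    """Rasterize user prompts into h x w maps of 0s and 1s.

    positive / negative: (row, col) pixels from clicks or scribbles.
    box: optional (r0, c0, r1, c1) with inclusive corners.
    """
    maps = {name: [[0] * w for _ in range(h)]
            for name in ("positive", "negative", "box")}
    for r, c in positive:
        maps["positive"][r][c] = 1
    for r, c in negative:
        maps["negative"][r][c] = 1
    if box is not None:
        r0, c0, r1, c1 = box
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                maps["box"][r][c] = 1
    return maps


def stack_inputs(image, maps):
    """Stack the image and prompt maps channel-first, as a model's input."""
    return [image, maps["positive"], maps["negative"], maps["box"]]


image = [[0] * 6 for _ in range(6)]  # placeholder 6x6 ultrasound slice
kidney_box = (1, 1, 4, 4)

# Round 1: the user only draws a bounding box.
round1 = stack_inputs(image, encode_prompts(6, 6, box=kidney_box))

# Round 2: the prediction missed the bottom edge, so the user adds a positive
# scribble there and a negative scribble over a region to exclude.
round2 = stack_inputs(image, encode_prompts(
    6, 6,
    positive=[(4, 1), (4, 2), (4, 3)],
    negative=[(1, 4), (2, 4)],
    box=kidney_box,
))
```

Keeping "include" and "exclude" inputs in separate channels lets a network tell them apart without special-casing, and each new correction simply produces a fresh set of maps for the next prediction.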

These interactive, self-correcting features made ScribblePrompt the preferred tool among neuroimaging researchers at MGH in a user study: 93.8% of participants favored the MIT system over the SAM baseline for refining segmentations in response to user corrections, and 87.5% preferred ScribblePrompt for click-based edits.

ScribblePrompt was trained on simulated user interactions with 54,000 images from 65 datasets, including scans of the eyes, thorax, spine, and brain. The training data spanned 16 types of medical images, among them CT scans, MRIs, ultrasounds, and even photographs.

“Many existing methods struggle to respond effectively to user scribbles because simulating these interactions in training can be complex. Our synthetic segmentation tasks allowed ScribblePrompt to focus on diverse inputs,” explains Wong. “We aimed to create a foundational model trained on a broad spectrum of data so it could generalize to new images and tasks.”

After training on this data, the team evaluated ScribblePrompt on 12 new datasets. Although it had never seen these images before, it outperformed four existing methods, working more efficiently and more accurately predicting the exact regions users wanted to highlight.
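The article does not name the accuracy measure behind this comparison. For a concrete sense of what "accurate" means for a segmentation, a widely used score is the Dice coefficient, which compares a predicted mask A with the intended mask B as 2|A∩B| / (|A| + |B|): 1 means a perfect match and 0 means no overlap. The pure-Python sketch below assumes binary masks stored as nested lists; it is illustrative and not taken from the study.

```python
def dice(pred, target):
    """Dice overlap 2|A∩B| / (|A| + |B|) between two binary masks (nested lists)."""
    inter = sum(1 for pr, tr in zip(pred, target)
                for p, t in zip(pr, tr) if p and t)
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if total == 0 else 2.0 * inter / total  # two empty masks agree


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
score = dice(pred, target)  # 2 * 2 / (3 + 3) = 0.666...
```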

“Segmentation is the most widely practiced task in biomedical image analysis, critical for both clinical procedures and research—thus making it both a diverse and essential undertaking,” notes Adrian Dalca SM ’12, PhD ’16, CSAIL research scientist and assistant professor at MGH and Harvard Medical School. “ScribblePrompt is deliberately designed to be practically beneficial for clinicians and researchers, substantially speeding up this critical step.”

According to Bruce Fischl, a Harvard Medical School professor in radiology and MGH neuroscientist not involved with the study, “Most segmentation algorithms rely heavily on our ability to annotate images manually. In medical imaging, this challenge is even greater due to the typically three-dimensional nature of our images. ScribblePrompt significantly enhances both the speed and accuracy of manual annotation by training a network on interactions that resemble real-life image annotations. This leads to a user-friendly interface that improves productivity in engaging with imaging data.”

Wong and Dalca collaborated with two other CSAIL affiliates, John Guttag, the Dugald C. Jackson Professor of EECS at MIT, and PhD student Marianne Rakic SM ’22. Their work was supported by organizations including Quanta Computer Inc., the Broad Institute’s Eric and Wendy Schmidt Center, Wistron Corp., and the National Institute of Biomedical Imaging and Bioengineering of the NIH, with additional hardware support from the Massachusetts Life Sciences Center.

Wong and her colleagues will present their results at the 2024 European Conference on Computer Vision. The work was also featured in an oral presentation at the DCAMI workshop at the Computer Vision and Pattern Recognition Conference earlier this year, where it received the Bench-to-Bedside Paper Award for ScribblePrompt’s potential clinical impact.

Photo credit & article inspired by: Massachusetts Institute of Technology