Large language models (LLMs) have made significant strides in helping with programming and robotics, but they still fall well short on complex reasoning tasks. Unlike humans, these systems struggle to learn new concepts, which leaves them without well-formed high-level abstractions: essentially shortcuts that capture complex ideas in simpler terms. This lack of abstraction is a key factor keeping LLMs from tackling more sophisticated challenges.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have found a rich source of abstractions in natural language. At the upcoming International Conference on Learning Representations, the team will present three frameworks showing how everyday words can give language models useful context, leading to improvements in code synthesis, AI planning, and robotic navigation.

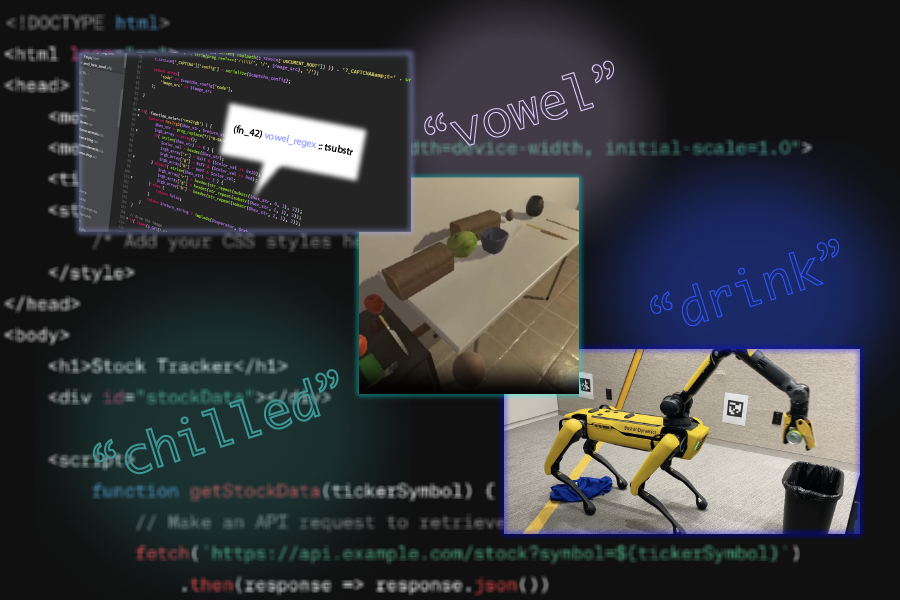

The three frameworks are LILO (Library Induction from Language Observations), which synthesizes, compresses, and documents code; Ada (Action Domain Acquisition), which tackles sequential decision-making; and LGA (Language-Guided Abstraction), which helps robots better understand their environments. Each takes a neurosymbolic approach, combining neural networks with program-like logical components.

LILO: A Neurosymbolic Framework for Code

While LLMs can quickly generate code for simple tasks, they struggle to build the kind of extensive software libraries that human developers write. To improve their coding abilities, AI models need to refactor: to reorganize code into succinct, reusable libraries. Building on earlier work such as the MIT-led Stitch algorithm, which automatically identifies abstractions, the CSAIL team created LILO, which combines this refactoring approach with LLMs.

LILO’s emphasis on natural language lets it handle tasks that require human-like commonsense knowledge, such as identifying and removing all vowels from a string of code. In tests, LILO outperformed both standalone LLMs and DreamCoder, a previous library-learning algorithm, suggesting that it builds a deeper understanding of the words in its prompts. These results point to how LILO could assist with tasks such as writing programs that manipulate documents or drawing 2D graphics.

“Language models thrive on functions named in natural language, making our abstractions more interpretable and boosting performance,” says Gabe Grand, an MIT PhD student and the lead author of this research. Given a task, LILO first uses an LLM to quickly propose solutions based on its training data, then searches more exhaustively for other solutions. Next, Stitch efficiently identifies common structures in the code and extracts useful abstractions, which LILO automatically names and documents. The result is simpler programs that the system can reuse to solve more complex tasks.
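The compression step described above can be illustrated with a toy example. This is a minimal sketch, not Stitch or LILO: it assumes programs in a hypothetical Logo-like language written as nested Python tuples, finds the repeated subtree whose extraction saves the most nodes, and replaces every copy with a named abstraction. Real library learners also extract abstractions with parameters, whereas this one only extracts exact repeats, and the name `draw_square` is hard-coded where LILO would ask an LLM to propose a name and documentation. All function names here are hypothetical.

```python
from collections import Counter
from typing import Iterator, List, Optional, Tuple, Union

# Hypothetical representation: a program is a nested tuple (operator, arg1, arg2, ...);
# leaves are str or int.
Expr = Union[str, int, Tuple]


def subtrees(expr: Expr) -> Iterator[Tuple]:
    """Yield every compound subexpression of expr, including expr itself."""
    if isinstance(expr, tuple):
        yield expr
        for child in expr[1:]:  # expr[0] is the operator name, not a child
            yield from subtrees(child)


def size(expr: Expr) -> int:
    """Count the nodes in an expression tree (the operator counts as one node)."""
    if isinstance(expr, tuple):
        return 1 + sum(size(child) for child in expr[1:])
    return 1


def best_abstraction(programs: List[Expr]) -> Optional[Tuple]:
    """Return the repeated subtree whose extraction saves the most nodes, if any."""
    counts = Counter(t for p in programs for t in subtrees(p))
    repeated = [(t, n) for t, n in counts.items() if n > 1 and size(t) > 1]
    if not repeated:
        return None
    # Replacing n copies of a size-s subtree with a 1-node reference saves n * (s - 1) nodes.
    return max(repeated, key=lambda tn: tn[1] * (size(tn[0]) - 1))[0]


def rewrite(expr: Expr, target: Tuple, name: str) -> Expr:
    """Replace every occurrence of target in expr with the abstraction's name."""
    if expr == target:
        return name
    if isinstance(expr, tuple):
        return (expr[0],) + tuple(rewrite(child, target, name) for child in expr[1:])
    return expr


square = ("repeat", 4, ("seq", ("forward", 10), ("left", 90)))
programs = [
    ("seq", square, ("forward", 20)),
    ("seq", ("left", 45), square),
    ("repeat", 3, square),
]

library = {}
target = best_abstraction(programs)
# LILO would have an LLM propose this name and a docstring; here it is hard-coded.
library["draw_square"] = target
compressed = [rewrite(p, target, "draw_square") for p in programs]

print(sum(size(p) for p in programs), "->", sum(size(p) for p in compressed))  # 29 -> 11
```

The savings score `n * (s - 1)` favors the whole square over its smaller repeated pieces, which mirrors the general goal of compression-based library learning: prefer abstractions that shrink the overall corpus the most.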

Currently, LILO works in domain-specific programming languages such as Logo, a language developed at MIT to teach programming. Future work will aim to extend these automated refactoring methods to general-purpose languages like Python.

Ada: Guiding AI Task Planning with Natural Language

Just as they do in programming, LLMs struggle to automate multi-step tasks such as cooking breakfast. Humans naturally abstract their knowledge into reusable steps, but LLMs find it hard to form flexible plans. Named after Ada Lovelace, often called the first computer programmer, the Ada framework addresses this by building libraries of useful plans for tasks in virtual environments.

Ada trains on a set of possible tasks and their natural language descriptions, from which a language model proposes action abstractions. A human operator then scores and filters these proposals into a library, so that the best actions can be combined into structured plans for different tasks.
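To make the propose, filter, and plan loop concrete, here is a minimal sketch built on assumptions the article does not state: action abstractions are modeled as STRIPS-style operators with preconditions and add/delete effects, the LLM's proposals are hard-coded, a lambda stands in for the human reviewer, and a plain breadth-first search plays the role of the planner. The names `Operator`, `build_library`, and `plan`, and the kitchen facts, are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class Operator:
    """A STRIPS-style action abstraction (an assumption made for this sketch)."""
    name: str
    pre: FrozenSet[str]     # facts that must hold before the action
    add: FrozenSet[str]     # facts the action makes true
    delete: FrozenSet[str]  # facts the action makes false


def op(name: str, pre: Iterable[str] = (), add: Iterable[str] = (),
       delete: Iterable[str] = ()) -> Operator:
    return Operator(name, frozenset(pre), frozenset(add), frozenset(delete))


def build_library(proposals: List[Operator],
                  approve: Callable[[Operator], bool]) -> List[Operator]:
    """Keep only the proposed abstractions that the reviewer approves."""
    return [o for o in proposals if approve(o)]


def plan(start: Iterable[str], goal: Iterable[str], library: List[Operator],
         max_depth: int = 10) -> Optional[List[str]]:
    """Breadth-first search for the shortest sequence of operators reaching goal."""
    start_state, goal_state = frozenset(start), frozenset(goal)
    frontier = deque([(start_state, [])])
    seen = {start_state}
    while frontier:
        state, steps = frontier.popleft()
        if goal_state <= state:
            return steps
        if len(steps) >= max_depth:
            continue
        for o in library:
            if o.pre <= state:
                nxt = (state - o.delete) | o.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [o.name]))
    return None


# Hypothetical proposals standing in for LLM output on a "cook breakfast" task.
proposals = [
    op("open_fridge", pre={"fridge_closed"}, add={"fridge_open"}, delete={"fridge_closed"}),
    op("take_egg", pre={"fridge_open", "egg_in_fridge"}, add={"holding_egg"},
       delete={"egg_in_fridge"}),
    op("turn_on_stove", add={"stove_on"}),
    op("fry_egg", pre={"holding_egg", "stove_on"}, add={"egg_cooked"}, delete={"holding_egg"}),
    op("conjure_cooked_egg", add={"egg_cooked"}),  # a bad proposal that skips real steps
]

# A lambda stands in for the human reviewer who rejects implausible abstractions.
library = build_library(proposals, approve=lambda o: o.name != "conjure_cooked_egg")

start = {"fridge_closed", "egg_in_fridge"}
print(plan(start, {"egg_cooked"}, library))
# ['open_fridge', 'take_egg', 'turn_on_stove', 'fry_egg']
print(plan(start, {"egg_cooked"}, proposals))
# ['conjure_cooked_egg']
```

Without the filtering step, the planner takes the bogus `conjure_cooked_egg` shortcut, which illustrates why vetting proposed abstractions before they enter the library matters.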

“Historically, LLMs face challenges with complex tasks due to difficulties in abstract reasoning,” explains Lio Wong, the lead researcher for Ada. “By merging traditional tools from software development with LLMs, we’re tackling these intricate problems.”

When the researchers integrated GPT-4 into Ada, the system completed more tasks in a kitchen simulator and in Mini Minecraft than the “Code as Policies” baseline, improving task accuracy by 59% and 89%, respectively. The team hopes to adapt these ideas to real-world problems, with Ada helping with household chores or supporting collaborative robots in a kitchen. They also plan to fine-tune Ada’s underlying LLM for better task planning and may combine it with robotics frameworks like LGA.

Language-Guided Abstraction: Helping Robots Understand Their Environments

Designed by Andi Peng and a team of MIT researchers, LGA helps machines interpret their surroundings more like humans do, filtering out unnecessary details in complex environments. Like the other two frameworks, it explores how natural language can yield useful abstractions.

In dynamic environments, a robot needs context to carry out a task. For example, when asked to fetch a bowl, it must work out which features of its surroundings matter. LGA uses a pre-trained language model to distill this information into task-specific abstractions, helping the robot grasp the requested item from a simple request.
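As a rough illustration of why this kind of abstraction helps, the sketch below hides every object the request does not mention before a deliberately naive policy acts. It is an assumption-heavy toy: LGA queries a pre-trained language model to decide which features matter, whereas here a hypothetical `keyword_selector` simply matches object names against the request, and `nearest_object` is a made-up stand-in for a learned robot policy.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple

Position = Tuple[float, float]


def keyword_selector(task: str, names: List[str]) -> List[str]:
    """Hypothetical stand-in for the language model: keep objects named in the task."""
    words = set(task.lower().split())
    return [n for n in names if n.lower() in words]


def abstract_state(state: Dict[str, Position], task: str,
                   select: Callable[[str, List[str]], List[str]] = keyword_selector
                   ) -> Dict[str, Position]:
    """Drop every object that the selector judges irrelevant to the task."""
    keep = set(select(task, list(state)))
    return {name: pos for name, pos in state.items() if name in keep}


def nearest_object(robot: Position, state: Dict[str, Position]) -> Optional[str]:
    """Toy policy (hypothetical): head for whichever visible object is closest."""
    if not state:
        return None
    return min(state, key=lambda name: math.dist(robot, state[name]))


scene = {"bowl": (1.0, 2.0), "mug": (0.5, 0.5), "apple": (3.0, 1.0), "trash": (4.0, 4.0)}
robot = (0.0, 0.0)
task = "fetch the bowl"

print(nearest_object(robot, scene))                        # mug (distracted by clutter)
print(nearest_object(robot, abstract_state(scene, task)))  # bowl
```

Because `select` is a parameter, a function that prompts a real language model could be swapped in without changing the rest of the code.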

Previous approaches required a person to take extensive notes on each manipulation task, but LGA has a language model produce annotations similar to a human annotator’s in less time. With this method, robots carried out tasks ranging from retrieving fruit to disposing of waste. LGA’s ability to interpret environments could also be useful in settings such as autonomous vehicles and manufacturing robots.

“In robotics, we often overlook the need to refine our data for real-world utility,” Peng remarks. “By integrating computer vision with captions, we enable robots to cultivate valuable world knowledge.” The researchers are considering adding multimodal visualization tools to address the challenge of underspecified tasks.

The Future of AI: An Exciting Frontier

“Library learning is an exciting frontier in AI research, paving the way for compositional abstraction discovery,” notes Robert Hawkins, a professor at the University of Wisconsin-Madison. “While traditional methods have been computationally intensive, these recent studies present a viable route to produce interpretable and adaptive task libraries through interactive loops with symbolic search and planning algorithms.”

By building high-quality abstractions from natural language, these three neurosymbolic frameworks could help LLMs take on more complex problems and environments.

The senior MIT authors on these studies are Joshua Tenenbaum (LILO and Ada), Julie Shah (LGA), and Jacob Andreas (all three). Other contributors include MIT PhD students working on different aspects of each paper. LILO and Ada were supported by organizations including the MIT Quest for Intelligence and the U.S. Defense Advanced Research Projects Agency, while LGA was supported by the U.S. National Science Foundation and others.

Photo credit & article inspired by: Massachusetts Institute of Technology