At just five years old, Tomás Vega SM ’19 began experiencing a stutter that shaped his understanding of disabilities and sparked a passion for technology. “Using a keyboard and mouse became my lifelines,” Vega recalls. “They allowed me to express myself more fluently, transcending the limitations I faced. This experience fueled my fascination with human augmentation and cyborgs, instilling a deep sense of empathy in me. We all possess empathy, but how we apply it varies with our individual journeys.”

Vega started programming at age 12. By high school, he was helping people with disabilities, including hand impairments and multiple sclerosis, manage their day-to-day activities. He went on to study at the University of California at Berkeley and then at MIT, where he focused on building technologies that help people with disabilities live more independently.

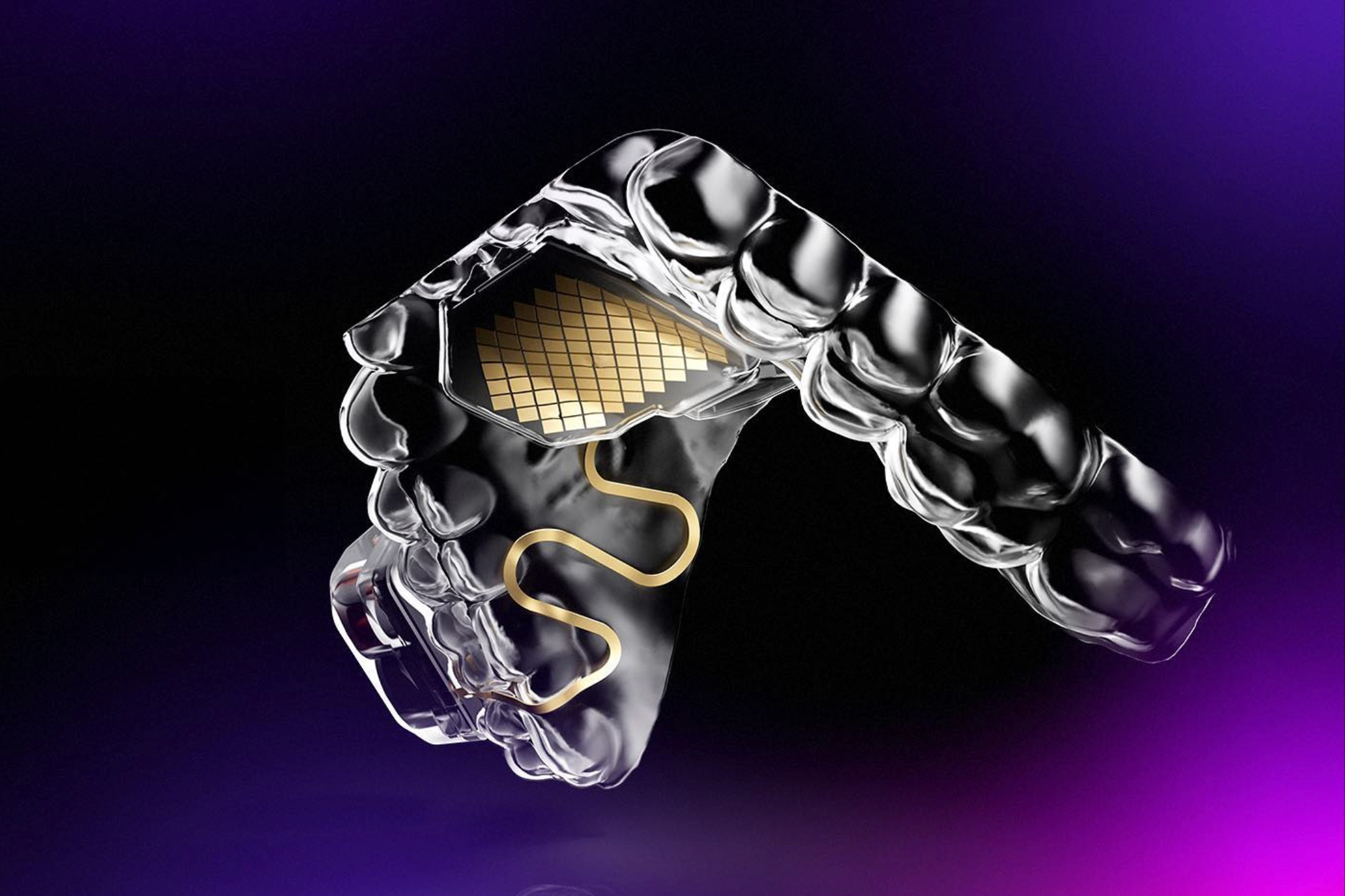

Today, Vega is pursuing that vision as co-founder and CEO of Augmental, a startup developing technology that lets people with movement impairments interact with their devices. Its flagship product, the MouthPad, allows users to control computers, smartphones, and tablets through tongue and head movements. The device uses a pressure-sensitive touch pad that sits on the roof of the mouth, together with motion sensors, to translate these gestures into real-time cursor commands sent over Bluetooth.

“Interestingly, a significant part of our brain is dedicated to tongue movement control,” Vega explains. “The tongue, composed of eight muscles, mostly comprises slow-twitch fibers, making it less prone to fatigue. This inspired me to leverage that potential.”

People with spinal cord injuries are already benefiting from the MouthPad, using it to navigate their devices independently. One user, a quadriplegic college student studying math and computer science, praises the device for allowing her to take notes and study in environments where traditional assistive technologies fall short. “Now, she can engage in class, play games with friends, and experience a newfound independence,” Vega shares. “Her mom told us receiving the MouthPad was the most transformative moment since her injury.”

This encapsulates Augmental’s overarching mission: to enhance the accessibility of essential technologies in our daily lives.

Enhancing Technology Accessibility

In 2012, during his freshman year at UC Berkeley, Vega crossed paths with future co-founder Corten Singer, sharing his ambition to join MIT’s Media Lab. Four years later, he realized this aspiration by joining the Fluid Interfaces research group, led by Pattie Maes, a prominent figure in Media Arts and Sciences at MIT.

“I had only one graduate program in mind, and it was the Media Lab. I believed it was the only environment where I could truly pursue my passion for augmenting human capabilities,” Vega stated. During his time at the lab, he studied microfabrication, signal processing, and electronics, all while developing wearable technologies aimed at enhancing online access, improving sleep, and regulating emotions.

“The Media Lab felt like Disneyland for makers,” Vega remarked. “It was a playground where I could explore and create freely.” Although he was initially drawn to brain-machine interfaces, an internship at Neuralink led him to look for alternative solutions.

[…] He came to realize that while brain implants hold remarkable potential, their long development timelines could delay help for people who need it now.

In his final semester at MIT, Vega built what he describes as “a lollipop equipped with sensors” to test mouth-based computer interaction. The results were promising. “I reached out to Corten, a co-founder, and explained how this technology could transform countless lives and redefine human-computer interaction,” Vega said.

With support from MIT resources, including the Venture Mentoring Service and the MIT I-Corps program, and initial funding from the E14 Fund, Augmental officially launched after Vega graduated in late 2019.

Each MouthPad is designed from a 3D model based on a scan of the user’s mouth, ensuring a customized fit. The device is then 3D printed in dental-grade materials, and the electronic components are added.

Users move the cursor by sliding their tongue in different directions, with a sip gesture for a right click and a press on the palate for a left click. People with limited tongue control can use alternative gestures, and those with head mobility can use head tracking to move the cursor.
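As a rough illustration of the gesture mapping described above, the sketch below routes three kinds of input to cursor actions. It is not Augmental's firmware or API: the names `Gesture`, `InputEvent`, `Cursor`, and `apply_event`, and the `sensitivity` parameter, are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Gesture(Enum):
    """Hypothetical gesture types, named after the article's description."""
    TONGUE_SLIDE = auto()   # tongue sliding across the touch pad
    PALATE_PRESS = auto()   # press against the palate
    SIP = auto()            # sip gesture


@dataclass
class InputEvent:
    gesture: Gesture
    dx: float = 0.0  # horizontal slide amount (only used for TONGUE_SLIDE)
    dy: float = 0.0  # vertical slide amount (only used for TONGUE_SLIDE)


@dataclass
class Cursor:
    x: float = 0.0
    y: float = 0.0


def apply_event(cursor: Cursor, event: InputEvent, sensitivity: float = 1.0) -> str:
    """Update the cursor for one event and return the resulting action name."""
    if event.gesture is Gesture.TONGUE_SLIDE:
        # A slide moves the cursor; sensitivity is an assumed per-user setting.
        cursor.x += event.dx * sensitivity
        cursor.y += event.dy * sensitivity
        return "move"
    if event.gesture is Gesture.PALATE_PRESS:
        return "left_click"
    if event.gesture is Gesture.SIP:
        return "right_click"
    raise ValueError(f"unsupported gesture: {event.gesture}")


if __name__ == "__main__":
    cursor = Cursor()
    for ev in (InputEvent(Gesture.TONGUE_SLIDE, dx=3.0, dy=-1.0),
               InputEvent(Gesture.PALATE_PRESS),
               InputEvent(Gesture.SIP)):
        print(apply_event(cursor, ev), cursor)
```

Keeping the gesture-to-action mapping in one function is one way such an interface could be adapted per user, for example by swapping in alternative gestures for someone with limited tongue control.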

“Our vision is to create a multimodal interface that adapts to each user’s abilities, catering to diverse conditions,” Vega emphasizes.

Expanding the Reach of MouthPad Technology

Currently, many MouthPad users are people with spinal cord injuries, some of whom cannot move their hands or heads. Gamers and programmers have also adopted the device. The most frequent users reportedly use the MouthPad for up to nine hours a day.

“This level of usage is incredible—it speaks volumes about how well our solution fits into their daily routines,” Vega explains.

Looking ahead, Augmental aims to secure U.S. Food and Drug Administration (FDA) clearance within the next year. Clearance would allow users to control wheelchairs and robotic arms and would open up insurance reimbursement, making the product more accessible.

The company is also working on the next iteration of the system, which will respond to whispers and subtle movements of the internal speech organs, addressing the needs of users with impaired lung function.

Vega expresses optimism about advancements in AI and associated hardware. Regardless of how the digital landscape evolves, he envisions Augmental as a tool that can serve a broad audience. “Our ultimate goal is to establish a robust, private interface to intelligence, possibly representing the most expressive, wearable, and hands-free input system created by humanity,” he states.

Photo credit & article inspired by: Massachusetts Institute of Technology