Imagine trying to capture a picture of each of North America’s approximately 11,000 tree species. Even then, you’d only see a tiny fraction of the millions of images in nature image datasets. These extensive collections, which include everything from butterflies to humpback whales, serve as invaluable resources for ecologists. They provide crucial insights into unique organism behaviors, rare conditions, migration patterns, and responses to climate change and pollution.

However, despite their vastness, these nature image datasets could be significantly more useful. Searching for relevant images can take a considerable amount of time, making it tedious for researchers. Wouldn’t it be fantastic to have an automated research assistant? This is where artificial intelligence systems known as multimodal vision language models (VLMs) come into play. Trained on both text and imagery, VLMs can more effectively identify specifics, such as particular trees accentuating the backdrop of a photograph.

The big question is, to what extent can VLMs aid nature researchers in retrieving images? A collaborative team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), University College London, and iNaturalist set up a test to explore this. The goal was to determine how well each VLM could locate and organize results from their extensive “INQUIRE” dataset, consisting of 5 million wildlife photos paired with 250 search prompts designed by ecologists and biodiversity experts.

Searching for the Elusive Frog

During the tests, researchers discovered that larger, more sophisticated VLMs—those trained on substantial datasets—can effectively deliver the results researchers seek. The models showed a fair degree of competency with straightforward visual queries, such as identifying debris on a reef. However, they hit a wall with more complex queries using expert knowledge, like recognizing biological conditions or behaviors. For instance, VLMs adeptly identified jellyfish on the shore but faltered with commands like “axanthism in a green frog,” a term that describes the condition affecting a frog’s ability to produce yellow pigmentation.

These findings suggest that current models require significantly more domain-specific training data to tackle complex questions. Edward Vendrow, an MIT PhD student and CSAIL affiliate who co-led the dataset research featured in a new paper, expresses optimism about VLMs becoming reliable research aides. “Our aim is to create retrieval systems that precisely identify what scientists need for biodiversity monitoring and climate change analysis,” says Vendrow. “Multimodal models still face challenges in understanding intricate scientific terminology, yet INQUIRE will be a crucial benchmark for tracking improvements in their comprehension, eventually assisting researchers in effortlessly locating the specific images they desire.”

The experiments showed that the more extensive models were generally more efficient in both simple and complex searches, thanks to their broad training scope. They utilized the INQUIRE dataset to assess how well VLMs could filter a pool of 5 million images down to the top 100 most relevant results, known as “ranking.” For simpler queries like “a reef with manmade structures and debris,” relatively larger models such as SigLIP found consistent matches, whereas smaller CLIP models struggled. Vendrow mentions that larger VLMs are “just beginning to be helpful” when it comes to ranking more challenging queries.

In addition, Vendrow and his team tested how effectively multimodal models could re-rank those top 100 results, organizing which images were most relevant to a particular search. Even the more expansive language models trained on curated data like GPT-4o faced hurdles, achieving a precision score of only 59.6 percent—the best score obtained across all models.

The researchers shared these insights at the recent Conference on Neural Information Processing Systems (NeurIPS).

Deep Inquiries Into INQUIRE

The INQUIRE dataset comprises search queries developed in collaboration with ecologists, biologists, oceanographers, and other experts, pinpointing the types of images they would seek, including unique physical conditions and behaviors of animals. Annotators invested 180 hours sifting through the iNaturalist dataset, meticulously examining around 200,000 results to label 33,000 matches that corresponded to the prompts.

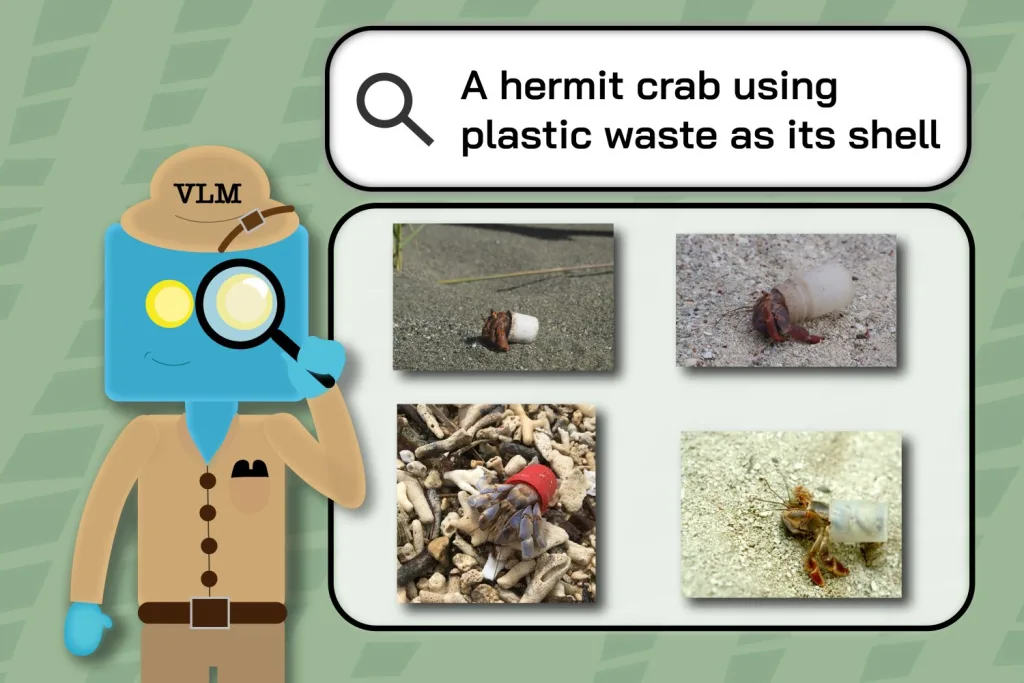

For example, the annotators employed queries such as “a hermit crab using plastic waste as its shell” and “a California condor tagged with a green ‘26’” to select subsets from the larger image dataset portraying these specific, unique occasions.

Once the annotations were complete, the team used the same queries to test how well VLMs could retrieve iNaturalist images. The annotators’ labels illuminated the models’ challenges in grasping scientific keywords, revealing that results often included images flagged as irrelevant. For example, when prompted for “redwood trees with fire scars,” VLMs sometimes returned images of trees devoid of any markings.

“This meticulous data curation focuses on encapsulating genuine examples of scientific inquiries across various fields in ecology and environmental science,” states Sara Beery, MIT’s Homer A. Burnell Career Development Assistant Professor, CSAIL principal investigator, and co-senior author of the research. “Understanding VLMs’ current capabilities in these potentially impactful scientific contexts is vital. It also highlights areas for improvement, particularly regarding technical terminology and the nuanced differences that distinguish categories essential for our collaborators.”

“Our results suggest that certain vision models are already sophisticated enough to assist wildlife scientists in retrieving particular images, yet many intricate tasks remain challenging for even the best-performing models,” Vendrow explains. “Although INQUIRE’s focus lies in ecology and biodiversity monitoring, its diverse queries indicate that VLMs excelling on INQUIRE could also thrive in analyzing extensive image collections in other observation-heavy disciplines.”

Curiosity Fuels Discovery

Pushing their project further, the researchers are collaborating with iNaturalist to develop a query system tailored to help scientists and other inquisitive individuals discover the images they genuinely want to see. Their working demo allows users to filter searches by species, streamlining the process of finding relevant results, such as the myriad eye colors of cats. Vendrow and co-lead author Omiros Pantazis, a recent PhD recipient from University College London, are also focused on enhancing the re-ranking system to improve outcomes.

Justin Kitzes, an Associate Professor at the University of Pittsburgh, underscores INQUIRE’s role in uncovering secondary data. “Biodiversity datasets are becoming increasingly unwieldy for any single scientist to analyze,” observes Kitzes, who is not involved in the research. “This study sheds light on a complex, unsolved problem: how to efficiently search through extensive data using questions that transcend simple inquiries to delve into individual traits, behavior, and species interactions. Accurately deciphering such complexities in biodiversity image data is crucial for advancing fundamental science and fostering real-world impacts on ecology and conservation.”

Along with Vendrow, Pantazis, and Beery, the team includes iNaturalist software engineer Alexander Shepard, along with professors Gabriel Brostow and Kate Jones from University College London, University of Edinburgh Associate Professor Oisin Mac Aodha, and University of Massachusetts at Amherst Assistant Professor Grant Van Horn, who are all co-senior authors. Their work received support from the Generative AI Laboratory at the University of Edinburgh, the U.S. National Science Foundation/Natural Sciences and Engineering Research Council of Canada Global Center on AI and Biodiversity Change, a Royal Society Research Grant, and the Biome Health Project funded by the World Wildlife Fund United Kingdom.

Photo credit & article inspired by: Massachusetts Institute of Technology